The Kubernetes-powered Open Hybrid Cloud

Developers are focused on creating and improving software. To help them succeed, Kubernetes clusters can provide them with the right tools and interfaces, regardless of whether the infrastructure is on premises or in a public cloud.

We can distinguish between four infrastructure footprints to run Kubernetes:

- Bare metal hosts

- Virtual machines in traditional virtualization environments

- Private cloud infrastructure

- Public cloud infrastructure

Supporting developers and their applications properly involves providing a consistent user experience across these footprints.

In this article, we are going to focus on the private cloud infrastructure, specifically on OpenStack.

Why Kubernetes on OpenStack

Container platforms such as Kubernetes are workload-driven: the applications being run and developed are the main focus. That does not mean the underlying infrastructure plays only a small role.

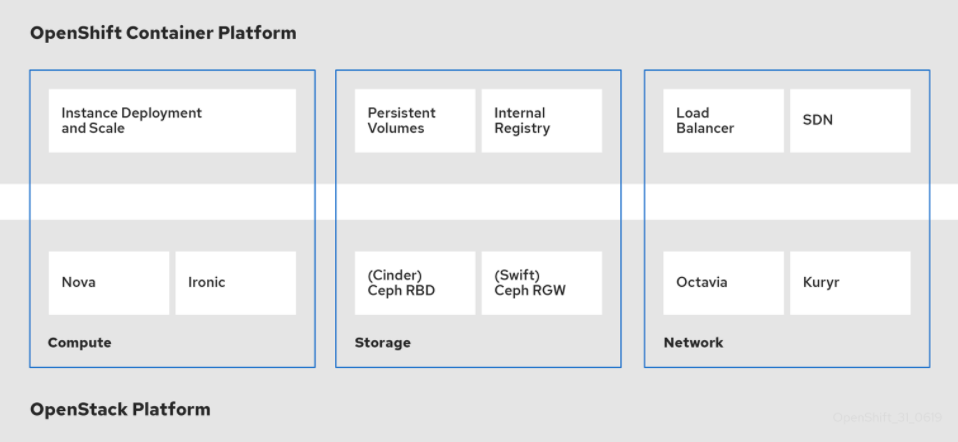

Kubernetes and OpenStack are deeply integrated. This integration is the result of years of development within two platforms, where compute, storage and networking resources are consumed from OpenStack by the Kubernetes clusters.

OpenStack has made the integration consistent and predictable thanks to the APIs that Kubernetes uses to consume these resources seamlessly. OpenStack itself is an abstraction layer for these resources across the datacenter. It lets its consumers, Kubernetes being one of them, use compute, storage and networking resources in a consistent manner.

OpenStack has been designed for large scale in the datacenter, which allows Kubernetes clusters running on OpenStack to scale as required.

Integration points

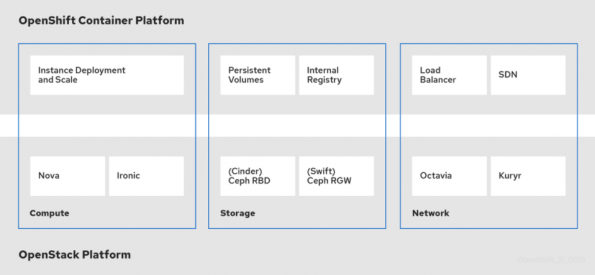

As pointed out above, the three main integration points between Kubernetes and OpenStack are compute, storage and networking. Each of these resources is consumed via an API through the integration points shown in the diagram above.

As you can see, we can even deploy Kubernetes clusters on bare metal nodes managed by OpenStack in the same way you would manage OpenStack virtual instances.

Some of these integration points optimize the performance of the container applications running on Kubernetes. As an example, Kuryr is a Kubernetes Container Network Interface (CNI) specifically designed for integrating with OpenStack. We have seen network performance improve by up to 9x when using Kuryr as the CNI (see the blog post describing these integration points for details).

Architecture

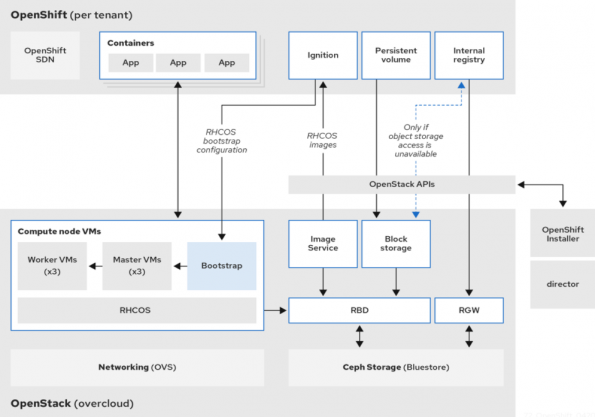

When it comes to designing your private cloud with OpenStack and Kubernetes, there are a number of decisions you will need to make. Red Hat has defined a Reference Architecture to help you understand all the details about this integration, including a solid baseline for defining your own architecture.

Strategy and vision

At Red Hat, we are supporting a large number of OpenStack users who are running Kubernetes or are developing their strategy utilizing containerised applications. To continue supporting these users, we are committed to continually improving this integration in different ways. For example, user experience is an important area for us. We want to allow as many users as possible to get up to speed with Kubernetes on their OpenStack clusters. OpenShift has played a key role in providing easy to install and easy to use Kubernetes clusters, thanks to the OpenShift installer, which is able to create all the OpenStack resources required by the cluster dynamically. Improvements in this area involve addressing new use cases and simplifying the installation workflows.

Another strategic area is the ability to use bare metal nodes provided by OpenStack to deploy Kubernetes clusters. Using bare metal for Kubernetes is an incredibly popular option. Joe Fernandes published a great blog post about this that I recommend reading.

Finally, we are seeing great momentum with OpenStack in the telecommunications industry, which is bringing new use cases to the table such as running Kubernetes applications for radio access networks (RAN) and edge computing. Here’s another great article on this topic and other related use cases.

Networking deployment options for Kubernetes on OpenStack

Deployments of Kubernetes on OpenStack have two networking CNI/plugin options for the primary interface that avoid double encapsulation (overlays on top of overlays):

- Kuryr CNI and Neutron tenant networks

- Kubernetes SDN and Neutron Provider Networks

OpenShift 4.x secondary interfaces on Multus CNI typically use Neutron Provider Networks to enable direct L2 networking to the pods.

Deployment with Kuryr CNI

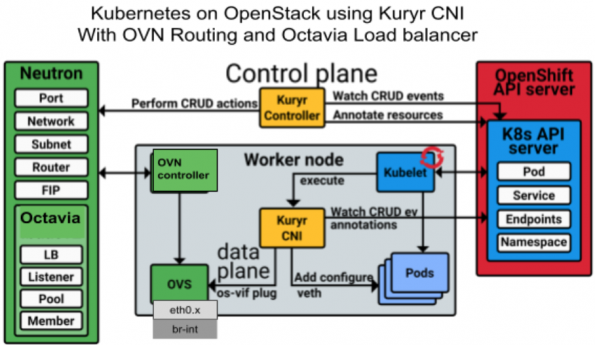

When using Kuryr CNI, OpenStack implements the network policies and load balancing services for Kubernetes pods. Kuryr CNI communicates with the Neutron API to set up security groups and Octavia load balancers.

Kuryr CNI supports both the ML2/OVS and ML2/OVN Neutron plugins. It avoids double encapsulation from overlays by placing pods directly on OpenStack self-service tenant networks. Kuryr CNI uses VLAN trunking to tag and untag incoming and outgoing Kubernetes pod traffic.

Kuryr with ML2/OVN has several management and performance advantages:

- VM IP address management with distributed DHCP and metadata services

- East-West traffic using OVN Geneve overlays with distributed routing, no need to go through a central Neutron gateway router

- North-South traffic with default Distributed Virtual Routing (DVR) using Floating IPs (distributed NAT). No centralized Neutron router required

- Network policy via OpenStack security groups using OVN ACLs, with stateful and stateless firewalls (coming soon)

- East-West distributed load balancing with Octavia OVN driver

Deployment with Neutron Provider Networks

There are cases where enterprise data centers have special requirements, such as:

- No support for overlay networking like VXLAN or Geneve

- Floating IPs with NAT are not supported for security reasons

- All traffic needs to go through an external firewall or VPN service like F5 before being forwarded

- External traffic should not pass through a Neutron gateway router for bare-metal deployments or for performance requirements.

- Direct L2 networking to the pods for faster external connectivity

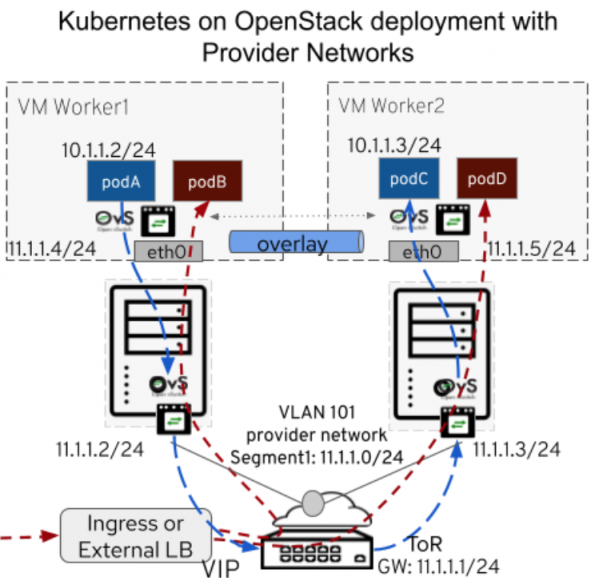

In these cases, the goal is to directly connect to an external network and use physical infrastructure to route the traffic. OpenStack Neutron Provider Networks provides access to administratively managed external networks.

Now that Kubernetes supports bring-your-own external connectivity, clusters can use OpenStack Neutron Provider Networks in this way, with the following consequences:

- Floating IPs with NAT are no longer required.

- There is no need for Kuryr, since double encapsulation is no longer a problem.

- OpenStack Neutron can still provide network policy with security groups, but most other services, including routing and load balancing, are provided by Kubernetes.

- Kubernetes also adds the option to bring your own external load balancer, such as F5, OpenStack Octavia or Kubernetes Ingress.

In the example above, podA can communicate with podC over overlays managed by Kubernetes tenant networking, while the traffic is routed over the VLAN 101 provider network (11.1.1.0/24) with the external top of rack (ToR) router as the default gateway (11.1.1.1).

There are some limitations. The Kubernetes in-tree cloud provider for OpenStack uses Terraform, which makes it difficult to use Provider Networks because they require admin privileges and the metadata service to be enabled. As Kubernetes moves to out-of-tree cloud providers for OpenStack, these limitations will be overcome.
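As a rough illustration of the provider network in this example, the sketch below builds Neutron API request bodies for a VLAN 101 network and its 11.1.1.0/24 subnet, and checks that the ToR gateway address lies inside the subnet. The network name and the physical network label `physnet1` are hypothetical; the article does not name them.

```python
import ipaddress


def provider_network_payloads(name, physnet, vlan_id, cidr, gateway_ip):
    """Build Neutron request bodies for a VLAN provider network and its subnet."""
    subnet = ipaddress.ip_network(cidr)
    gateway = ipaddress.ip_address(gateway_ip)
    if gateway not in subnet:
        raise ValueError(f"gateway {gateway} is outside {subnet}")

    network_body = {
        "network": {
            "name": name,
            "provider:network_type": "vlan",
            "provider:physical_network": physnet,  # hypothetical label
            "provider:segmentation_id": vlan_id,
        }
    }
    # The subnet body still needs a "network_id" once the network exists.
    subnet_body = {
        "subnet": {
            "name": f"{name}-subnet",
            "ip_version": subnet.version,
            "cidr": str(subnet),
            "gateway_ip": str(gateway),  # the external ToR router
        }
    }
    return network_body, subnet_body


network_body, subnet_body = provider_network_payloads(
    name="provider-vlan101",  # hypothetical name
    physnet="physnet1",
    vlan_id=101,
    cidr="11.1.1.0/24",
    gateway_ip="11.1.1.1",
)
```

Creating provider networks normally requires admin privileges in OpenStack, so these bodies would be submitted by a cloud administrator.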

Kubernetes benefits from improvements in OpenStack security and load balancing services

In the following topics, we will discuss key improvements in the OpenStack services which are beneficial for Kubernetes use cases.

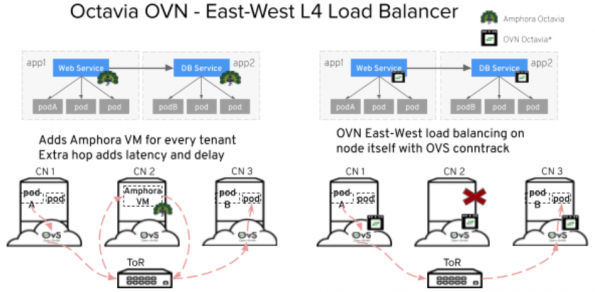

Load balancing with Octavia OVN driver

This option is available when deploying with Kuryr CNI using the ML2/OVN plugin, for load balancing a Kubernetes service between pods (east-west load balancing). The OVN load balancer avoids the CPU overhead of an Amphora VM and also avoids the added network latency, since it is available on the node itself.

In this example, Web Service app1 on podA needs to connect to a DB service app2 on podB via an Octavia load balancer service. The first option is the Octavia Amphora driver, which needs to spawn an Amphora VM per tenant; traffic then has to traverse an extra hop, which adds network latency and consumes additional CPU resources. The second option uses OVN load balancing, available on every node, with OVS connection tracking.

However, the Octavia OVN provider driver has some known limitations, for example:

- It supports only L4 (TCP/UDP) load balancing (no L7 HTTP load balancing capabilities)

- It has no support for health monitoring and relies on Kubernetes health checks for members
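The trade-off between the two drivers can be summarised as a small decision helper. This is only a sketch of the reasoning above, not part of Octavia or Kuryr; the function name and its parameters are hypothetical, and the returned strings are the Octavia provider names `amphora` and `ovn`.

```python
def choose_octavia_provider(needs_l7, needs_health_monitor, ml2_ovn_available):
    """Pick an Octavia provider driver from the constraints described above."""
    if not ml2_ovn_available:
        # The OVN driver is only an option with the ML2/OVN plugin.
        return "amphora"
    if needs_l7 or needs_health_monitor:
        # The OVN driver is L4 only and has no health monitoring.
        return "amphora"
    # L4 east-west traffic: balance on the node itself, no extra VM hop.
    return "ovn"


# East-west TCP service between pods, relying on Kubernetes health checks.
provider = choose_octavia_provider(
    needs_l7=False, needs_health_monitor=False, ml2_ovn_available=True
)
```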

Improved performance with multi-tier applications using OVN ACLs (security groups)

In Kubernetes, pods are transient and there is constant churn. As pods are continuously being added and removed, OpenStack OVN logical switches (networks) and ports are added and removed. Kubernetes network policies, translated as OpenStack security groups into OVN ACLs, need to be updated continuously. As a result, there is constant computational overhead: the CPU is always busy processing logical rules or OVN ACLs on the central OVN databases and worker nodes.

OVN added several key features to improve CPU efficiency and reduce latency by over 50% on central and worker nodes when processing OVN ACLs:

- OVN incremental processing keeps track of rule changes and only affected nodes need to recompute rules

- OVN port groups and address sets: tenant network ports and IPs are added to port groups and address sets. This allows OVN ACLs to be applied at the port group level instead of at the individual port level, and IP address matching is done using address sets. This reduces the explosion of rules on each tenant port and for each tenant IP.

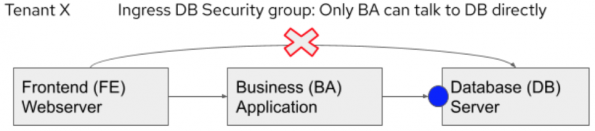

In this example, the web server of a multi-tier application for Tenant X cannot connect directly to the backend Database (DB) server but must go through the Business Application’s (BA) logic first.

An ingress security group needs to be created for the Database tier, say DB_SG, that only allows IP addresses from the Business Application pods to connect to the Database server pods. An OVN port group is created for each application tier, and as pods are created for the Database (DB) server, their ports are added to the DB port group @pg_DB-uuid. An OVN address set is automatically created to include the IP addresses of ports from a specific port group. For example, when a pod for the Business Application (BA) is created, its port is added to the BA port group @pg_BA-uuid and its IP address is added to the BA address set $pg_BA-uuid_ip4. The ingress DB security group can then be applied at the DB port group level with a single match:

outport == @pg_DB-uuid && ip4 && ip4.src == $pg_BA-uuid_ip4
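To make the saving concrete, here is a minimal sketch that rebuilds the match expression above from a port group and its address set, and compares it with a simplified per-port model in which every destination port needs one rule per source IP. The helper names are hypothetical, the `pg_..-uuid` names are the article's placeholders, and the pod counts are made up for illustration.

```python
def address_set_for(port_group, ip_version=4):
    """Name of the address set derived from a port group."""
    return f"{port_group}_ip{ip_version}"


def ingress_acl_match(dest_port_group, source_address_set):
    """Match traffic delivered to a port group from IPs in an address set."""
    return (
        f"outport == @{dest_port_group} && ip4 "
        f"&& ip4.src == ${source_address_set}"
    )


def per_port_rule_count(n_dest_ports, n_source_ips):
    """Simplified model: one rule per (destination port, source IP) pair."""
    return n_dest_ports * n_source_ips


match = ingress_acl_match("pg_DB-uuid", address_set_for("pg_BA-uuid"))

# Illustrative numbers only: 50 DB pods and 200 BA pods.
rules_without_groups = per_port_rule_count(50, 200)
rules_with_groups = 1  # the single match built above
```

With groups, adding or removing a pod only changes the membership of a port group or address set; the ACL itself stays the same.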

Telco use cases – in particular 5G

5G is pushing for cloud native network functions, meaning that network functions implemented so far as virtual machines, in the context of 4G, are now expected to run as containers on bare-metal. However, not all network functions are going to be containerized. Existing 4G VNFs are not planned to be containerized. In that context, as 4G is here to stay for years, what are the options to run 4G and 5G, meaning VMs orchestrated by OpenStack alongside containers orchestrated by Kubernetes?

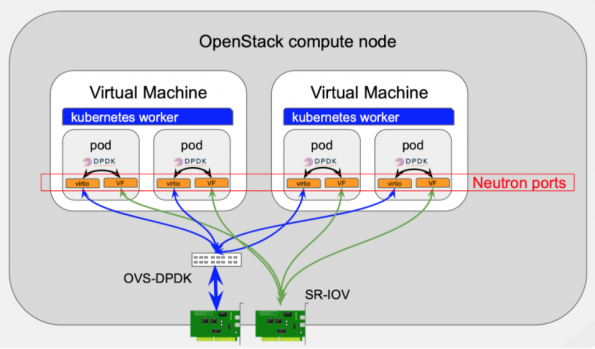

One possible answer is to have two interconnected clusters, one OpenStack and one Kubernetes. However, this doesn’t permit mixing VMs and containers on the same cluster. Running VMs on top of Kubernetes is also possible, with KubeVirt for instance. However, for existing VMs orchestrated via OpenStack APIs, the orchestration layer would need to be re-implemented via a Kubernetes Operator.

Finally, running Kubernetes inside OpenStack VMs provides all the benefits of a private cloud to the Kubernetes cluster and to the containers it orchestrates. First, it abstracts Kubernetes from the underlying hardware, which is virtualization’s most obvious benefit. Then, the network interconnection between containers and VMs is taken care of by Neutron, as a built-in OpenStack feature. This is very handy for CNF development, as every developer can get their own set of Kubernetes clusters and experiment with various network configurations without needing access to dedicated physical servers; most CNF developers have to share bare-metal servers with their whole team.

Last, the containers’ dataplane performance is identical to bare-metal performance, and we demonstrated this live on stage at the Denver Open Infrastructure Summit 2019. The idea that containers are faster than VMs, debatable but measurable for enterprise use cases, has never been true for DPDK applications. Thanks to the use of huge pages, the code path is exactly the same on bare metal and in VMs, as huge pages make it possible to avoid TLB misses. As DPDK applications do not use interrupts, there are no hypercalls on the packet’s code path (interrupts are implemented as hypercalls in VMs).

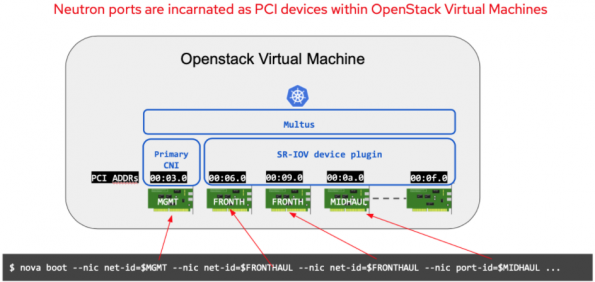

Telecom applications are “Network Functions” processing packets between a set of input and output interfaces. When running on bare-metal, those applications access network cards or network card virtual functions (SR-IOV VFs). Telecom applications can run as designed in OpenStack virtual machines as the virtual machines expose paravirtualized network cards (OVS-DPDK ports) or permit access to either the bare-metal network card or to a network card virtual function exposed by KVM. In all cases, a network card is a PCI device inside the VM, and this PCI device corresponds to a Neutron port.

Take the example above, in which a Kubernetes cluster needs to be connected to 3 different Neutron networks, with multiple pods connected to each of those networks.

Here is how we proceed:

Boot an OpenStack VM with as many Neutron ports as needed to connect all of the pods on the 3 networks: a single management interface using overlay will be used, and one Neutron port per pod for the FRONTHAUL and for the MIDHAUL network. Each and every Neutron port is inside the VM as a PCI device, so we “just” need to configure the proper CNI for those PCI devices (the primary CNI on the management interface, and SR-IOV device plugin for all others).

This solution makes it possible to attach any number of Neutron ports, of any Neutron port type, to pods: they can be OVS-ML2 or OVN-ML2 ports, based on OVS TC Flower hardware offload or OVS-DPDK; they can be SR-IOV ports; or they can be ports from any Neutron SDN plugin.

The mapping between Neutron networks and Kubernetes CRs is done by parsing the virtual machine metadata, typically available via the Nova config drive.
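The article does not say which metadata file is parsed, so the following is only a sketch under one assumption: that the mapping is derived from the config drive's `network_data.json`, whose `links` entries carry interface MAC addresses and whose `networks` entries reference a link and a Neutron `network_id`. The sample data, including the network IDs and MAC addresses, is hypothetical (real IDs are UUIDs), and only the fields used here are shown.

```python
# Trimmed, hypothetical sample in the shape of network_data.json.
SAMPLE_NETWORK_DATA = {
    "links": [
        {"id": "tap-mgmt", "ethernet_mac_address": "fa:16:3e:00:00:01"},
        {"id": "tap-fh-1", "ethernet_mac_address": "fa:16:3e:00:00:02"},
        {"id": "tap-fh-2", "ethernet_mac_address": "fa:16:3e:00:00:03"},
        {"id": "tap-mh-1", "ethernet_mac_address": "fa:16:3e:00:00:04"},
    ],
    "networks": [
        {"id": "network0", "link": "tap-mgmt", "network_id": "net-mgmt"},
        {"id": "network1", "link": "tap-fh-1", "network_id": "net-fronthaul"},
        {"id": "network2", "link": "tap-fh-2", "network_id": "net-fronthaul"},
        {"id": "network3", "link": "tap-mh-1", "network_id": "net-midhaul"},
    ],
}


def macs_by_network(network_data):
    """Group the VM's interface MAC addresses by Neutron network ID."""
    mac_by_link = {
        link["id"]: link["ethernet_mac_address"]
        for link in network_data.get("links", [])
    }
    result = {}
    for net in network_data.get("networks", []):
        mac = mac_by_link.get(net["link"])
        if mac is None:
            continue  # network entry without a matching link
        result.setdefault(net["network_id"], []).append(mac)
    return result


mapping = macs_by_network(SAMPLE_NETWORK_DATA)
```

Inside the VM, each MAC address identifies one PCI network device, so a mapping like this is enough to decide which devices to hand to which pod network.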

In Conclusion

OpenStack has come a long way to become a robust and stable platform for Kubernetes workloads with shared infrastructure and services for bare metal nodes, VMs and container applications. We now have deployment reference architectures for several use cases with examples, customizable options, recommendations and limitations.

Kubernetes on OpenStack provides flexible deployment options based on requirements:

- Enterprise use cases with significant East-West traffic can deploy with Kuryr CNI with improved tenant networking performance and efficient OpenStack load balancing and network policies

- Telco/NFV use cases with mostly North-South traffic can deploy with OpenStack provider networks and fast datapath options of SR-IOV, OVS-DPDK and hardware offload options with SmartNICs and FPGAs to Kubernetes pods with bare-metal performance.

Get Involved

This article is a summary of the Open Infrastructure Summit session, Run your Kubernetes cluster on OpenStack in Production.

