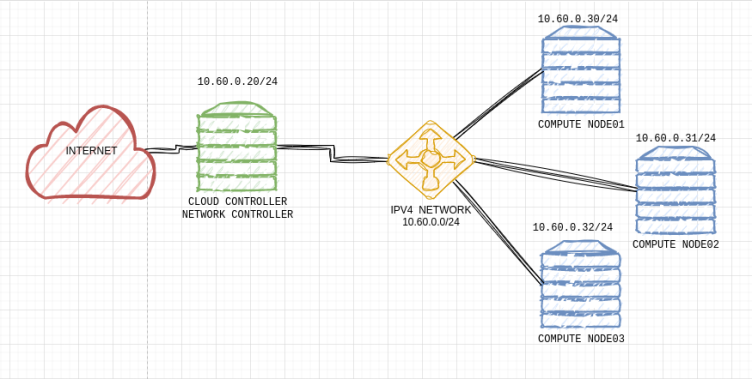

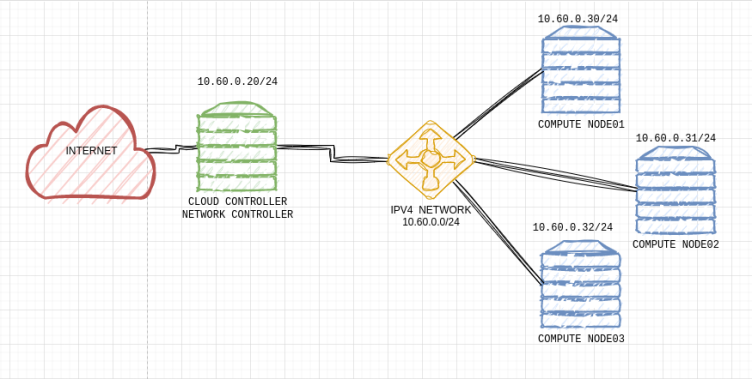

The image shows my OpenStack lab topology. I’m running four KVM virtual machines: one acting as the cloud and network controller, and three as compute nodes.

In a project named finance, let’s create a new storage volume and attach it to a VM.

First, let’s source the run control file so that we can talk to the identity service with the correct credentials:

source keystonerc_finance

Let’s now create a new volume. In this case, let’s use an image to create a volume with some data in it:

    [root@cloudcontroller ~(keystone_tester)]# openstack volume create --size 1 --image cirros financev5
    +---------------------+--------------------------------------+
    | Field               | Value                                |
    +---------------------+--------------------------------------+
    | attachments         | []                                   |
    | availability_zone   | nova                                 |
    | bootable            | false                                |
    | consistencygroup_id | None                                 |
    | created_at          | 2023-03-10T18:33:57.126229           |
    | description         | None                                 |
    | encrypted           | False                                |
    | id                  | e4de3df9-3a59-452e-96a0-48f968418777 |
    | multiattach         | False                                |
    | name                | financev5                            |
    | properties          |                                      |
    | replication_status  | None                                 |
    | size                | 1                                    |
    | snapshot_id         | None                                 |
    | source_volid        | None                                 |
    | status              | creating                             |
    | type                | iscsi                                |
    | updated_at          | None                                 |
    | user_id             | 2debc5cd0aad4f1ca3a2b846b1ebf3b0     |
    +---------------------+--------------------------------------+
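The output above reports the status as creating, so the volume may not be usable right away. A minimal sketch of a polling helper follows, assuming the openstack client is on the PATH and the credentials are already sourced. The function name wait_for_volume_status and its retry defaults are my own illustration, not part of OpenStack.

```shell
# Poll a Cinder volume until it reaches the wanted status, or give up.
# Usage: wait_for_volume_status <volume> <status> [max_tries] [delay_seconds]
# (hypothetical helper; the name and defaults are assumptions)
wait_for_volume_status() {
    local volume="$1"
    local wanted="$2"
    local tries="${3:-30}"
    local delay="${4:-2}"
    local status=""
    local i=0
    while [ "$i" -lt "$tries" ]; do
        # "-f value -c status" prints only the bare status string
        status="$(openstack volume show "$volume" -f value -c status)" || return 2
        if [ "$status" = "$wanted" ]; then
            return 0
        fi
        if [ "$status" = "error" ]; then
            echo "volume $volume entered the error state" >&2
            return 1
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "timed out waiting for $volume to become $wanted (last: $status)" >&2
    return 1
}
```

For example, `wait_for_volume_status financev5 available` could run before the attach step, and `wait_for_volume_status financev5 in-use` after it.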

Launch a new VM named bc3:

    [root@cloudcontroller ~(keystone_tester)]# openstack server create --image cirros --flavor m1.tiny --network finance-internal --security-group default --key-name finance-key bc3
    +-----------------------------+-----------------------------------------------+
    | Field                       | Value                                         |
    +-----------------------------+-----------------------------------------------+
    | OS-DCF:diskConfig           | MANUAL                                        |
    | OS-EXT-AZ:availability_zone |                                               |
    | OS-EXT-STS:power_state      | NOSTATE                                       |
    | OS-EXT-STS:task_state       | scheduling                                    |
    | OS-EXT-STS:vm_state         | building                                      |
    | OS-SRV-USG:launched_at      | None                                          |
    | OS-SRV-USG:terminated_at    | None                                          |
    | accessIPv4                  |                                               |
    | accessIPv6                  |                                               |
    | addresses                   |                                               |
    | adminPass                   | *******                                       |
    | config_drive                |                                               |
    | created                     | 2023-03-10T18:36:51Z                          |
    | flavor                      | m1.tiny (1)                                   |
    | hostId                      |                                               |
    | id                          | 72942a53-d028-40fa-96ce-34ca3e7da3b2          |
    | image                       | cirros (0832cf5e-702f-4ae8-a787-e34e7cb0b182) |
    | key_name                    | finance-key                                   |
    | name                        | bc3                                           |
    | progress                    | 0                                             |
    | project_id                  | 634d5b6437b545c6b278f1e85e16a44f              |
    | properties                  |                                               |
    | security_groups             | name='cdd45cab-0d69-4849-8fbe-8f7b7c0c829d'   |
    | status                      | BUILD                                         |
    | updated                     | 2023-03-10T18:36:51Z                          |
    | user_id                     | 2debc5cd0aad4f1ca3a2b846b1ebf3b0              |
    | volumes_attached            |                                               |
    +-----------------------------+-----------------------------------------------+

Finally, let’s attach the volume to the instance:

    [root@cloudcontroller ~(keystone_tester)]# openstack server add volume bc3 financev5
    +-----------------------+--------------------------------------+
    | Field                 | Value                                |
    +-----------------------+--------------------------------------+
    | ID                    | e4de3df9-3a59-452e-96a0-48f968418777 |
    | Server ID             | 72942a53-d028-40fa-96ce-34ca3e7da3b2 |
    | Volume ID             | e4de3df9-3a59-452e-96a0-48f968418777 |
    | Device                | /dev/vdb                             |
    | Tag                   | None                                 |
    | Delete On Termination | False                                |
    +-----------------------+--------------------------------------+

Next, let’s validate all the configurations made above.

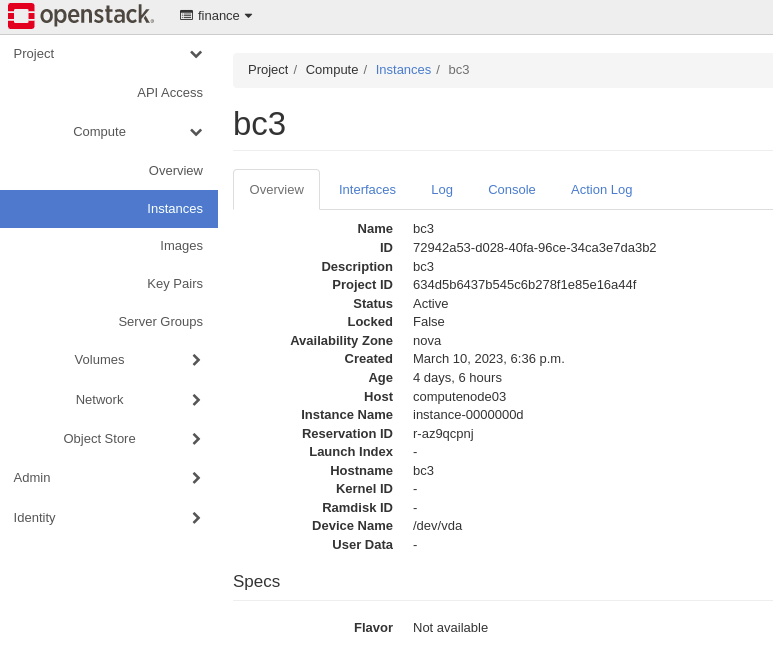

First, I will connect to the instance bc3 and inspect the attached volume. To do that, we need to know which compute node bc3 was deployed to. We can get that information from the BUI, specifically under Overview > Host, which here shows computenode03:

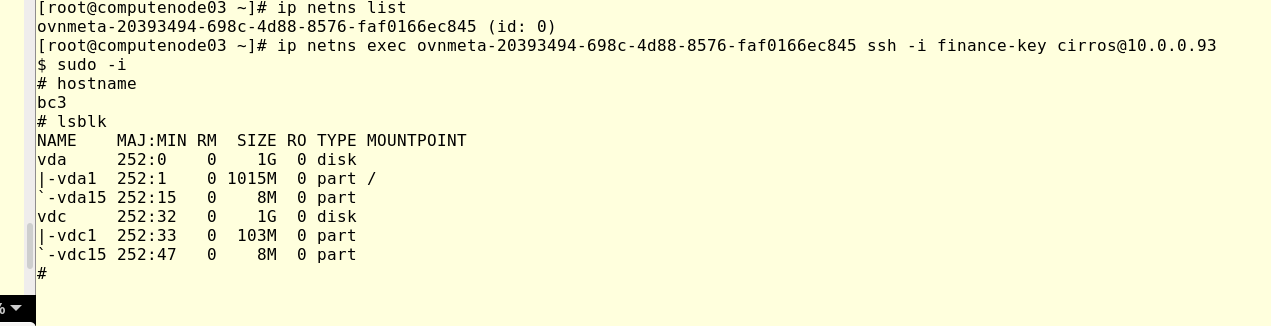
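The same host information can usually be read from the command line instead of the BUI. This is a small sketch under two assumptions: the sourced credentials have admin rights (the host attribute is normally hidden from regular project users), and the installed client still exposes the field under the name OS-EXT-SRV-ATTR:host. The function name server_host is hypothetical.

```shell
# Print the compute node (hypervisor host) an instance is running on.
# Usage: server_host <server-name-or-id>
# Assumes admin credentials; for non-admin users the field is typically absent.
server_host() {
    local server="$1"
    if [ -z "$server" ]; then
        echo "usage: server_host <server>" >&2
        return 2
    fi
    # "-f value -c <column>" prints just that column's value
    openstack server show "$server" -f value -c OS-EXT-SRV-ATTR:host
}
```

In this lab, `server_host bc3` would be expected to print the same host name the dashboard shows.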

After that, let’s log in to computenode03 via SSH so we can connect to bc3. To do that, first list the network namespaces on the node:

ip netns list

and

ip netns exec ovnmeta-20393494-698c-4d88-8576-faf0166ec845 ssh -i finance-key [email protected]

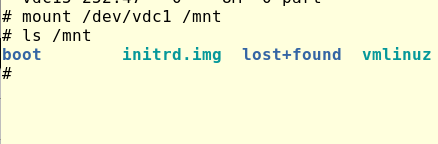

Once logged in, let’s try to access the new volume attached to bc3. In the image above, we can see that a second disk (vdc) is indeed available. Using the Linux mount command, we can mount it on /mnt and check its contents.

Run:

mount /dev/vdc1 /mnt

Then check the contents with ls:

ls /mnt

Just remember that we are running all of these commands inside the bc3 virtual machine, which is running on computenode03.

With the above, we validated how OpenStack allows attaching a volume to a VM. Now let’s detach the volume. On cloudcontroller, run:

[root@cloudcontroller ~(keystone_tester)]# openstack server remove volume bc3 financev5

The command above does not generate any output, so let’s validate again in bc3.

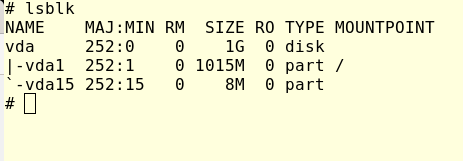

Running the Linux lsblk command inside bc3 lists the disks connected to the VM, as shown in the image above.

We can see that the new volume is no longer available in bc3.

Most of the work above was done from the point of view of the end user. Now let’s focus a bit on OpenStack itself.

In my lab, the block storage is running in cloudcontroller node and the enabled backend is LVM, so let’s check where the logical volumes were created and how bc3 is connecting to it.

To get the financev5 volume ID, list the volumes in the finance project:

    [root@cloudcontroller ~(keystone_tester)]# openstack volume list
    +--------------------------------------+---------------------+-----------+------+-------------+
    | ID                                   | Name                | Status    | Size | Attached to |
    +--------------------------------------+---------------------+-----------+------+-------------+
    | 355756ab-bc5d-4b09-bc7e-052b72dcfed3 | newvolume_from_snap | available |    1 |             |
    | e4de3df9-3a59-452e-96a0-48f968418777 | financev5           | available |    1 |             |
    | 2cfbd185-1bc9-48a5-83ed-344f692e51c6 | financev4           | available |    1 |             |
    | 5ca9d519-94c4-49ad-bad0-151f79c933c2 | financev2           | available |    1 |             |
    | 1aaa870e-addc-4f33-92a9-e4180332b351 | financev1           | available |    1 |             |
    +--------------------------------------+---------------------+-----------+------+-------------+

From the output above we can see e4de3df9-3a59-452e-96a0-48f968418777 as ID for volume financev5. Now, let’s check the Logical volume in the cloudcontroller:

    [root@cloudcontroller ~(keystone_tester)]# lvs | grep e4de3df9-3a59-452e-96a0-48f968418777
      volume-e4de3df9-3a59-452e-96a0-48f968418777 cinder-volumes Vwi-a-tz-- 1.00g cinder-volumes-pool 100.00

As we can see above, an LVM disk was created with the same ID as financev5 volume, and this is the LVM disk that was attached to bc3 previously.
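The mapping from a Cinder volume ID to its logical volume can be sketched with two small helpers. They assume Cinder's default naming template (volume-<ID>) and the cinder-volumes volume group seen in this lab. The function names are hypothetical, and a deployment with a customised template or volume group would need different values.

```shell
# Print the LV name the LVM backend uses for a Cinder volume ID,
# assuming the default "volume-<id>" naming template.
cinder_lv_name() {
    printf 'volume-%s\n' "$1"
}

# Print the device path of that LV.
# Usage: cinder_lv_path <volume-id> [volume-group]
# The volume group defaults to cinder-volumes, as in this lab.
cinder_lv_path() {
    local vg="${2:-cinder-volumes}"
    printf '/dev/%s/%s\n' "$vg" "$(cinder_lv_name "$1")"
}
```

On cloudcontroller, something like `lvs "$(cinder_lv_path "$(openstack volume show financev5 -f value -c id)")"` should then show the same logical volume as the grep above, without copying the ID by hand.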

Now, how can an instance running in computenode03 access an LVM disk configured in cloudcontroller?

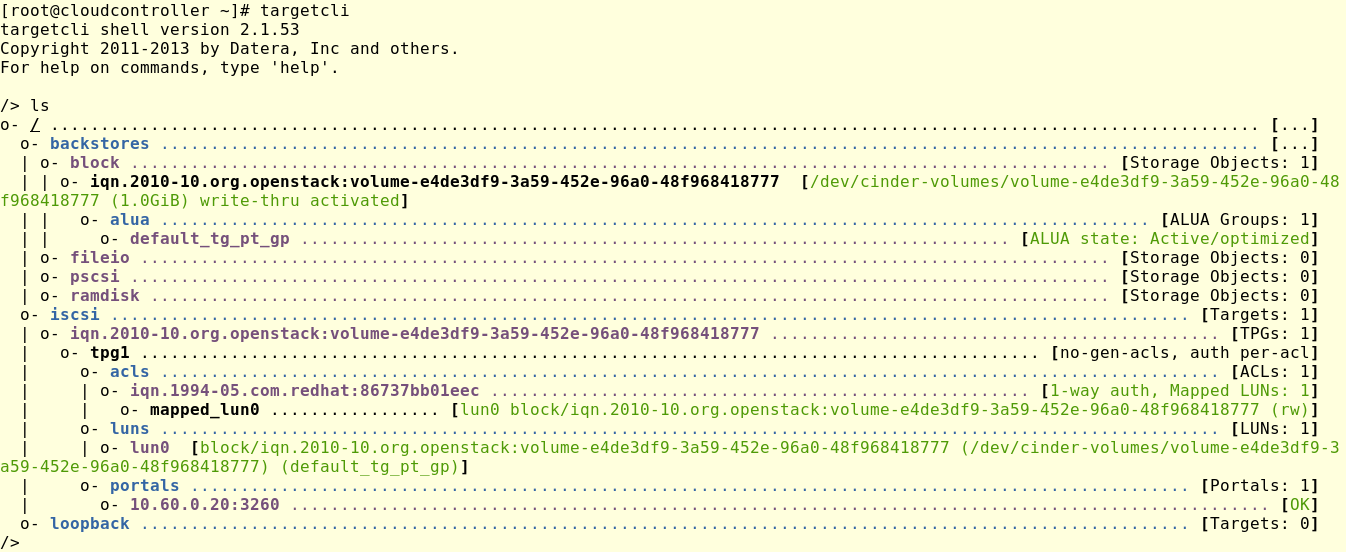

OpenStack uses iSCSI to provide block storage to the instances. We can check this as follows.

On cloudcontroller, run targetcli. A new prompt appears; at that prompt, run ls:

    [root@cloudcontroller ~]# targetcli
    targetcli shell version 2.1.53
    Copyright 2011-2013 by Datera, Inc and others.
    For help on commands, type 'help'.

    /> ls

In the image above, we can see that our volume e4de3df9-3a59-452e-96a0-48f968418777 was added as an iSCSI target.

Now, from computenode03:

    [root@computenode03 ~]# iscsiadm -m session
    tcp: [4] 10.60.0.20:3260,1 iqn.2010-10.org.openstack:volume-e4de3df9-3a59-452e-96a0-48f968418777 (non-flash)

Again, we can see an active iSCSI session corresponding to our financev5 volume.

In this small tutorial, we learned how OpenStack provides persistent volumes to an instance through Cinder, its block storage service.
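To match sessions to volumes without reading the IQN by eye, the volume ID can be pulled out of the session list. This is a minimal sketch, assuming target names follow the iqn...:volume-<ID> pattern shown in the output above. The function name iscsi_volume_ids is hypothetical.

```shell
# Read "iscsiadm -m session" output on stdin and print the Cinder volume ID
# of every session whose target IQN ends in "volume-<uuid>".
# Lines that do not match the pattern are silently skipped.
iscsi_volume_ids() {
    sed -n 's/.*:volume-\([0-9a-f-]\{36\}\).*/\1/p'
}
```

On a compute node, `iscsiadm -m session | iscsi_volume_ids` would print one ID per attached volume, which can then be compared with the IDs from openstack volume list.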
