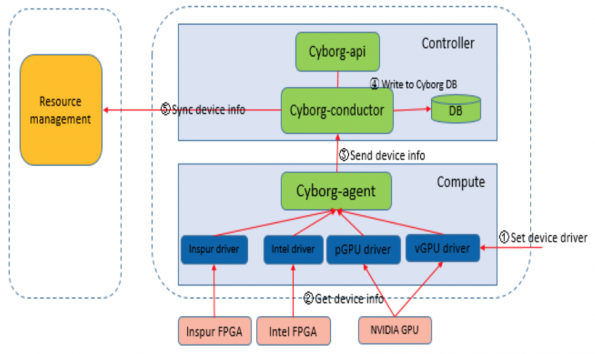

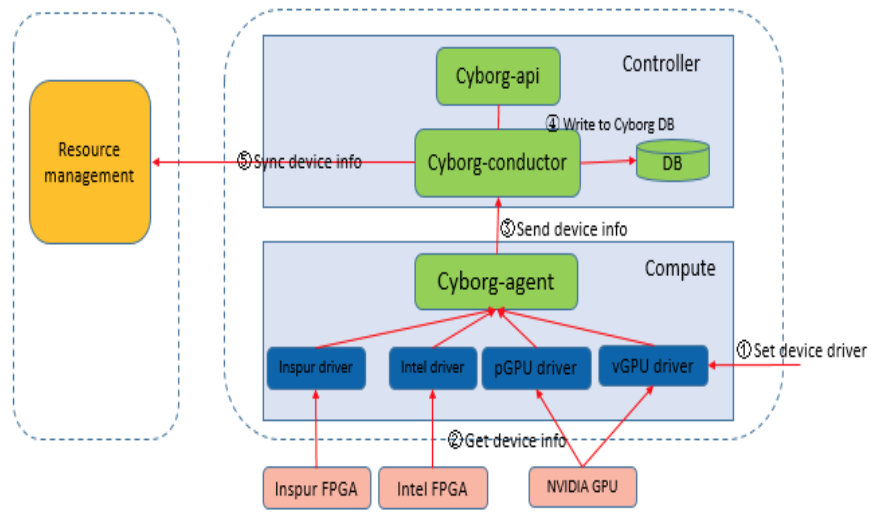

Cyborg is a management project for accelerator resources (GPU, vGPU, FPGA, NVMe SSD, QAT, DPDK, SmartNIC, etc.). It uses a microservice architecture to support distributed deployment and consists of the cyborg-api, cyborg-conductor, and cyborg-agent services. Cyborg-agent collects accelerator resource information and reports it to cyborg-conductor. Cyborg-conductor stores that information in the database and reports it to the Placement resource management service. Placement stores the resource information and provides the available resources to Nova for scheduling when a server is being created. The cyborg-api service provides interfaces for requesting accelerator resource information. Diagram 0 shows the architecture of Cyborg.
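The reporting path described above can be sketched as a small in-memory model. This is only an illustration under stated assumptions: the class names, method names, and host names are hypothetical stand-ins for the real services, and none of them are taken from the Cyborg or Placement code bases.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Accelerator:
    """One discovered device; all field names are illustrative."""
    host: str
    resource_class: str  # e.g. "FPGA" or "VGPU"
    total: int


@dataclass
class Placement:
    """Stand-in for Placement: inventories keyed by (host, resource class)."""
    inventories: dict = field(default_factory=dict)

    def update_inventory(self, host, resource_class, total):
        self.inventories[(host, resource_class)] = total

    def hosts_with(self, resource_class, amount):
        # What Nova scheduling would ask: which hosts can supply `amount` units?
        return sorted(
            host for (host, rc), total in self.inventories.items()
            if rc == resource_class and total >= amount
        )


@dataclass
class Conductor:
    """Stand-in for cyborg-conductor: persists reports, then forwards them."""
    placement: Placement
    database: list = field(default_factory=list)

    def report(self, accelerators):
        for acc in accelerators:
            self.database.append(acc)
            self.placement.update_inventory(acc.host, acc.resource_class, acc.total)


@dataclass
class Agent:
    """Stand-in for cyborg-agent running on one compute host."""
    host: str
    conductor: Conductor

    def collect_and_report(self, discovered):
        # `discovered` maps resource class -> count, as a driver might return it.
        self.conductor.report(
            [Accelerator(self.host, rc, total) for rc, total in discovered.items()]
        )


placement = Placement()
conductor = Conductor(placement)
Agent("compute-1", conductor).collect_and_report({"FPGA": 1, "VGPU": 4})
Agent("compute-2", conductor).collect_and_report({"VGPU": 2})

# Only compute-1 can satisfy a request for three vGPUs.
candidates = placement.hosts_with("VGPU", 3)
print(candidates)
```

The agent knows nothing about Placement, and Placement knows nothing about devices; the conductor is the only component that touches both the database and the inventory, which mirrors the division of roles in the paragraph above.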

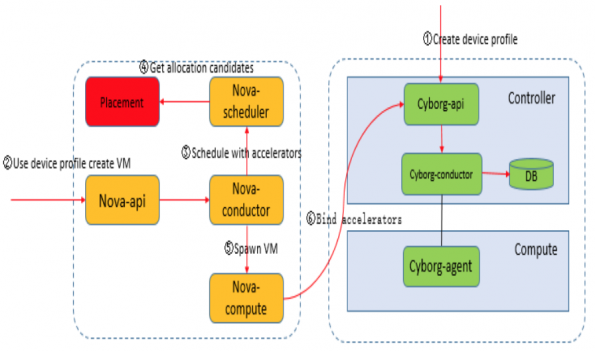

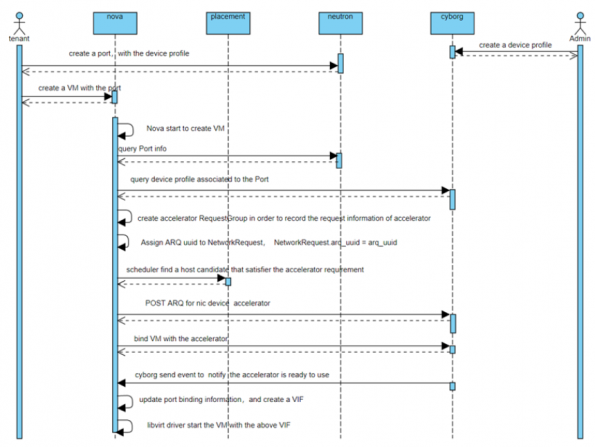

With Cyborg, we can boot a server with accelerators by interacting with Nova and Placement, and we support batch booting of servers with accelerator scheduling (an enhancement in Inspur InCloud OpenStack Enterprise edition that we plan to contribute to the Nova and Cyborg communities). Users can then use these devices inside the server, for example to program an FPGA or process images on a GPU. We can also bind accelerators to and unbind them from an existing server, for both hot-plug and non-hot-plug devices, which makes accelerators convenient to use. Diagram 1 shows the interaction flow between Nova and Cyborg when booting a server.
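As a rough illustration of how a boot request can name the accelerators it needs, the sketch below builds a device profile and the flavor extra spec that refers to it. The `resources:`/`trait:` group keys and the `accel:device_profile` extra spec follow our reading of the upstream Cyborg and Nova documentation rather than anything stated in this article, and the helper functions, the profile name, and the trait `CUSTOM_EXAMPLE_VGPU_TYPE` are hypothetical.

```python
import json


def build_device_profile(name, resource_class, count, required_traits=()):
    """Return a device profile body with a single request group."""
    group = {f"resources:{resource_class}": str(count)}
    for trait in required_traits:
        group[f"trait:{trait}"] = "required"
    return {"name": name, "groups": [group]}


def flavor_extra_specs(device_profile_name):
    """Extra spec that points a Nova flavor at a device profile (assumed key)."""
    return {"accel:device_profile": device_profile_name}


def placement_query(device_profile):
    """Flatten each group into the resources and required traits to schedule on."""
    queries = []
    for group in device_profile["groups"]:
        resources = {}
        traits = []
        for key, value in group.items():
            kind, _, name = key.partition(":")
            if kind == "resources":
                resources[name] = int(value)
            elif kind == "trait" and value == "required":
                traits.append(name)
        queries.append({"resources": resources, "required": sorted(traits)})
    return queries


# Hypothetical profile asking for one vGPU of an example type.
profile = build_device_profile("vgpu-dp", "VGPU", 1, ["CUSTOM_EXAMPLE_VGPU_TYPE"])
extra_specs = flavor_extra_specs(profile["name"])
queries = placement_query(profile)
print(json.dumps(profile, indent=2))
```

Keeping the accelerator request in a named profile means the flavor only carries a reference, so the same profile can be reused by several flavors.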

To enhance server operations with accelerators, we already support most server operations: creation and deletion, reboot (soft and hard), pause and unpause, stop and start, snapshot, backup, rescue and unrescue, rebuild, and evacuate. Other operations, such as shelve and unshelve and suspend and resume, are in progress and close to merging. We plan to support migration (live and cold) and resize soon. With these enhancements, operators can use accelerators more flexibly. Diagram 2 shows the sequence flow for booting a server with vGPU devices.

In Inspur InCloud OpenStack Enterprise edition, we have also enhanced some features, such as the batch booting of servers and the hot-plug-based binding and unbinding of accelerator devices mentioned above. Cyborg was used to manage virtual GPUs, and the utilization rate improved by 80%. A data synchronization strategy made data transfer between Cyborg and Placement 30% more efficient.

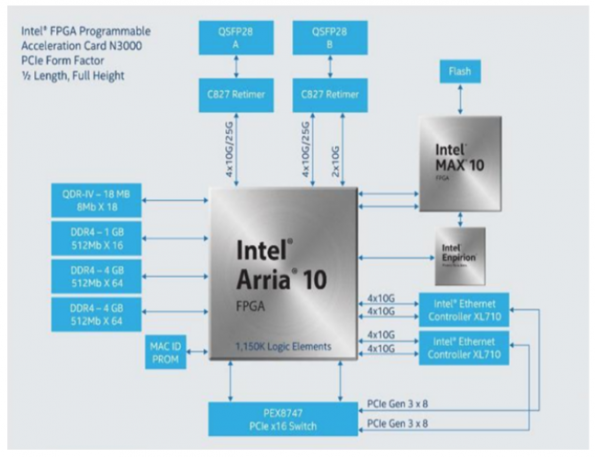

As diagram 3 shows, the main components of the N3000 are the Intel Arria® 10 FPGA, a dual Intel Ethernet Converged Network Adapter XL710, the Intel MAX® 10 FPGA Baseboard Management Controller (BMC), 9 GB of DDR4, and 144 Mb of QDR-IV. It supports a high-speed network with 10 Gbps/25 Gbps interfaces and a high-speed host interface over PCIe* Gen 3 x16.

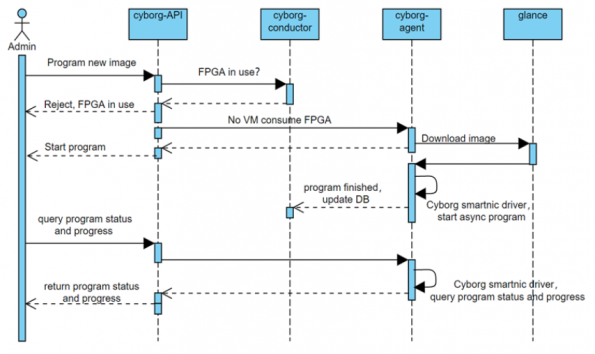

The Intel® FPGA Programmable Acceleration Card N3000 (Intel FPGA PAC N3000) is a highly customized platform for high-throughput, low-latency, and high-bandwidth applications. It allows data plane performance to be optimized to achieve lower costs while maintaining a high degree of flexibility. End-to-end industry-standard and open-source tool support allows users to adapt quickly to evolving workloads and industry standards. Intel is accelerating 5G and network functions virtualization (NFV) adoption for ecosystem partners, such as telecommunications equipment manufacturers (TEMs), virtual network functions (VNF) vendors, system integrators, and telcos, to bring scalable and high-performance solutions to market. Diagram 4 shows the sequence flow for programming in Cyborg.

A SmartNIC, such as the N3000, Mellanox CX5, or BF2, can be programmed with an OVS image to serve as an NFVI function in OpenStack. Diagram 5 shows the sequence for enabling accelerators for SmartNIC.

In Cyborg, we support several new features:

- Asynchronous program API.

- Program process API.

- Dynamic resource reporting.

Users can start a new program process request for the N3000. The API is asynchronous, and it safely detects whether the request conflicts with current resource usage before deciding to accept or reject it. We also provide a friendly program process query API, which users can call at any time to check the stage of the process and the percentage completed. When programming finishes and the resources have been typed and quantified, Cyborg discovers and reports the change dynamically.
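The behavior described above (accept or reject on conflict, run in the background, answer progress queries) can be sketched with a small in-memory model. This is not the Cyborg implementation: `ProgramService`, its methods, the stage names, and the device and image names are all hypothetical, and the timed loop merely stands in for flashing an image.

```python
import threading
import time
import uuid


class ProgramService:
    """Hypothetical in-memory model of an asynchronous program API."""

    def __init__(self):
        self._lock = threading.Lock()
        self._busy_devices = set()
        self._jobs = {}

    def start(self, device_id, image, steps=4, step_seconds=0.01):
        """Accept the request and return a job id, or return None on conflict."""
        with self._lock:
            if device_id in self._busy_devices:
                return None  # another program process already owns this device
            self._busy_devices.add(device_id)
            job_id = str(uuid.uuid4())
            self._jobs[job_id] = {"device": device_id, "image": image,
                                  "stage": "accepted", "percent": 0}
        worker = threading.Thread(
            target=self._run, args=(job_id, steps, step_seconds), daemon=True)
        worker.start()
        return job_id  # returned immediately; the work continues in the background

    def _run(self, job_id, steps, step_seconds):
        for done in range(1, steps + 1):
            time.sleep(step_seconds)  # stands in for writing one chunk of the image
            with self._lock:
                job = self._jobs[job_id]
                job["stage"] = "programming"
                job["percent"] = 100 * done // steps
        with self._lock:
            job = self._jobs[job_id]
            job["stage"] = "finished"
            self._busy_devices.discard(job["device"])

    def progress(self, job_id):
        """Return a snapshot of the stage and the completed percentage."""
        with self._lock:
            job = self._jobs[job_id]
            return {"stage": job["stage"], "percent": job["percent"]}


service = ProgramService()
first = service.start("n3000-0", "ovs-image")
second = service.start("n3000-0", "other-image")  # rejected: device still busy

while service.progress(first)["stage"] != "finished":
    time.sleep(0.005)

final = service.progress(first)
third = service.start("n3000-0", "other-image")  # accepted: device is free again
print(second, final, third is not None)
```

The conflict check and the reservation happen under the same lock, so two concurrent requests for one device cannot both be accepted. A real service would also need to persist job state and trigger the resource re-discovery step once a job finishes.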

Across OpenStack as a whole, we have also made some improvements.

- Cyborg supports new drivers for new SR-IOV NICs.

- Neutron supports a new option to create a port with an accelerator device profile.

- Nova has been improved to retrieve the new VF NIC and pass it through to the server as an interface.

With these improvements, OpenStack can support SmartNICs more flexibly and conveniently. The improvements cover the SmartNIC cards mentioned above as well as other SR-IOV cards.
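To make the Neutron option concrete, here is a minimal sketch of what a port creation request carrying a device profile might look like. The field names `device_profile` and `binding:vnic_type`, and the value `accelerator-direct`, are assumptions modeled on the port device profile support found in upstream Neutron, so check them against the release you run. The helper function, the network ID placeholder, and the profile name are hypothetical.

```python
import json


def port_request_body(network_id, device_profile, name=None):
    """Build a port creation body that asks for an accelerator-backed VF."""
    port = {
        "network_id": network_id,
        # Assumed attribute names; verify against your Neutron release.
        "binding:vnic_type": "accelerator-direct",
        "device_profile": device_profile,
    }
    if name:
        port["name"] = name
    return {"port": port}


# Placeholder network ID and hypothetical device profile name.
body = port_request_body("NETWORK_ID_PLACEHOLDER", "smartnic-dp", name="smartnic-port")
print(json.dumps(body, indent=2, sort_keys=True))
```

A port created this way would then be passed to Nova at boot time, at which point Nova retrieves the VF from Cyborg and attaches it to the server as an interface, as the list above describes.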

All of the new features and improvements to OpenStack will be contributed upstream.

On November 25, Inspur InCloud OpenStack (ICOS) completed the 1000-node test “The world’s largest single-cluster practice of Cloud, Bigdata and AI”, a practice for the convergence of cloud, big data, and artificial intelligence. It is the largest SPEC Cloud test and the first large-scale multi-dimensional fusion test in the industry. It achieved a comprehensive breakthrough in scale, scenario, and performance, completing the upgrade from 500 nodes to 1000 nodes and turning quantitative change into qualitative change. Inspur is working on a white paper for this large-scale test, which will be released soon and is intended as a reference for products in large-scale environments.

Get Involved

This article is a summary of the Open Infrastructure Summit session, Enhancement of new heterogeneous accelerators based on Cyborg.

Watch more Summit session videos like this on the Open Infrastructure Foundation YouTube channel. Don’t forget to join the global Open Infrastructure community, and share your own personal open source stories using the hashtag, #WeAreOpenInfra, on Twitter and Facebook.

Thanks to the 2020 Open Infrastructure Summit sponsors for making the event possible:

Headline: Canonical (ubuntu), Huawei, VEXXHOST

Premier: Cisco, Tencent Cloud

Exhibitor: InMotion Hosting, Mirantis, Red Hat, Trilio, VanillaStack, ZTE

- Enhancement of New Heterogeneous Accelerators Based on Cyborg - January 13, 2021

