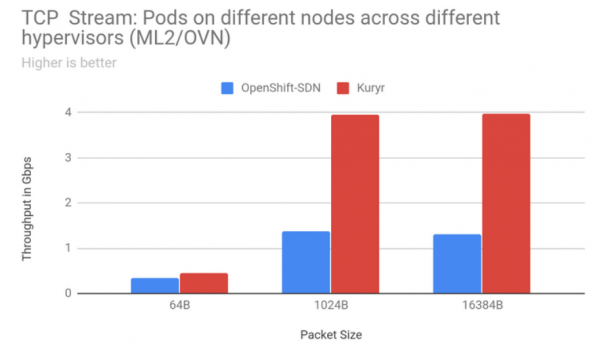

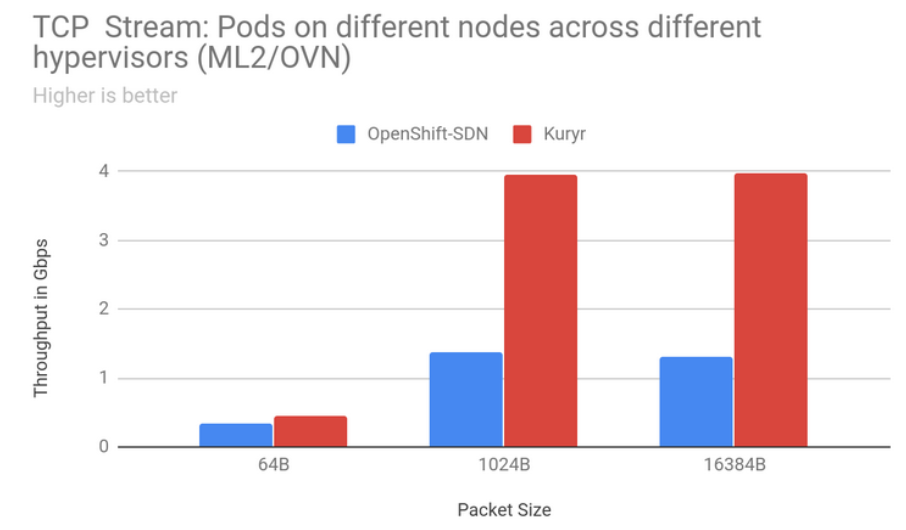

Kuryr is an OpenStack project that relies on Neutron and Octavia to provide connectivity for Kubernetes Pods and Services. This improves network performance, especially when Kubernetes clusters run on top of OpenStack virtual machines. Because only the Neutron overlay is used, Kuryr avoids double encapsulation of network packets, which simplifies the network stack and makes debugging easier. The following diagram shows the gain from using Kuryr compared to OpenShift SDN for TCP stream traffic between Pods on nodes hosted on different hypervisors.

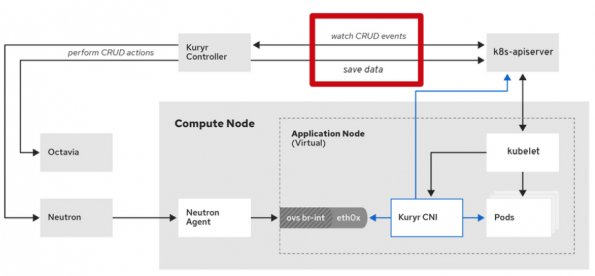

Kuryr-Kubernetes has two components that are deployed as Kubernetes Pods: the controller and the CNI. Unlike other OpenStack projects, which react to events resulting from user interaction with the OpenStack APIs, the controller and the CNI act on events that happen at the Kubernetes API level. The Kuryr controller is responsible for mapping those Kubernetes API calls into Neutron or Octavia objects and saving information about the resulting OpenStack resources, while the CNI provides the proper network interface binding for the Pods. Figure 02 shows the interactions among the Kuryr components, Neutron, Octavia and the Kubernetes components.

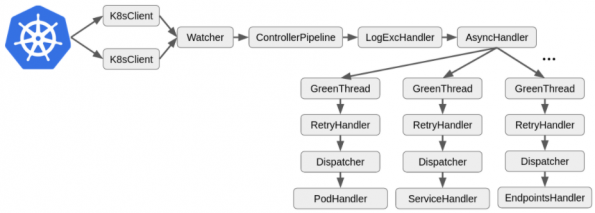

The controller pattern in Kubernetes works like a control loop that aims to make the system state match the system specification, which is declared through the Kubernetes API. To achieve this, the controller needs to react to Kubernetes events, change the system state by interacting with external systems, and save data when necessary. There are Go libraries that facilitate implementing the controller pattern, but Kuryr needed its own implementation because it is mainly written in Python.
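The control loop described above can be illustrated with a minimal sketch. It is not Kuryr's implementation: `FakeCloud` and `reconcile_ports` are hypothetical names, and the in-memory dictionary merely stands in for an external system such as Neutron.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCloud:
    """Hypothetical stand-in for an external system (not a real Neutron client)."""
    ports: dict = field(default_factory=dict)

    def create_port(self, name):
        self.ports[name] = {"name": name}

    def delete_port(self, name):
        self.ports.pop(name, None)


def reconcile_ports(desired_pods, cloud):
    """Make the external state match the desired state and return the actions taken."""
    existing = set(cloud.ports)
    actions = []
    # Create what the specification asks for but the external system lacks.
    for name in sorted(set(desired_pods) - existing):
        cloud.create_port(name)
        actions.append(("create", name))
    # Remove what exists externally but is no longer specified.
    for name in sorted(existing - set(desired_pods)):
        cloud.delete_port(name)
        actions.append(("delete", name))
    return actions
```

Calling `reconcile_ports` repeatedly with the same desired set is a no-op after the first pass, which is the property that lets a controller safely re-run after a missed or duplicated event.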

The Kuryr controller can be considered a collection of controllers, each handling a Kubernetes resource that is represented by an OpenStack resource: Pods are mapped to Neutron ports, Services to Octavia load balancers and Network Policies to Neutron security groups. Previously, references to the OpenStack resources were saved directly on the corresponding native Kubernetes resources as string key-value pairs, to ensure that the proper OpenStack resource was updated or cleaned up when needed. However, that approach made it hard to troubleshoot problems and to synchronize the current state with the specification.
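One way to picture a "collection of controllers" is a registry that routes each event to the handler for its resource kind. The sketch below assumes events shaped like Kubernetes watch events (a `type` plus the `object`); the `handles` decorator, the handler names and the returned tuples are hypothetical and do not reflect Kuryr's actual code.

```python
HANDLERS = {}  # maps a Kubernetes kind to the function that handles it


def handles(kind):
    """Register a handler function for one Kubernetes resource kind."""
    def register(func):
        HANDLERS[kind] = func
        return func
    return register


@handles("Pod")
def on_pod(obj):
    # A real handler would call Neutron here; this only names the target resource.
    return ("neutron_port", obj["metadata"]["name"])


@handles("Service")
def on_service(obj):
    return ("octavia_load_balancer", obj["metadata"]["name"])


@handles("NetworkPolicy")
def on_network_policy(obj):
    return ("neutron_security_group", obj["metadata"]["name"])


def dispatch(event):
    """Route an event to the handler for its kind; ignore kinds nobody handles."""
    obj = event["object"]
    handler = HANDLERS.get(obj["kind"])
    return handler(obj) if handler else None
```

Keeping one small handler per kind means each mapping can be read, tested and debugged in isolation.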

Users can now rely on Kubernetes API extensions, in the form of custom resource definitions (CRDs), to introspect the state of the system. Kubernetes resources are visibly mapped to OpenStack resources, and the custom resources are handled like any other native Kubernetes object. Custom resources not only allow small, specialized controllers, but also follow the Kubernetes API conventions and patterns, which improves readability and debugging. Each custom resource contains specification and status fields, which facilitate synchronization, and finalizers, which prevent the Kubernetes resource from being hard-deleted while the deletion of the OpenStack resource is still being handled. Previously, if the Kubernetes resource was deleted before the corresponding OpenStack resource had been removed, a conflict could occur when a new object was created. Examples of Kuryr CRDs and more details can be found in the Kuryr documentation.
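The interplay of specification, status and finalizers can be sketched as follows. This is an illustration under stated assumptions, not a Kuryr CRD: the finalizer name, the `podName` and `portId` fields and the `reconcile_resource` function are all hypothetical, and a plain Python set stands in for OpenStack. The only Kubernetes behavior relied on is that an object marked with a `deletionTimestamp` is kept until its `finalizers` list is empty.

```python
FINALIZER = "example.org/openstack-cleanup"  # hypothetical finalizer name


def reconcile_resource(resource, cloud):
    """Sync one custom resource (a dict) with `cloud`, a set of existing port IDs."""
    meta = resource["metadata"]
    finalizers = meta.setdefault("finalizers", [])
    status = resource.setdefault("status", {})

    if meta.get("deletionTimestamp"):
        # Deletion was requested: clean up the external resource first,
        # then drop the finalizer so Kubernetes can remove the object.
        port_id = status.pop("portId", None)
        if port_id:
            cloud.discard(port_id)
        if FINALIZER in finalizers:
            finalizers.remove(FINALIZER)
        return "released"

    # Guard the object against hard deletion before creating anything external.
    if FINALIZER not in finalizers:
        finalizers.append(FINALIZER)

    if "portId" not in status:
        port_id = "port-" + resource["spec"]["podName"]
        cloud.add(port_id)
        status["portId"] = port_id  # record the observed state in status
        return "created"
    return "in-sync"
```

Because the finalizer is only removed after the external cleanup, a new object with the same name cannot collide with a leftover OpenStack resource in this sketch.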

All of these improvements have been available upstream in Kuryr-Kubernetes since the Victoria release.

Get Involved

This article is a summary of the Open Infrastructure Summit session, K8s controller and CRD patterns in Python – kuryr-kubernetes case study.

Watch more Summit session videos like this on the Open Infrastructure Foundation YouTube channel. Don’t forget to join the global Open Infrastructure community, and share your own personal open source stories using the hashtag, #WeAreOpenInfra, on Twitter and Facebook.

- Enhancement of Kubernetes Controller Pattern in Python with CRD - February 10, 2021
