Leading tech giants and multiple enterprises are investing heavily in edge computing solutions.

Edge computing enables businesses to act on data faster and stay ahead of the competition. Innovative applications that expect fast responses need near real-time access to data, which means processing data at nearby edge nodes and generating insights that feed both the cloud and the originating devices. Edge solution vendors are building solutions to reduce the impact of latency on a number of use cases.

The goal of an edge-enabled network should be to maintain end-to-end quality of service and continuity of user experience wherever edge nodes are active. For example, since edge computing is expected to be mainstream in 5G telecom networks, a 5G subscriber should not lose active services while moving across edge premises. New services also need to be pushed in real time, irrespective of the edge zone in the network. Subscribers will demand not just new services on a consistent basis, but also faster delivery so that real-time applications perform as intended. As IoT evolves in the technology market, consumers will place even greater low-latency demands on network operators and solution providers.

Along these same lines, the Red Hat team has devised an integrated solution to reduce latency and maintain user experience continuity within a 5G network enabled with edge nodes. Let’s take a closer look at it.

Co-locating Ceph and OpenStack for hyperconvergence

5G networks are characterized by a distributed cloud infrastructure in which services are delivered at every part of the network, from the central data center or cloud to regional and edge sites. However, connecting distributed edge nodes to a central cloud comes with constraints in 5G networks:

- Service providers fundamentally need life cycle management of network services on every node in the network, centralized control over those functions, and end-to-end orchestration from a central location.

- A 5G network should provide lower latency and higher bandwidth, along with resiliency (failure and recovery at a single node) and scalability (of services as demand increases) at the edge level.

- Service providers will need to provide faster and more reliable services to consumers with minimum hardware resources, especially at regional nodes and edge nodes.

- A huge amount of data processing and analysis will take place at edge nodes. This requires storage systems that can store every type of data in all available forms and provide faster access to that data.

To address these needs, Red Hat’s Sean Cohen, Giulio Fidente and Sébastien Han proposed a solution architecture at the OpenStack Summit in Berlin. The architecture combines OpenStack’s core and storage-related projects with Ceph in a hyperconverged way. The resulting architecture supports distributed NFV (the backbone technology for 5G) and emerging use cases with fewer control planes, and distributes VNFs (virtual network functions, or network services) across all regional and edge nodes in the network.

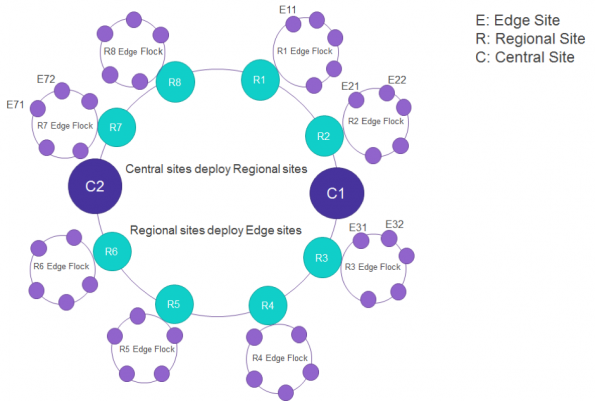

The solution employs the Akraino Edge Stack (a Linux Foundation project), with a typical edge architecture consisting of a central site (data center or cloud), regional sites and far edge sites.

The central cloud is the backbone for all operations and management of the network, and it is where all processed data can be stored. Regional sites, or edge nodes, can be mobile towers, nodes dedicated to specific premises or any other telco-centric premises. Far edge nodes are the endpoints of the network: digital equipment such as mobile phones, drones, smart devices, autonomous vehicles and industrial IoT equipment. Shared storage is available in the edge zone so that persistent data survives a node failure.
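To make the three tiers concrete, here is a minimal Python sketch that models them as a small tree. The class name `Site`, the tier labels and the site names are all hypothetical and chosen only for illustration; they are not part of Akraino, OpenStack or Ceph.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Site:
    """Hypothetical model of one site in the central/regional/far-edge hierarchy."""
    name: str
    tier: str  # assumed labels: "central", "regional" or "far-edge"
    # parent is excluded from repr/compare to avoid recursion through children
    parent: Optional["Site"] = field(default=None, repr=False, compare=False)
    children: List["Site"] = field(default_factory=list)

    def add_child(self, child: "Site") -> "Site":
        """Attach a lower-tier site beneath this one and return it."""
        child.parent = self
        self.children.append(child)
        return child

    def path_to_central(self) -> List[str]:
        """Walk upward from this site to the root of the tree."""
        node: Optional["Site"] = self
        path: List[str] = []
        while node is not None:
            path.append(node.name)
            node = node.parent
        return path


# Illustrative topology with made-up site names.
central = Site("central-dc", "central")
regional = central.add_child(Site("regional-1", "regional"))
far_edge = regional.add_child(Site("far-edge-1a", "far-edge"))

print(far_edge.path_to_central())
```

Walking from a far edge node up to the root shows the path that management traffic and processed data would follow toward the central cloud in this simplified picture.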

Sample deployment

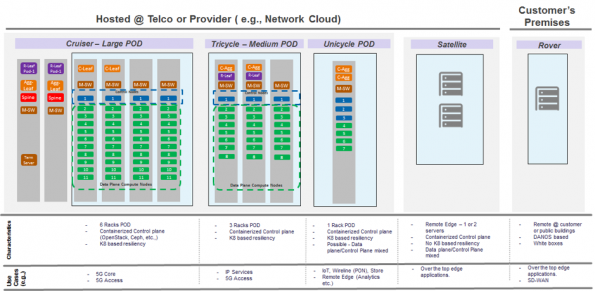

In this proposed solution, the Red Hat team refers to the edge point of delivery (POD) architecture for telco service providers to explain where Ceph clusters can be placed alongside OpenStack projects in a hyperconverged way.

Based on the above diagram, let’s take a further look at the deployment and operations scenarios.

OpenStack

As shown in figure 2 above, OpenStack already supports the Cruiser and Tricycle PODs. For edge deployments, however, different OpenStack projects can be used for various operations.

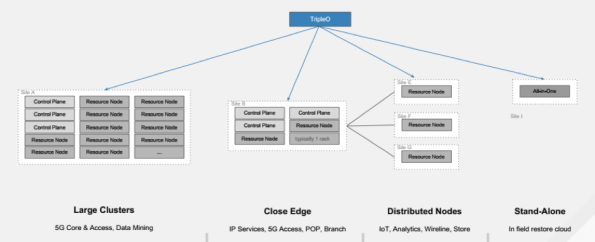

TripleO: The proposed TripleO architecture aims to reduce the number of control planes between the central cloud and the far edge nodes by using an OpenStack TripleO controller node at the middle layer. The proposal is to make TripleO capable of deploying non-controller nodes at the edge. With TripleO, OpenStack can have central control over all the edge nodes participating in the network.

Glance API: It is mainly responsible for workload delivery, in the form of VM images, from the central data center to far edge nodes in the edge network. Glance is set up at the central data center and deployed on the middle edge node where the controller resides. A Glance API with a cache can be pushed to the far edge site, which is hyperconverged. This way, images can be pulled to far edge nodes from the central data center.
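The caching idea can be illustrated with a generic pull-through cache. The following Python sketch is not the Glance API or its cache implementation; `CentralImageStore` and `EdgeImageCache` are hypothetical stand-ins that only show why a cache at the far edge avoids repeated transfers from the central data center.

```python
from typing import Dict


class CentralImageStore:
    """Hypothetical stand-in for the image service at the central data center."""

    def __init__(self, images: Dict[str, bytes]) -> None:
        self._images = images
        self.fetch_count = 0  # counts transfers over the (slow) central link

    def fetch(self, image_id: str) -> bytes:
        self.fetch_count += 1
        return self._images[image_id]


class EdgeImageCache:
    """Hypothetical pull-through cache placed at a far edge site."""

    def __init__(self, upstream: CentralImageStore) -> None:
        self._upstream = upstream
        self._cache: Dict[str, bytes] = {}

    def get(self, image_id: str) -> bytes:
        # Only go to the central site on a cache miss.
        if image_id not in self._cache:
            self._cache[image_id] = self._upstream.fetch(image_id)
        return self._cache[image_id]


# Made-up image ID and payload, for illustration only.
central_store = CentralImageStore({"vnf-image": b"fake-image-bytes"})
edge_cache = EdgeImageCache(central_store)

first = edge_cache.get("vnf-image")   # miss: pulled from the central store
second = edge_cache.get("vnf-image")  # hit: served locally at the edge
print(central_store.fetch_count)
```

In this sketch the second request is served entirely at the edge, so the central link is used once per image rather than once per boot.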

Ceph

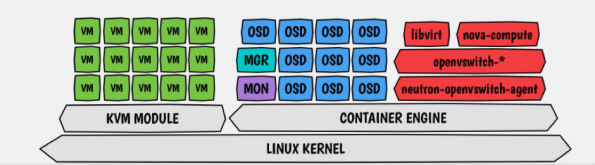

Ceph provides different interfaces to access your data as object, block or file storage. In this architecture, Ceph can be deployed in containers as well as on a hypervisor. Containerizing Ceph clusters brings additional benefits for dynamic workloads, such as better isolation, faster access to applications and better control over resource utilization.

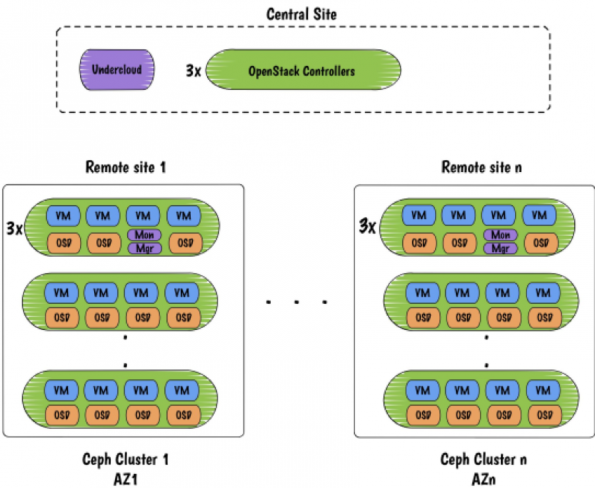

Hyperconverged deployment of Ceph should be done at the Unicycle and Satellite PODs (refer to figure 2), that is, at the edge nodes right after the central cloud. The resulting architecture, which depicts the co-location of containerized Ceph clusters at a regional site, is shown below.

Ceph is deployed with two components, Monitor and Manager, which bring monitoring benefits such as gathering cluster information and managing and storing cluster maps.

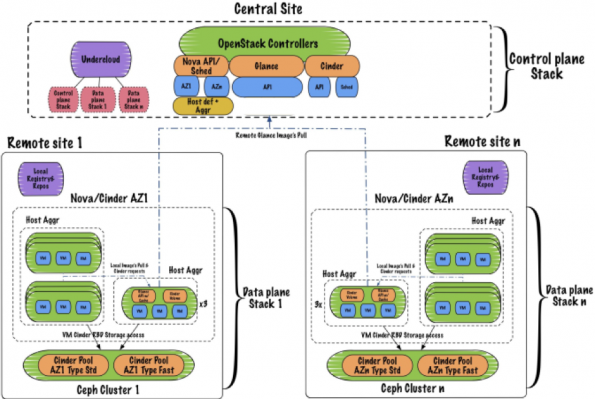

The graphic shows how the control plane is detached from the descendant nodes and placed at a central site.

This brings a number of benefits, including:

- Reduction of hardware resources and cost at the edge, since edge nodes are hyperconverged and no control plane is required to manage each node

- Better utilization of compute and storage resources

- Reduction of deployment complexity

- Reduction in operational maintenance as the control plane will be similar across all edge nodes and a unified life cycle will be followed for scaling, upgrades, etc.
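The hardware saving in the first point can be estimated with simple arithmetic. The sketch below is a rough count under assumed numbers: the function name `control_plane_nodes`, the figure of 50 edge sites and the figure of 3 controller nodes per control plane are illustrative assumptions, not values from the proposed architecture.

```python
def control_plane_nodes(edge_sites: int, controllers_per_plane: int, centralized: bool) -> int:
    """Count controller nodes for a hypothetical deployment.

    centralized=True  -> a single control plane at the central site
    centralized=False -> one control plane per edge site
    """
    if edge_sites < 0 or controllers_per_plane < 0:
        raise ValueError("counts must be non-negative")
    planes = 1 if centralized else edge_sites
    return planes * controllers_per_plane


# Assumed numbers for illustration only.
per_site = control_plane_nodes(edge_sites=50, controllers_per_plane=3, centralized=False)
central_only = control_plane_nodes(edge_sites=50, controllers_per_plane=3, centralized=True)

print(per_site, central_only)
```

Under these assumptions, the per-site model grows linearly with the number of edge sites, while the centralized model stays constant, which is where the hardware and cost reduction at the edge comes from.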

Final architecture (OpenStack + Ceph Clusters)

Here is the overall architecture from the central site to the far edge nodes, showing the distribution of OpenStack services integrated with Ceph clusters. The representation shows how projects are distributed: control plane projects are stacked at the central nodes and data stacks at the far edge nodes.

There are a few considerations and items of future work for the upcoming OpenStack release, Stein. These include sustaining services when edge nodes are disconnected, far edge sites with no storage requirement, HCI with Ceph monitors using container resource allocations, and the ability to deploy multiple Ceph clusters with TripleO.

Conclusion

Hyperconvergence of hardware resources is expected to be a fundamental architecture for multiple mini data centers, i.e. edge nodes. The Red Hat team came up with an innovative hyperconvergence of OpenStack projects with Ceph software-defined storage. As this solution shows, it is possible to gain better control of all edge nodes by reducing control planes while still maintaining the continuity and sustainability of the 5G network, along with the performance required by the latest applications.

This article was first published on TheNewStack.

About the author

Sagar Nangare is a technology blogger focusing on data center technologies (networking, telecom, cloud, storage) and emerging domains such as Open RAN, edge computing, IoT, machine learning and AI. Based in Pune, he currently serves Coredge.io as Director – Product Marketing.
