It’s only been a few weeks since I started my Outreachy internship with OpenStack, and I already feel like I have learned a tremendous amount. This post is aimed at sharing some of that knowledge. Before we begin, here’s a short introduction to the organization that I am working with, OpenStack.

OpenStack is a set of open source, scalable software tools for building and managing cloud computing platforms for public and private clouds. OpenStack is managed by the OpenStack Foundation, a non-profit that oversees both development and community-building around the project.

There are many teams working on different projects under the banner of OpenStack, one of which is Ironic. OpenStack Ironic is a set of projects that perform bare metal (no underlying hypervisor) provisioning for use cases where a virtualized environment might be inappropriate and a user would prefer to have an actual, physical, bare-metal server. Thus, bare metal provisioning means a customer can use hardware directly, deploying the workload (image) onto a real physical machine instead of a virtualized instance on a hypervisor.

The title of the project that I am working on is: “Extend sushy to support RAID”.

Reading the topic, the first word that jumps out is RAID, an acronym for Redundant Array of Independent Disks. It’s a technique for spreading or duplicating data across multiple disks, instead of keeping it on a single disk, for increased I/O performance, data storage reliability or both. There are different RAID levels that mix and match striping, parity and mirroring, each optimized for a specific situation.
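To make striping, mirroring and parity a little more concrete, here is a toy sketch in Python. It is only an illustration under simplifying assumptions: the "disks" are plain byte strings, striping works byte by byte (real arrays stripe in much larger chunks), and the function names are made up for this example rather than taken from any RAID tool.

```python
from functools import reduce
from operator import xor


def stripe(data: bytes, n_disks: int) -> list[bytes]:
    """Striping (the RAID 0 idea): deal the bytes round-robin across disks."""
    return [data[i::n_disks] for i in range(n_disks)]


def mirror(data: bytes, n_disks: int) -> list[bytes]:
    """Mirroring (the RAID 1 idea): every disk holds a full copy."""
    return [data for _ in range(n_disks)]


def xor_blocks(*blocks: bytes) -> bytes:
    """Parity (the RAID 5 idea): XOR equal-length blocks byte by byte."""
    return bytes(reduce(xor, column) for column in zip(*blocks))


# Two data blocks plus one parity block.
disk0, disk1 = b"bare", b"iron"
parity = xor_blocks(disk0, disk1)

# If disk1 is lost, XOR-ing the survivors rebuilds its contents.
rebuilt = xor_blocks(disk0, parity)
assert rebuilt == disk1
```

Striping buys speed because the disks can be read in parallel, mirroring buys safety at the cost of capacity, and parity sits in between by spending one block of extra space to survive the loss of any single block.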

The main idea behind Ironic is to let its users (mostly system admins) remotely access the hardware running their servers. This gives them the ability to manage and control the servers 24x7, which is crucial when a server fails at odd hours. Here’s where the Baseboard Management Controller (BMC) comes to the rescue. It’s an independent satellite computer, typically a system-on-chip microprocessor or a standalone computer, that’s used to perform system monitoring and management tasks.

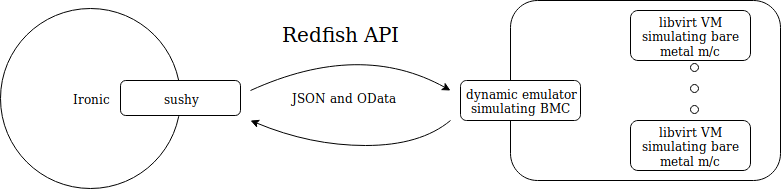

At the heart of a BMC lies Redfish, a protocol used by the BMC (on bare metal machines) to communicate remotely via JSON and OData. It’s a standard RESTful API published by the DMTF to get and set hardware configuration items on physical platforms. Sushy is a client-side Python library used by Ironic to communicate with Redfish-based systems.
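Because Redfish is just JSON over HTTP, the kind of traversal a client library performs can be sketched with the Python standard library alone. The snippet below is not sushy’s code; it is a rough sketch that assumes an unauthenticated Redfish service (real BMCs normally require credentials and HTTPS). The function names, the system IDs and the `FAKE_BMC` data are invented for illustration; the `Systems`, `Members`, `@odata.id`, `Id` and `PowerState` fields come from the Redfish schema.

```python
import json
from typing import Callable
from urllib.request import urlopen


def http_fetch(base_url: str) -> Callable[[str], dict]:
    """Build a fetcher that GETs a Redfish path and decodes the JSON body."""
    def fetch(path: str) -> dict:
        with urlopen(base_url + path) as response:
            return json.load(response)
    return fetch


def list_power_states(fetch: Callable[[str], dict]) -> dict[str, str]:
    """Walk service root -> Systems collection -> each system resource."""
    root = fetch("/redfish/v1/")
    collection = fetch(root["Systems"]["@odata.id"])
    states = {}
    for member in collection["Members"]:
        system = fetch(member["@odata.id"])
        states[system["Id"]] = system.get("PowerState", "Unknown")
    return states


# Canned responses standing in for a BMC, so the walk can run offline.
FAKE_BMC = {
    "/redfish/v1/": {"Systems": {"@odata.id": "/redfish/v1/Systems"}},
    "/redfish/v1/Systems": {
        "Members": [
            {"@odata.id": "/redfish/v1/Systems/vm-1"},
            {"@odata.id": "/redfish/v1/Systems/vm-2"},
        ]
    },
    "/redfish/v1/Systems/vm-1": {"Id": "vm-1", "PowerState": "On"},
    "/redfish/v1/Systems/vm-2": {"Id": "vm-2", "PowerState": "Off"},
}

print(list_power_states(FAKE_BMC.__getitem__))
```

Against a live service, the same function could be called as `list_power_states(http_fetch("http://localhost:8000"))`, where the host and port are assumptions about where the service is listening.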

To test and support the development of the sushy library, a set of simple simulation tools called sushy-tools is used, since it’s not always possible to have an actual bare metal machine with Redfish at hand.

The package offers two emulators: a static emulator and a dynamic emulator. The static emulator simulates a read-only BMC; it is a simple REST API server that always returns the same canned responses to client queries. The dynamic emulator simulates the BMC of a Redfish bare metal machine and is used to manage libvirt VMs (mimicking actual bare metal machines), resembling how a real Redfish BMC manages actual bare metal machine instances. Like its counterpart, the dynamic emulator also exposes REST API endpoints that can be consumed by a client using the sushy library.
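The idea behind a static emulator can be sketched in a few lines of Python. This is not how sushy-tools is implemented; it is a minimal illustration, assuming a hand-written table of canned resources and a port number picked arbitrarily. All names here (`CANNED`, `lookup`, `StaticHandler`, `serve`) are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Fixed, read-only resources keyed by path (illustrative data only).
CANNED = {
    "/redfish/v1": {"Systems": {"@odata.id": "/redfish/v1/Systems"}},
    "/redfish/v1/Systems": {
        "Members": [{"@odata.id": "/redfish/v1/Systems/1"}],
        "Members@odata.count": 1,
    },
    "/redfish/v1/Systems/1": {"Id": "1", "PowerState": "On"},
}


def lookup(path: str) -> tuple[int, dict]:
    """Return (HTTP status, JSON body) for a path, ignoring a trailing slash."""
    resource = CANNED.get(path.rstrip("/"))
    if resource is None:
        return 404, {"error": "resource not found"}
    return 200, resource


class StaticHandler(BaseHTTPRequestHandler):
    """Only GET is handled, so nothing a client sends can change state."""

    def do_GET(self):
        status, resource = lookup(self.path)
        payload = json.dumps(resource).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Serve the canned resources on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), StaticHandler).serve_forever()
```

Calling `serve()` starts the server. A dynamic emulator differs in that each request is translated into an action on a backend, such as a libvirt VM, instead of a dictionary lookup.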

The aim of my project is to add functionality to the existing sushy library so that clients can remotely configure RAID-based volumes for storage on their bare metal instances. There are two aspects to this project: one is the addition of code in the sushy library, and the other is adding support for emulating the storage subsystem in sushy-tools so that we’re actually able to test the added functionality in sushy against something. Since there is already one contributor working on adding the RAID implementation to sushy, my task in the project involves adding RAID support for emulation to the sushy-tools dynamic emulator (more specifically, the libvirt driver).

Early challenges

The first task that the mentors asked me to do was learn how to retrieve data from the libvirt VMs using sushy via the sushy-tools dynamic emulator. Honestly, the task did feel a bit intimidating in the beginning. I had to go back to the networking basics and brush up on them. After that, I spent quite some time reading blogs and following videos on libvirt VMs. I encountered some problems while setting up and creating the VMs using the virt-install command and ultimately had to fall back to the virt-manager GUI to spin up the VMs.

I also wasn’t able to set up the local development environment for sushy-tools because the README had no instructions for it. I have to admit that I thought ‘maybe the instructions aren’t mentioned because they are too obvious’ and felt a bit embarrassed about them not being obvious to me. Then I reminded myself that I’m here to learn, so I went ahead and asked my mentor, who very warmly helped me out with the necessary commands to set up the repository. After successfully setting up the environment, I even submitted a patch adding the corresponding instructions to the docs to make it a bit easier for newcomers like me to start contributing to the project.

While working on the first task, I came across some anomalies in the behavior of the dynamic emulator, which led me to dig into the code to find out what was happening. I ultimately found out that it was actually a bug and submitted a patch for it.

Right now, I’m working on exposing the volume API in the dynamic emulator. There are a lot of decisions to be made about how the sushy storage resources map to the libvirt VMs. I’ve been researching the options and discussing them regularly with the mentors, and will hopefully include the final implementation mapping in my next blog post.

This post first appeared on Varsha Verma’s blog.

Superuser is always interested in open infrastructure community content. Get in touch: editorATopenstack.org.

Photo // CC BY NC
