No matter how you stack up the projections, containers will only grow in popularity.

Olaph Wagoner launched into his talk about micro-services citing research that projects container revenue will approach $3.5 billion in 2021, up from a projected $1.5 billion in 2018.

Wagoner, a software engineer and developer advocate at IBM, isn’t 100 percent sure about that prediction, but he is certain that containers are here to stay.

In a talk at the recent OpenInfra Days Vietnam, he offered an overview of micro-services, Kubernetes and Istio.

Small fry?

There is no industry consensus yet on the properties of micro-services, he says, though defining characteristics include being independently deployable and easy to replace.

As for these services being actually small, that’s up for debate. “If you have a hello world application, that might be considered small if all it does is print to the console,” he explains. “A database server that runs your entire application could still be considered a micro-service, but I don’t think anybody would call that small.”

K8s

Kubernetes defines itself as a portable, extensible open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. “Simply put, it’s a way to manage a bunch of containers or services whatever you want to call them,” he says.
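To make "declarative configuration and automation" a little more concrete, here is a minimal Python sketch of the general idea: you state the desired number of copies of each service, and a loop works out what has to change to get there. This is a toy model, not Kubernetes code; the `reconcile` function and the service names are hypothetical and chosen only for illustration.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[str]:
    """Return the actions needed to move observed replica counts to the desired ones."""
    actions = []
    # Look at every service mentioned in either the desired or the observed state.
    for name in sorted(set(desired) | set(observed)):
        want = desired.get(name, 0)
        have = observed.get(name, 0)
        if want > have:
            actions.append(f"start {want - have} x {name}")
        elif want < have:
            actions.append(f"stop {have - want} x {name}")
    return actions


# Hypothetical example: what we declared versus what is actually running.
desired = {"web": 3, "db": 1}
observed = {"web": 1, "cache": 2}
for action in reconcile(desired, observed):
    print(action)
```

The point of the sketch is that the user only describes the end state; working out the individual start and stop steps is left to the automation.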

Meshing well

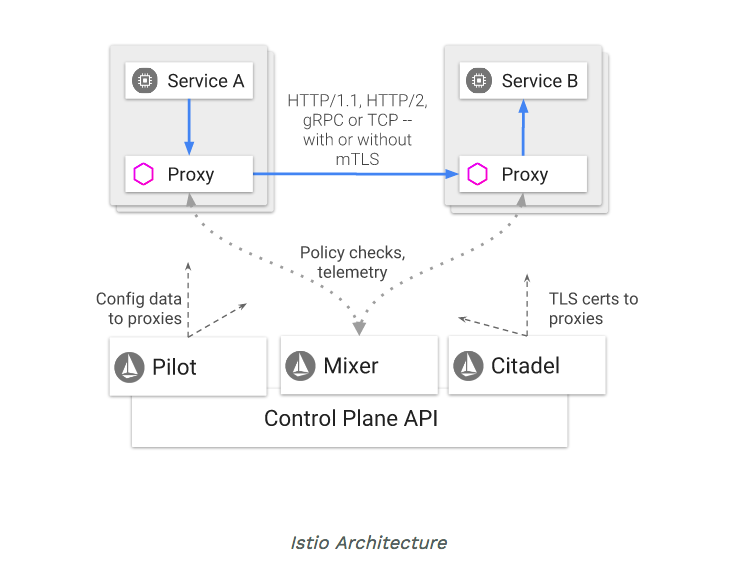

Istio is an open-source service mesh that layers transparently onto existing distributed applications, allowing you to connect, secure, control and observe services. And one last definition: service mesh is the network of micro-services that make up these distributed applications and the interactions between them.

What does this mean for users?

“Istio expands on all the things you can do with the Kubernetes cluster,” Wagoner says. And that’s a long list: automatic load balancing for HTTP, gRPC, WebSocket, and TCP traffic; fine-grained control of traffic behavior with rich routing rules, retries, failovers and fault injection; a pluggable policy layer and configuration API supporting access controls, rate limits and quotas; and secure service-to-service authentication with strong identity assertions between services in a cluster.
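As a rough illustration of what retries, failover and fault injection mean in practice, the Python sketch below retries a request across a list of backends and uses a deliberately broken backend to stand in for an injected fault. It is a simplified model under stated assumptions, not Istio's implementation: in a service mesh this behavior lives in the mesh layer rather than in application code, and every name here (`call_with_retries`, `healthy`, `faulty`, `Unavailable`) is hypothetical.

```python
from typing import Callable, List, Optional


class Unavailable(Exception):
    """Raised by a backend that cannot serve the request."""


def call_with_retries(
    backends: List[Callable[[str], str]], request: str, attempts: int
) -> Optional[str]:
    """Try backends in round-robin order until one answers or attempts run out."""
    for i in range(attempts):
        backend = backends[i % len(backends)]
        try:
            return backend(request)
        except Unavailable:
            # Fail over to the next backend on the next attempt.
            continue
    return None


def healthy(request: str) -> str:
    return f"ok: {request}"


def faulty(request: str) -> str:
    # Injected fault: this backend always fails, like a deliberately broken replica.
    raise Unavailable(request)


print(call_with_retries([faulty, healthy], "GET /reviews", attempts=3))
print(call_with_retries([faulty], "GET /reviews", attempts=3))
```

With one healthy backend in the list, the caller still gets an answer after the first failure; with only the faulty backend, the attempts run out and the call returns `None`.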

“The coolest things are the metrics, logs and traces,” he says. “All of a sudden you can read logs to your heart’s content and know exactly which services are talking to each other, how often and who’s mad at who.”
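The kind of question Wagoner describes, which services talk to each other and how often, can be sketched as a simple aggregation over call records. The Python example below assumes a hypothetical record shape of `(source, destination, status)`; it is not the format of any real Istio log or metric, and the service names are made up.

```python
from collections import Counter
from typing import Iterable, Tuple


def call_counts(records: Iterable[Tuple[str, str, int]]) -> Counter:
    """Count calls per (source, destination) pair from (source, destination, status) records."""
    return Counter((src, dst) for src, dst, _status in records)


# Hypothetical call records for illustration only.
records = [
    ("frontend", "reviews", 200),
    ("frontend", "reviews", 503),
    ("reviews", "ratings", 200),
]
for (src, dst), n in call_counts(records).most_common():
    print(f"{src} -> {dst}: {n}")
```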

What’s inside Istio

Wagoner outlined Istio’s main components:

“Getting down to the nitty gritty”

At the 13:07 mark, Wagoner goes into detail about how Istio works with OpenStack and why it’s worthwhile.

Once you’ve got Kubernetes and installed Istio on top, the K8s admin can create a cluster using the OpenStack API. The K8s user in OpenStack can just use the cluster; they don’t get to see all the APIs doing the heavy lifting behind the scenes. All of this is now possible thanks to the Kubernetes OpenStack Cloud Provider. “What does this bad boy do?” Wagoner asks. “The Kubernetes services sometimes need stuff from the underlying cloud, services, endpoints etc. and that’s the goal.” Ingress (part of Mixer) is a perfect example, he says: it relies on the OpenStack Cloud Provider for load balancing and adding endpoints.

“This is my favorite part of the whole idea of why you would want to make OpenStack run Kubernetes in the first place: the idea of mesh expansion. You’ve got your cloud and you’ve got Kubernetes running on your OpenStack cloud and it’s telling you everything your cluster is doing. You can expand that service mesh to include not only virtual machines from your OpenStack cloud but also bare metal instances.”

Catch the full 27-minute talk here or download the slides.
