This post originally ran on “OpenStack In Production”. Belmiro Moreira is a software engineer passionate about the challenges and complexities of architecting and deploying Cloud Infrastructures in very large-scale environments. He works at CERN, and for the last two years his main role has been to design, develop and build the CERN Cloud Infrastructure based on OpenStack. You should follow him on Twitter.

At the last OpenStack Design Summit in Hong Kong I presented the CERN Cloud Architecture in the talk “Deep Dive into the CERN Cloud Infrastructure”. Since then the infrastructure has grown to a third cell and we have enabled the ceilometer compute-agent. As a result, we needed to make some architecture changes to cope with the number of nova-api calls that the ceilometer compute-agent generates.

The cloud infrastructure now has more than 50000 cores, and after the next hardware deliveries, expected during the coming months, more than 35000 new cores will be added, most of them in the remote Computer Centre in Hungary. We also continue to migrate existing servers to OpenStack compute nodes at an average rate of 100 servers per week.

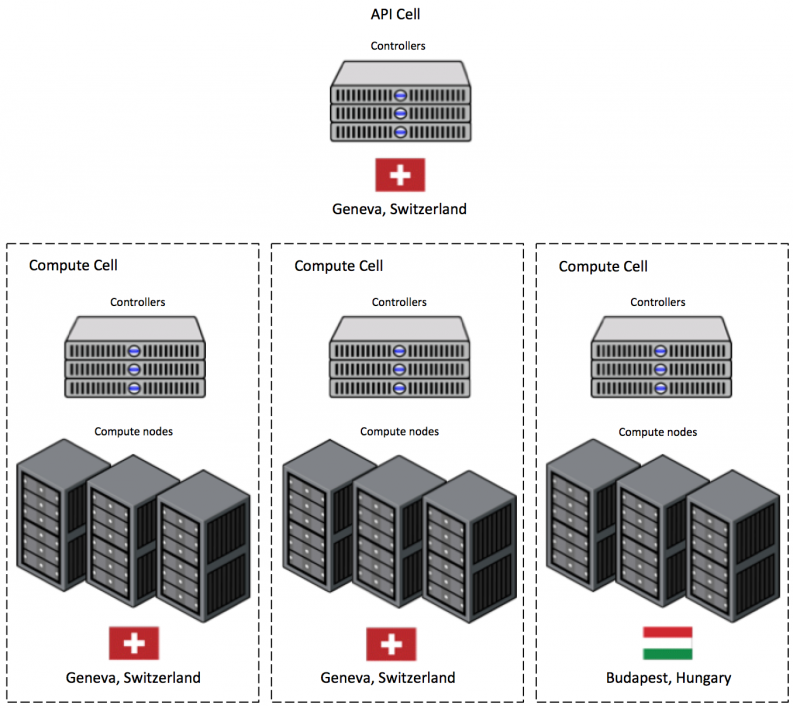

We are using Cells in order to scale the infrastructure and for project distribution.

At the moment we have three Compute Cells. Two are deployed in Geneva, Switzerland and the other in Budapest, Hungary.

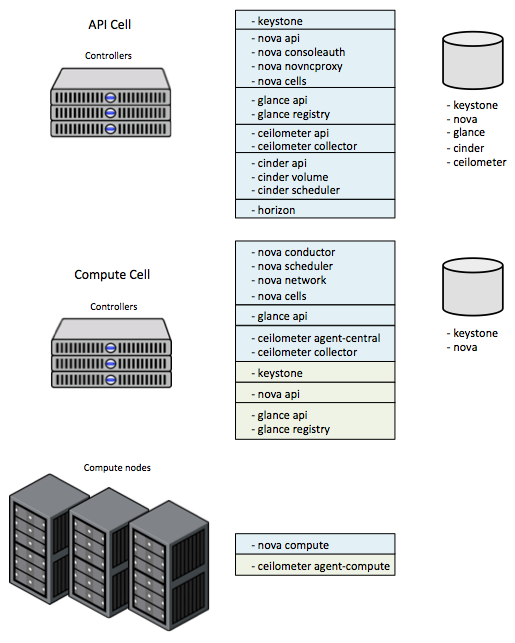

All OpenStack services running in the Cell Controllers are behind a Load Balancer, and we have at least 3 running instances of each of them. As the message broker we are using RabbitMQ clustered with HA queues.

Metering is an important requirement for CERN in order to account for resources, and ceilometer is the obvious solution to provide this functionality.

Considering our Cell setup, we are running ceilometer api and ceilometer collector in the API Cell, and ceilometer agent-central and ceilometer collector in the Compute Cells.

In order to get information about the running VMs, ceilometer compute-agent calls nova-api. In our initial setup we took the simple approach of using the nova-api already running in the API Cell. This means all ceilometer compute-agents authenticate with keystone in the API Cell and then call the nova-api running there. Unfortunately this approach doesn’t work with cells because of this bug: https://bugs.launchpad.net/nova/+bug/1211022

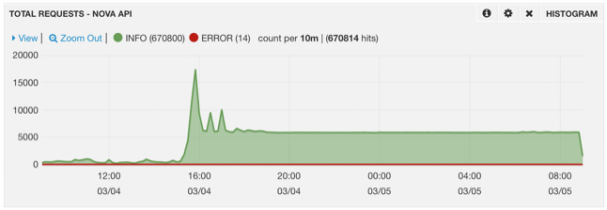

Although ceilometer was not getting the right instance domain and failed to find the VMs on the compute nodes, we noticed a huge increase in the number of calls hitting the nova-api servers in the API Cell, which could degrade the user experience.

We then decided to move this load to the Compute Cells by enabling nova-api compute there and pointing all ceilometer compute-agents to them instead. This approach has three main advantages:
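To get a feel for why this load scales with the size of the infrastructure, here is a minimal back-of-the-envelope sketch. It assumes that every compute-agent issues a fixed number of nova-api calls per polling interval. The function name and the numbers in the example are hypothetical and are not measurements from our deployment.

```python
def nova_api_calls_per_second(agents: int, calls_per_poll: int, interval_s: float) -> float:
    """Rough request rate generated by polling agents.

    Assumes each agent makes `calls_per_poll` nova-api calls once
    every `interval_s` seconds (an illustrative simplification).
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return agents * calls_per_poll / interval_s


# Hypothetical figures: 2000 compute nodes, 1 call per poll, 10-minute interval.
rate = nova_api_calls_per_second(agents=2000, calls_per_poll=1, interval_s=600)
print(f"{rate:.2f} calls/s hitting a single shared nova-api")
```

Under this simplification the rate grows linearly with the number of compute nodes, so every new hardware delivery adds load to whichever nova-api the agents are pointed at.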

- Isolation of nova-api calls per Cell, allowing better dimensioning of the Compute Cell controllers and separation between user and ceilometer requests.

- Nova-api on the Compute Cells uses the nova Cell databases. This distributes the queries between databases and avoids overloading the API Cell database.

- Because of the second point, the VM domain name is now reported correctly.
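The isolation effect can be illustrated with a small sketch that counts how many compute-agents end up talking to each nova-api endpoint in the two setups. The cell names, host names and agent counts below are hypothetical and exist only for the example.

```python
from collections import Counter

# Hypothetical endpoints, for illustration only.
API_CELL_NOVA = "https://api-cell.example.org:8774"
CELL_NOVA = {
    "geneva-1": "https://geneva-1.example.org:8774",
    "geneva-2": "https://geneva-2.example.org:8774",
    "budapest-1": "https://budapest-1.example.org:8774",
}


def nova_endpoint(cell: str, per_cell: bool) -> str:
    """Return the nova-api a compute-agent in `cell` should call."""
    return CELL_NOVA[cell] if per_cell else API_CELL_NOVA


def agents_per_endpoint(agent_cells, per_cell: bool) -> Counter:
    """Count agents per nova-api endpoint for a list of agent cell names."""
    return Counter(nova_endpoint(cell, per_cell) for cell in agent_cells)


# Hypothetical agent placement: one entry per compute node.
agents = ["geneva-1"] * 3 + ["geneva-2"] * 2 + ["budapest-1"]
print(agents_per_endpoint(agents, per_cell=False))  # everything on the API Cell
print(agents_per_endpoint(agents, per_cell=True))   # spread across the cells
```

With the per-cell setup, the API Cell endpoint receives no compute-agent traffic at all, so each Compute Cell controller can be sized for the agents it actually hosts.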

However, to deploy nova-api compute at Compute Cell level we also needed to configure other components: keystone, glance-api and glance-registry.

- Keystone is configured per Compute Cell with only the following endpoints: the local nova-api and the local glance-api. Only service accounts can authenticate with the keystones running in the Compute Cells; users are not allowed.

  Configuring keystone per Compute Cell allows us to distribute the nova-api load at Cell level. For ceilometer, only the Cell databases are used to retrieve instance information. Keystone load is also distributed: instead of using the API Cell keystone that every user relies on, ceilometer uses only the Compute Cell keystones, which are completely isolated from the API Cell. This is especially important because we are not using PKI and our keystone configuration is single threaded.

- From the beginning we have been running glance-api at Compute Cell level. This allows us to have an image cache in the Compute Cells, which is especially important for the Budapest Computer Centre since the Ceph deployment is in Geneva.

  Ceilometer compute-agent also queries for image information using nova-api. However, we can’t use the existing glance-api because it uses the API Cell keystone for token validation. Because of that we set up another glance-api and glance-registry at Compute Cell level, listening on a different port and using the local keystone.

- Nova-api compute is enabled at Compute Cell level. All nova services running in the Compute Cell controllers use the same configuration file, “nova.conf”. This means that for the nova-api service we needed to override the “glance_api_servers” configuration option to point to the new local glance-api, while keeping the old configuration, which is still necessary to spawn instances. The nova-api service uses the local keystone. The metadata service is not affected by this.
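One way to picture the “glance_api_servers” override is as a second configuration layer that only the nova-api service reads on top of the shared “nova.conf”. The sketch below models this with Python's standard configparser. The host names, the port numbers and the idea of a separate override layer are assumptions made for illustration; they do not describe the exact mechanism we use.

```python
import configparser

# Shared settings used by all nova services (hypothetical host and port).
SHARED_NOVA_CONF = """\
[DEFAULT]
glance_api_servers = glance-cell.example.org:9292
"""

# Extra layer read only by nova-api: points to the second, local glance-api
# that validates tokens against the Compute Cell keystone (hypothetical port).
NOVA_API_OVERRIDE = """\
[DEFAULT]
glance_api_servers = glance-cell.example.org:9293
"""


def load_layers(*layers: str) -> configparser.ConfigParser:
    """Read config layers in order; later layers override earlier ones."""
    parser = configparser.ConfigParser(interpolation=None)
    for text in layers:
        parser.read_string(text)
    return parser


compute_view = load_layers(SHARED_NOVA_CONF)
api_view = load_layers(SHARED_NOVA_CONF, NOVA_API_OVERRIDE)

print("nova-compute uses:", compute_view["DEFAULT"]["glance_api_servers"])
print("nova-api uses:    ", api_view["DEFAULT"]["glance_api_servers"])
```

The point of the layering is that the services that spawn instances keep seeing the original glance-api, while nova-api alone is redirected to the glance-api that trusts the local keystone.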

We expect that with this distribution, if ceilometer starts to overload the infrastructure, the user experience will not be affected.

With all these changes in the architecture we have now enabled ceilometer compute-agent in all Compute Cells.

Just out of curiosity, I would like to finish this blog post with a plot showing the number of nova-api calls before and after enabling ceilometer compute-agent across the whole infrastructure. In total, the number of calls increased more than 14 times.


Image credit: CERN

- CERN Cloud Architecture – Update - May 9, 2014

)