After reviewing much of the existing collateral on OpenStack networking, one quickly concludes that the commonly accepted network deployment model centers squarely on tenant networks. In this article, I will argue that, specifically in the private cloud context, tenant networks are problematic and that provider networks (specifically, shared provider networks) may be a better fit.

What’s a tenant?

The term “tenant” as used in this article is interchangeable with the more current term “project.” In a private cloud, a given tenant may be mapped to a particular business unit, a specific multi-tier application or even a single application tier. In these examples, all tenants ultimately belong to a single organization. This contrasts sharply with a public cloud, where individual tenants generally represent separate organizations.

What are tenant networks/provider networks?

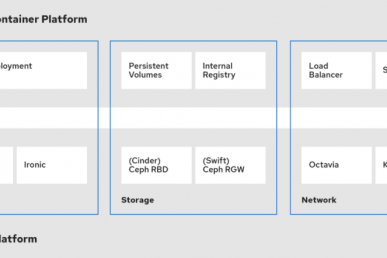

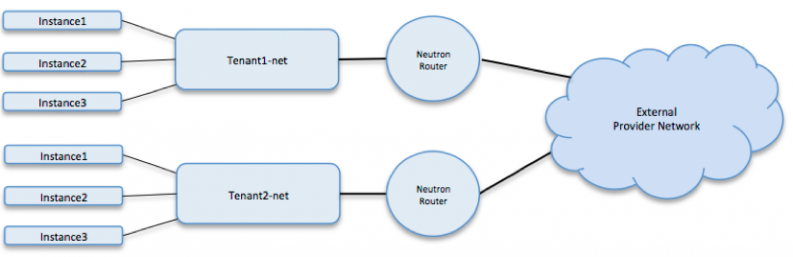

The primary difference between tenant networks and provider networks is who provisions them. Provider networks are created by the OpenStack administrator on behalf of tenants and can be dedicated to a particular tenant, shared by a subset of tenants (see RBAC for networks) or shared by all tenants. Tenant networks, on the other hand, are created by tenants for use by their own instances and, under the default policy settings, cannot be shared.
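For readers who drive Neutron through its REST API, here is a minimal sketch of the two sharing mechanisms mentioned above, expressed as request bodies. Setting the `shared` attribute (admin-only under default policy) exposes a network to every project, while an RBAC policy with the `access_as_shared` action exposes it to one named project. The sketch only builds the method, path and JSON body; authentication and the HTTP call are left out, and the IDs are placeholders.

```python
import json
from typing import Any, Dict, Tuple

# (HTTP method, path, JSON body) for a Neutron v2.0 API call
Request = Tuple[str, str, Dict[str, Any]]


def share_with_all_projects(network_id: str) -> Request:
    """Share a network with every project (admin-only under default policy)."""
    return ("PUT", f"/v2.0/networks/{network_id}", {"network": {"shared": True}})


def share_with_project(network_id: str, project_id: str) -> Request:
    """Share a network with a single project via an RBAC policy."""
    body = {
        "rbac_policy": {
            "object_type": "network",
            "object_id": network_id,
            "action": "access_as_shared",
            "target_tenant": project_id,
        }
    }
    return ("POST", "/v2.0/rbac-policies", body)


if __name__ == "__main__":
    # Placeholder IDs; real ones are UUIDs returned by Neutron.
    for method, path, body in (
        share_with_all_projects("NET_ID"),
        share_with_project("NET_ID", "PROJECT_A_ID"),
    ):
        print(method, path)
        print(json.dumps(body, indent=2))
```

Sharing with "a subset of tenants" simply means one RBAC policy per target project.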

Typically, provider networks are directly associated with a physical network (e.g., a virtual LAN (VLAN)) in the data center, but that is not a requirement. It’s certainly possible to provision a provider network using an overlay protocol (e.g., generic routing encapsulation (GRE) or virtual extensible LAN (VXLAN)) and then map the overlay network to the physical network using software or hardware gateways. Likewise, tenant networks can be instantiated using either underlay or overlay technologies, and tenants are generally unaware of how their tenant networks are physically realized. In short, the choice of underlay or overlay technology generally has no bearing on whether a tenant network or a provider network can be used.
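In API terms, the underlay/overlay choice appears only in the administrator-supplied provider attributes of a network (`provider:network_type`, `provider:physical_network`, `provider:segmentation_id`). The hypothetical helper below builds a provider network body for either a VLAN or a VXLAN segment. The values `physnet1`, VLAN 101 and VNI 5001 are illustrative and would have to match the ML2 configuration of a real deployment.

```python
from typing import Any, Dict, Optional


def provider_network_body(
    name: str,
    network_type: str,
    segmentation_id: Optional[int] = None,
    physical_network: Optional[str] = None,
    shared: bool = True,
) -> Dict[str, Any]:
    """Build a POST /v2.0/networks body for an admin-created provider network."""
    if network_type in ("flat", "vlan") and physical_network is None:
        raise ValueError(f"{network_type} networks must name a physical network")
    if network_type == "vlan" and segmentation_id is not None and not 1 <= segmentation_id <= 4094:
        raise ValueError("VLAN IDs must be between 1 and 4094")
    network: Dict[str, Any] = {
        "name": name,
        "shared": shared,
        "provider:network_type": network_type,
    }
    if physical_network is not None:
        network["provider:physical_network"] = physical_network
    if segmentation_id is not None:
        network["provider:segmentation_id"] = segmentation_id
    return {"network": network}


# Underlay: a shared provider network mapped to VLAN 101 on the physical network.
vlan_net = provider_network_body("prod-vlan101", "vlan", 101, physical_network="physnet1")
# Overlay: the same kind of network carried in VXLAN segment 5001.
vxlan_net = provider_network_body("prod-vxlan5001", "vxlan", 5001)
```

Both results are ordinary networks from a tenant's point of view. By default, only administrators can see the provider attributes.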

Finally, provider networks rely on the physical network infrastructure to provide default gateway (first-hop routing) services, whereas tenant networks rely on Neutron routers to fulfill this role. To connect to the physical network, a Neutron router must attach its upstream interface to a provider network designated as “external.”
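The router side of a tenant network topology can be sketched in the same way. The hypothetical helpers below build the Neutron API calls that create a router whose upstream interface (`external_gateway_info`) sits on an external provider network, and that then attach a tenant subnet to that router. The `enable_snat` flag controls the source NAT discussed later. Depending on policy, changing it may require administrative rights.

```python
from typing import Any, Dict, Tuple

Request = Tuple[str, str, Dict[str, Any]]


def create_router(name: str, external_network_id: str, enable_snat: bool = True) -> Request:
    """Router whose gateway (upstream) port lives on an external provider network."""
    body = {
        "router": {
            "name": name,
            "external_gateway_info": {
                "network_id": external_network_id,
                "enable_snat": enable_snat,
            },
        }
    }
    return ("POST", "/v2.0/routers", body)


def attach_tenant_subnet(router_id: str, subnet_id: str) -> Request:
    """Add a downstream interface; the router takes the subnet's gateway IP."""
    return ("PUT", f"/v2.0/routers/{router_id}/add_router_interface", {"subnet_id": subnet_id})
```

A shared provider network needs neither call, because the first hop is the physical router.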

Tenant networks

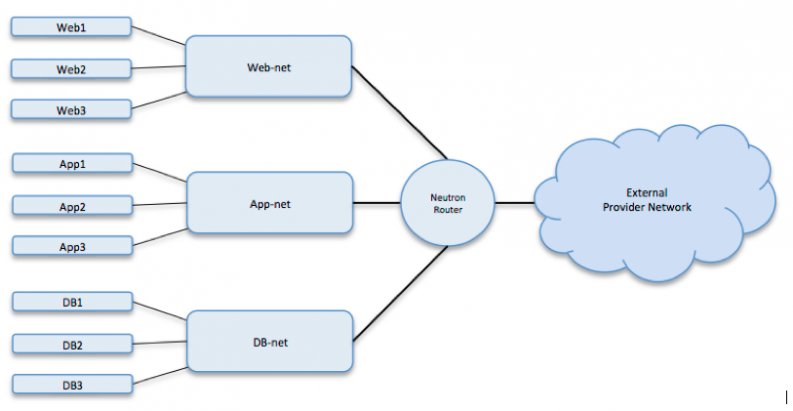

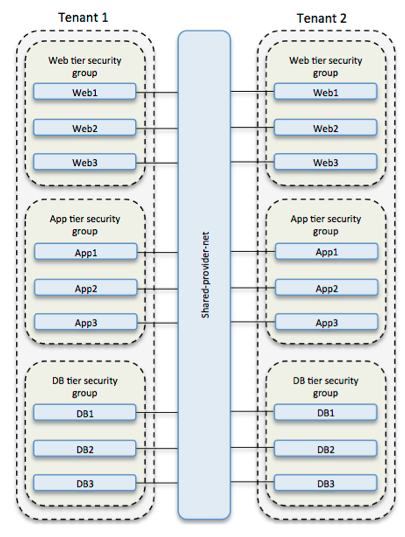

Shared provider network

Network security is now orthogonal to network topology.

Historically, network security could only be applied at the perimeter of a network (a layer 3 boundary), typically using a firewall or router. More recently, techniques such as VLAN stitching have enabled the use of so-called transparent firewalls. Now, by combining Neutron logical ports with Neutron security groups, network security can be applied directly at the point where each workload attaches to the network. As a result, security zones can be provisioned and application tiers protected using security groups rather than dedicated layer 2 networks.
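To make the idea concrete, here is a deliberately simplified model of security group evaluation for three tiers that share one network. It assumes that ingress is default-deny and that each rule admits one TCP port from members of a remote security group (Neutron's `remote_group_id`). Real rules also carry port ranges, ethertypes and `remote_ip_prefix`, and egress rules are ignored here. The group names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Rule:
    protocol: str      # e.g. "tcp"
    port: int          # destination port on the protected instance
    remote_group: str  # traffic must come from a member of this group


def ingress_allowed(
    src_groups: Set[str], dst_groups: Set[str], protocol: str, port: int,
    rules: Dict[str, List[Rule]],
) -> bool:
    """Default deny: allow only if some rule in a destination group matches."""
    return any(
        rule.protocol == protocol and rule.port == port and rule.remote_group in src_groups
        for group in dst_groups
        for rule in rules.get(group, [])
    )


def neutron_rule_body(security_group_id: str, remote_group_id: str, port: int) -> dict:
    """Equivalent POST /v2.0/security-group-rules body for one Rule."""
    return {
        "security_group_rule": {
            "security_group_id": security_group_id,
            "direction": "ingress",
            "ethertype": "IPv4",
            "protocol": "tcp",
            "port_range_min": port,
            "port_range_max": port,
            "remote_group_id": remote_group_id,
        }
    }


# Three tiers on the SAME shared provider network, separated only by groups.
RULES = {
    "app-sg": [Rule("tcp", 8080, "web-sg")],  # web tier may reach the app tier
    "db-sg": [Rule("tcp", 5432, "app-sg")],   # app tier may reach the database
}
web, app, db = {"web-sg"}, {"app-sg"}, {"db-sg"}
```

In this model the web tier cannot reach the database directly, even though both share one layer 2 network. The traditional design achieves the same separation with distinct subnets and a firewall between them.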

Traditional application tiering

Application tiering/security zones using security groups

In addition, Neutron port security prevents layer 2 attack techniques such as MAC and IP spoofing.
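Here is a simplified model of that anti-spoofing check. With port security enabled, a port may only send traffic whose source MAC/IP pair matches its own `mac_address` and `fixed_ips`, or matches an entry in its `allowed_address_pairs` (used, for example, for a clustered virtual IP). The attribute names follow the Neutron port resource. The matching logic is only an approximation of what the firewall drivers enforce, and the addresses are hypothetical.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Port:
    mac_address: str
    fixed_ips: List[str]
    # (IP address or CIDR, MAC); a MAC of None means "the port's own MAC"
    allowed_address_pairs: List[Tuple[str, Optional[str]]] = field(default_factory=list)
    port_security_enabled: bool = True


def source_permitted(port: Port, src_mac: str, src_ip: str) -> bool:
    """Return True if a frame with this source MAC/IP may leave the instance."""
    if not port.port_security_enabled:
        return True  # no anti-spoofing filtering on this port
    mac = src_mac.lower()
    ip = ipaddress.ip_address(src_ip)
    if mac == port.mac_address.lower() and any(ip == ipaddress.ip_address(a) for a in port.fixed_ips):
        return True
    for cidr, pair_mac in port.allowed_address_pairs:
        expected_mac = (pair_mac or port.mac_address).lower()
        if mac == expected_mac and ip in ipaddress.ip_network(cidr, strict=False):
            return True
    return False


vm = Port("fa:16:3e:00:00:01", ["10.20.0.5"], allowed_address_pairs=[("10.20.0.100", None)])
```

Because the check runs at each port, it works the same on a shared provider network as on a dedicated one.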

Together, these Neutron features make shared provider networks a viable alternative to tenant networks for application tiering/security zones.

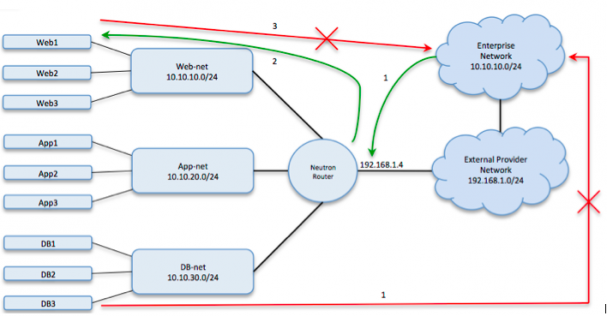

The tenant network IP address space selection problem

When provisioning a tenant network, tenants must specify the IP address space they wish to use. How does a tenant make an appropriate selection? This is more difficult than it might seem at first glance. Let’s assume that either source NAT or destination NAT will be used, so the tenant network IP address space will never be exposed outside the cloud. Even in this case, the tenant cannot pick any IP address space they wish (even within RFC 1918 private address space). If the tenant selects an IP address space that is in use elsewhere in the enterprise (say, 10.10.10.0/24), that enterprise network will be unable to communicate with the tenant network. Furthermore, any other tenant networks connected to the same Neutron router will also be unable to communicate with the enterprise network. This occurs because OpenStack does not apply NAT to the source IP address of traffic originating outside the cloud; it only translates the source IP address of traffic originating from the tenant network. Since 10.10.10.0/24 is directly connected to the Neutron router (and to the instances on the tenant network), traffic from the cloud destined for the enterprise network never finds its way there.
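The failure follows directly from longest-prefix-match routing on the Neutron router. The sketch below models that router's routing table with Python's `ipaddress` module. The colliding 10.10.10.0/24 tenant subnet is a connected route, so traffic addressed to an enterprise host in 10.10.10.0/24 is delivered onto the tenant network instead of the external gateway. The same result holds for every tenant network behind that router. Apart from 10.10.10.0/24, the addresses are hypothetical.

```python
import ipaddress
from typing import List, Optional, Tuple

Route = Tuple[ipaddress.IPv4Network, str]


def next_hop(routes: List[Route], destination: str) -> Optional[str]:
    """Longest-prefix match, as a router performs it."""
    dst = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in routes if dst in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


# Routing table of one Neutron router with two tenant networks attached.
ROUTER = [
    (ipaddress.ip_network("10.10.10.0/24"), "tenant network A (connected)"),  # collides with the enterprise
    (ipaddress.ip_network("10.20.30.0/24"), "tenant network B (connected)"),
    (ipaddress.ip_network("0.0.0.0/0"), "external gateway (SNAT)"),
]

# Traffic to enterprise host 10.10.10.20 never leaves the cloud,
# no matter which tenant network the sending instance sits on.
print(next_hop(ROUTER, "10.10.10.20"))  # tenant network A (connected)
print(next_hop(ROUTER, "172.16.5.9"))   # external gateway (SNAT)
```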

IP address space collision example when using source NAT

This scenario cannot occur in public clouds if tenants use private address space for their networks, since the cloud’s consumers all originate from registered (public) address space. In the private cloud context, however, private address space is quite likely already in use elsewhere in the organization, and some consumers of the cloud are likely to originate from it.

Thankfully, subnet pools were introduced in the Kilo release as a mechanism to deal with the tenant network IP address space selection issue. Unfortunately, Horizon enhancements to support subnet pools were not delivered until the Liberty release.

Even so, subnet pools assume that a large block of address space can be delegated to the cloud.
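A subnet pool moves the address-selection decision from the tenant to the administrator. The sketch below shows the Neutron request bodies involved (`prefixes` and `default_prefixlen` on the pool, `subnetpool_id` on the subnet). It also includes a simple first-fit allocator, which shows why pool-allocated subnets cannot collide with one another. The pool prefix is hypothetical, and Neutron's own allocation algorithm may differ from first-fit.

```python
import ipaddress
from typing import Any, Dict, List

# Hypothetical block delegated to the cloud by the enterprise
POOL = ipaddress.ip_network("10.200.0.0/16")

subnetpool_body: Dict[str, Any] = {
    "subnetpool": {"name": "tenant-pool", "prefixes": [str(POOL)], "default_prefixlen": 24}
}


def subnet_body(network_id: str, subnetpool_id: str) -> Dict[str, Any]:
    """Tenant requests a subnet without choosing a CIDR; the pool picks one."""
    return {"subnet": {"network_id": network_id, "ip_version": 4, "subnetpool_id": subnetpool_id}}


def allocate(pool: ipaddress.IPv4Network, allocated: List[ipaddress.IPv4Network],
             prefixlen: int = 24) -> ipaddress.IPv4Network:
    """First-fit allocation of a non-overlapping prefix from the pool."""
    for candidate in pool.subnets(new_prefix=prefixlen):
        if not any(candidate.overlaps(existing) for existing in allocated):
            allocated.append(candidate)
            return candidate
    raise RuntimeError("subnet pool exhausted")


in_use = [ipaddress.ip_network("10.200.0.0/23")]
print(allocate(POOL, in_use))  # 10.200.2.0/24
print(allocate(POOL, in_use))  # 10.200.3.0/24
```

The whole /16 must still be set aside for the cloud up front. This is the delegation assumption noted above.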

IPv4 address space scarcity

In large organizations, even RFC 1918 private address space is a scarce commodity that must be allocated judiciously. Shared resources generally achieve higher utilization than dedicated ones, and IP address space is no exception: dedicated tenant networks are likely to leave address space “capacity” stranded, while shared provider networks can be utilized to their full potential.
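A back-of-the-envelope calculation illustrates the point. The numbers are hypothetical. Eight tenants each run 40 instances, and each tenant picks a /24 for its tenant network. The alternative is a single shared provider network sized to fit all 320 instances. For simplicity, three addresses per subnet are treated as unusable (network, broadcast and default gateway), and DHCP ports and growth headroom are ignored.

```python
RESERVED = 3  # network, broadcast and default gateway addresses (simplification)


def usable(prefixlen: int) -> int:
    """Usable IPv4 host addresses in a subnet of this prefix length."""
    return 2 ** (32 - prefixlen) - RESERVED


def smallest_prefix(hosts: int) -> int:
    """Longest IPv4 prefix whose subnet still holds `hosts` instances."""
    return 32 - (hosts + RESERVED - 1).bit_length()


tenants, instances_each = 8, 40
in_use = tenants * instances_each                       # 320 instances

dedicated_stranded = tenants * usable(24) - in_use      # eight /24 tenant networks
shared_prefix = smallest_prefix(in_use)                 # one shared provider network
shared_stranded = usable(shared_prefix) - in_use

print(f"dedicated: {tenants} x /24, {dedicated_stranded} addresses stranded")
print(f"shared: one /{shared_prefix}, {shared_stranded} addresses stranded")
```

Here the eight /24s strand 1,704 addresses, while a single /23 strands 189. Right-sizing each tenant network would narrow the gap, but that requires every tenant to predict its footprint accurately.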

Network address translation (NAT) – Use it if you must

By default, source NAT (essentially port address translation) is enabled when Neutron routers are attached to external provider networks. Optionally, destination NAT can be enabled on a per-instance basis using floating IP addresses allocated from the external provider network. It’s important to point out that certain protocols break when NAT is employed. RFC 3027 details a few such examples, while RFC 2993 expounds on the architectural implications of NAT. In essence, NAT violates the end-to-end principle, which holds that end systems should manage state while the network remains a simple datagram service. NAT is certainly required in specific use cases but should be avoided where possible.
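Source NAT in this sense is port address translation. Many instances share the router's single external address and are told apart by translated source ports. The toy translation table below is a hypothetical class. Real implementations track full connections (protocol, both endpoints, timeouts) in the kernel's connection tracking, and port exhaustion is ignored here. The model also shows why unsolicited inbound traffic cannot reach an instance without a floating IP: there is no mapping to translate it back. The address 203.0.113.10 is a documentation address standing in for the router's external IP.

```python
import itertools
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class PortAddressTranslator:
    def __init__(self, external_ip: str, first_port: int = 1024) -> None:
        self.external_ip = external_ip
        self._next_port = itertools.count(first_port)
        self._outbound: Dict[Endpoint, Endpoint] = {}  # inside -> outside
        self._inbound: Dict[Endpoint, Endpoint] = {}   # outside -> inside

    def translate_outbound(self, inside: Endpoint) -> Endpoint:
        """Rewrite an instance's source endpoint to the shared external IP."""
        if inside not in self._outbound:
            outside = (self.external_ip, next(self._next_port))
            self._outbound[inside] = outside
            self._inbound[outside] = inside
        return self._outbound[inside]

    def translate_inbound(self, outside: Endpoint) -> Optional[Endpoint]:
        """Return traffic is mapped back; unsolicited traffic has no entry."""
        return self._inbound.get(outside)


nat = PortAddressTranslator("203.0.113.10")
a = nat.translate_outbound(("10.10.10.5", 40000))  # ('203.0.113.10', 1024)
b = nat.translate_outbound(("10.10.10.6", 40000))  # ('203.0.113.10', 1025)
```

Protocols that embed addresses or ports in their payloads (the RFC 3027 cases) see only the untranslated inside values, which is one reason they break.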

Furthermore, some enterprises mandate that all networked systems be reachable, which means every instance must be accessible from outside the cloud. This implies either a 1:1 NAT for every instance or, more logically, dispensing with NAT altogether.
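In Neutron, a 1:1 NAT is a floating IP bound to an instance's port. The "every instance reachable" mandate therefore translates into one floating IP per port, each consuming an address on the external provider network. Here is a minimal sketch of the request bodies, with placeholder IDs:

```python
from typing import Any, Dict, List


def floating_ip_bodies(external_network_id: str, port_ids: List[str]) -> List[Dict[str, Any]]:
    """One POST /v2.0/floatingips body per instance port (1:1 destination NAT)."""
    return [
        {"floatingip": {"floating_network_id": external_network_id, "port_id": port_id}}
        for port_id in port_ids
    ]


bodies = floating_ip_bodies("EXT_NET_ID", ["PORT_1", "PORT_2", "PORT_3"])
print(len(bodies), "external addresses consumed")  # 3 external addresses consumed
```

Each instance then consumes two addresses, one on the tenant network and one on the external network, which compounds the IPv4 scarcity problem described earlier.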

Tenants can choose to disable NAT entirely when attaching their Neutron routers to an external provider network. However, this leads to the tenant network reachability issue detailed in the next section.

Tenant network reachability

When using source NAT or destination NAT, instances use IP addresses from the external provider network to communicate with systems outside the cloud. The subnet associated with the external provider network is made routable as part of the initial provider network deployment, so instances are able to communicate with systems outside the cloud.

However, if NAT is disabled, the IP address spaces of the tenant networks are directly exposed to systems outside the cloud. If multiple Neutron routers have been deployed, how does the physical routing infrastructure know which IP address spaces reside behind a particular Neutron router? OpenStack Neutron does not currently support any dynamic routing protocols, so the tenant networks cannot be advertised programmatically as part of the tenant network creation process. To make the tenant networks reachable from external systems, a scheme outside of OpenStack must be devised.
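One such out-of-band scheme generates static routes on the physical routers from Neutron's own data. Each tenant subnet is routed to the external IP of the Neutron router that serves it (the address listed in the router's `external_gateway_info.external_fixed_ips`). The hypothetical generator below also rejects overlapping tenant subnets, because the physical network could not route them unambiguously. Router names and addresses are illustrative, and the output uses Cisco IOS-style `ip route` syntax purely as an example.

```python
import ipaddress
from typing import Dict, List, Tuple

# router name -> (router's IP on the external network, tenant subnets behind it)
Routers = Dict[str, Tuple[str, List[str]]]


def static_routes(routers: Routers) -> List[str]:
    """Emit one static route per tenant subnet, refusing overlapping subnets."""
    seen: List[Tuple[ipaddress.IPv4Network, str]] = []
    lines: List[str] = []
    for name, (gateway_ip, subnets) in sorted(routers.items()):
        for cidr in subnets:
            net = ipaddress.ip_network(cidr)
            for other, owner in seen:
                if net.overlaps(other):
                    raise ValueError(f"{net} behind {name} overlaps {other} behind {owner}")
            seen.append((net, name))
            lines.append(f"ip route {net.network_address} {net.netmask} {gateway_ip}")
    return lines


ROUTERS = {
    "router-a": ("192.0.2.11", ["10.30.1.0/24", "10.30.2.0/24"]),
    "router-b": ("192.0.2.12", ["10.30.8.0/22"]),
}
for line in static_routes(ROUTERS):
    print(line)
```

A script like this must be rerun whenever tenants create or delete subnets, which is exactly the operational coupling that dynamic routing would remove.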

Although far less common than routed tenant network topologies, tenant networks can also be isolated. In this case, the layer 2 network created by the tenant has no connectivity to the outside world through a Neutron router; instead, it might be reached through a dual-homed jump host, for example.

Neutron router dependency

Tenant networks rely on Neutron routers for connectivity to other networks. This also means the tenant networks are constrained by the features supported by Neutron routers. This can be an issue if, for example, a tenant has a requirement for IP multicast routing. In contrast, since provider networks rely on the physical network infrastructure for layer 3 services, a more robust set of features is potentially available to instances.

Looking to the future and IPv6

IPv6 addresses some of the concerns raised above. Chiefly, address space scarcity is not a current concern with IPv6. Likewise, allocation of large blocks of IPv6 address space to subnet pools eliminates the tenant network IP address space selection problem. Since address space conservation is not required, all networks can be allocated unique, routable IPv6 address space and therefore NAT is not required.
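The scale difference is easy to quantify. Assume a hypothetical /48 is delegated to an IPv6 subnet pool that hands out /64 tenant subnets, with the documentation prefix 2001:db8::/48 standing in for a real allocation. That pool holds 65,536 subnets, as many as there are /24s in all of 10.0.0.0/8, and each /64 on its own has 2^64 addresses.

```python
import ipaddress

pool = ipaddress.ip_network("2001:db8::/48")  # documentation prefix, stands in for a real /48
subnet_count = 2 ** (64 - pool.prefixlen)      # /64 tenant subnets in the pool
first_subnet = next(pool.subnets(new_prefix=64))

# For comparison: /24 subnets in the entire 10.0.0.0/8 private block
ten_slash_24s = 2 ** (24 - ipaddress.ip_network("10.0.0.0/8").prefixlen)

subnetpool_body = {
    "subnetpool": {"name": "tenant-v6-pool", "prefixes": [str(pool)], "default_prefixlen": 64}
}

print(subnet_count, ten_slash_24s)             # 65536 65536
print(first_subnet)                            # 2001:db8::/64
print(first_subnet.num_addresses == 2 ** 64)   # True
```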

However, the question still remains: Are dedicated layer 2 networks necessary if application tiering/security zones can be implemented using Neutron security groups and MAC/IP spoofing can be prevented with Neutron port security?

The benefits of provider networks

Provider networks are pre-created by the OpenStack administrator for use by tenants. This frees tenants from the responsibility (read: burden) of provisioning networks, selecting an appropriate address space and making sure it is reachable. Shared provider networks have a higher likelihood of making efficient use of scarce IPv4 address space. Application tiering/security zones no longer necessitate the deployment of dedicated layer 2 networks and can be implemented using security groups on a common, shared provider network. Lastly, if a dedicated layer 2 network is mandated, a dedicated provider network can be provisioned.

Conclusion

OpenStack administrators must decide what their Neutron network deployment strategy will leverage: tenant networks, provider networks or some combination of the two. Much of the existing documentation focuses on the deployment and management of tenant networks, while provider networks seem to have been given short shrift. Specifically in the private cloud context, provider networks deserve serious consideration because of the challenges presented by tenant networks and the corresponding benefits of provider networks.

This is Swanson’s second post on OpenStack networking; catch his previous tutorial on provisioning Neutron networks.

Swanson has approximately 20 years of IT infrastructure experience, with much of that time spent in the network engineering and Unix system engineering spaces. He is a member of Wells Fargo’s OpenStack private cloud engineering team, focused on software-defined networking (SDN) and network functions virtualization (NFV). Prior to joining Wells Fargo, he worked in the semiconductor (Micron Technology), e-commerce (Amazon.com) and higher education (Bethel University) industries. Alain holds a Bachelor of Science degree in physics and a Master of Science degree in computer science.

Superuser is always interested in how-tos and other contributions from the OpenStack community. Please get in touch!

- Tenant networks vs. provider networks in the private cloud context - March 4, 2016

- Provisioning Neutron networks with the NSX-v Plugin - December 11, 2015
