If you skipped the first part of this series, I am not responsible for the technical confusion and emotional stress created while you read this one. I highly recommend reading part one before continuing. However, if you like to live dangerously, then please carry on.

Part 1 recap:

- You were introduced to the main character, OpenStack.

- You learned its whereabouts and became acquainted with the “neighborhood.”

- You learned what it needs to thrive and how to set it up for yourself.

Here’s an overview of what we learned in the last post:

Basic setup for deploying OpenStack

The first step to implementing OpenStack is to set up the identity service, Keystone.

A. Keystone

Simply put, Keystone is a service that manages all identities. These identities can belong to the customers you offer services to, and also to the micro-services that make up OpenStack. Each identity has a username and password associated with it, along with information on who is allowed to do what. There is much more to it, but we will leave some detail out for now. If you work in tech, you’re already familiar with the concept of identity/access cards. These cards not only identify who you are but also control which doors you can and cannot open on company premises. So, to set up this modern-day OpenStack watchman, perform the following steps:

@controller

Log in to MariaDB:

sudo mysql -u root -p

Create a database for Keystone and give the Keystone user full privileges on the newly created database:

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

Install the Keystone software:

sudo apt install keystone

Edit the Keystone configuration file:

sudo vi /etc/keystone/keystone.conf

#Tell keystone how to access the DB

[database]

#(Comment out the existing connection entry and add this one)

connection = mysql+pymysql://keystone:MINE_PASS@controller/keystone

#Some token management I don’t fully understand, but put it in; it’s important.

[token]

provider = fernet

This command will initialize your Keystone database using the configuration that you just did above:

sudo su -s /bin/sh -c "keystone-manage db_sync" keystone

Since we have no identity management yet (because you are setting it up right now!), we need to bootstrap the identity management service to create an admin user for Keystone. Start by initializing the key repositories that Keystone uses for tokens and credentials:

sudo keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

sudo keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Since OpenStack is composed of a lot of micro-services, each service that we define will need one or more endpoint URLs. This is how other services will access this service (notice, in the sample below, that there are three URLs). Run the following:

sudo keystone-manage bootstrap --bootstrap-password MINE_PASS \

--bootstrap-admin-url http://controller:35357/v3/ \

--bootstrap-internal-url http://controller:35357/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

You need to configure Apache for Keystone. Keystone uses Apache to handle the requests it receives from its buddy services in OpenStack (OS from here on). Let’s just say that Apache is like a good secretary that is better at handling and managing requests than Keystone would be on its own.

sudo vi /etc/apache2/apache2.conf

ServerName controller

sudo service apache2 restart

sudo rm -f /var/lib/keystone/keystone.db

One of the most useful ways to interact with OS is via the command line. To do that, you need to be authenticated and authorized. An easy way to handle this is to create the following file and then source it in your command line.

vi ~/keystonerc_admin

export OS_USERNAME=admin

export OS_PASSWORD=MINE_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_DOMAIN_NAME=default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export PS1='[\u@\h \W(keystone_admin)]$ '

To source the file, use the following command on the controller:

source ~/keystonerc_admin

Before we proceed, we need to talk about a few additional terms. OpenStack utilizes the concepts of domains, projects and users.

- Users are, well, just users of OpenStack.

- Projects are similar to customers in an OpenStack environment. So, if I am using my OpenStack environment to host VMs for Customer ABC and Customer XYZ, then ABC and XYZ could be two projects.

- Domains are a recent addition (as if things weren’t already complex enough) that gives you further granularity. If you wanted to have administrative divisions within OpenStack so each division could manage its own environment, you would use domains. So you could put ABC and XYZ in different domains and have separate administration for each, or you could put them in the same domain and manage them with a single administration. It’s just an added level of granularity. And you thought your relationships were complex!
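To make the hierarchy a bit more concrete, here is a minimal sketch that gives each of the two example customers its own domain, project and first user. The names (`abc-domain`, `alice`, `bob` and the `onboard_customer` helper) are made up for illustration, it assumes a role called `user` already exists in your deployment, and by default it only prints the `openstack` commands instead of running them.

```shell
#!/bin/sh
# Dry-run sketch: one domain, one project and one user per customer.
# All names below are hypothetical.
DRY_RUN="${DRY_RUN:-1}"

run() {
    # Print the command in dry-run mode; execute it otherwise.
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

onboard_customer() {
    # $1 = customer name (used for the domain and the project), $2 = first user
    customer="$1"
    user="$2"
    run openstack domain create "${customer}-domain"
    run openstack project create --domain "${customer}-domain" "$customer"
    run openstack user create --domain "${customer}-domain" --password-prompt "$user"
    # Assumes a role named "user" exists; pick one from `openstack role list`.
    run openstack role add --project "$customer" --project-domain "${customer}-domain" \
        --user "$user" --user-domain "${customer}-domain" user
}

onboard_customer abc alice
onboard_customer xyz bob
```

Putting both customers in the same domain would simply mean passing the same domain name to every command.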

Create a special project to hold all the internal users (most micro-services in OpenStack have their own service users, and they are associated with this special project):

openstack project create --domain default \

--description "Service Project" service

Verify Operations

Run the following command to request an authentication token using the admin user:

openstack --os-auth-url http://controller:35357/v3 \

--os-project-domain-name default --os-user-domain-name default \

--os-project-name admin --os-username admin token issue

Password:

+------------+-----------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------+

| expires | 2016-11-30 13:05:15+00:00 |

| id | gAAAAABYPsB7yua2kfIZhoDlm20y1i5IAHfXxIcqiKzhM9ac_MV4PU5OPiYf_ |

| | m1SsUPOMSs4Bnf5A4o8i9B36c-gpxaUhtmzWx8WUVLpAtQDBgZ607ReW7cEYJGy |

| | yTp54dskNkMji-uofna35ytrd2_VLIdMWk7Y1532HErA7phiq7hwKTKex-Y |

| project_id | b1146434829a4b359528e1ddada519c0 |

| user_id | 97b1b7d8cb0d473c83094c795282b5cb |

+------------+-----------------------------------------------------------------+

Congratulations, you have the keys!
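If the token request fails instead, one thing worth checking first is whether every variable from the credentials file actually made it into your shell. Here is a minimal sketch; `check_openrc` is a hypothetical helper name, and the variable list is simply the set of `OS_*` exports from the file created earlier.

```shell
#!/bin/sh
# Report which of the expected OS_* variables are missing from the shell.
check_openrc() {
    missing=0
    for var in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
               OS_USER_DOMAIN_NAME OS_PROJECT_DOMAIN_NAME \
               OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        # Indirect lookup of the variable whose name is stored in $var.
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "missing: $var"
            missing=1
        fi
    done
    return "$missing"
}
```

After sourcing the credentials file, `check_openrc && openstack token issue` only attempts the token request when nothing is missing.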

Now we meet Glance, the image service. Glance is essentially a store for all the different flavors (images) of virtual machines that you may want to offer to your customers. When a customer requests a particular type of virtual machine, Glance finds the correct image in its repository and hands it over to another service (which we will discuss later).

B. Glance

To configure OpenStack’s precious image store, perform the following steps on the @controller:

Log in to the DB:

sudo mysql -u root -p

Create the database and give full privileges to the Glance user:

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

Source the keystonerc_admin file to get command line access:

source ~/keystonerc_admin

Create the Glance user:

openstack user create --domain default --password-prompt glance

Give the user rights:

openstack role add --project service --user glance admin

Create the Glance service:

openstack service create --name glance \

--description "OpenStack Image" image

Create the Glance endpoints:

openstack endpoint create --region RegionOne \

image public http://controller:9292

openstack endpoint create --region RegionOne \

image internal http://controller:9292

openstack endpoint create --region RegionOne \

image admin http://controller:9292

Install the Glance software:

sudo apt install glance

Configure the configuration file for Glance:

sudo vi /etc/glance/glance-api.conf

#Configure the DB connection

[database]

connection = mysql+pymysql://glance:MINE_PASS@controller/glance

#Tell glance how to get authenticated via keystone. Every time a service needs to do something it needs to be authenticated via keystone.

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = MINE_PASS

#(Comment out or remove any other options in the [keystone_authtoken] section.)

[paste_deploy]

flavor = keystone

#Glance can store images in different locations. We are using file for now

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

Edit another configuration file:

sudo vi /etc/glance/glance-registry.conf

#Configure the DB connection

[database]

connection = mysql+pymysql://glance:MINE_PASS@controller/glance

#Tell glance-registry how to get authenticated via keystone.

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = MINE_PASS

#(Comment out or remove any other options in the [keystone_authtoken] section.)

#Use the Keystone-authenticated request pipeline. (No idea beyond that; just use it.)

[paste_deploy]

flavor = keystone

This command will initialize the Glance database using the configuration files above:

sudo su -s /bin/sh -c "glance-manage db_sync" glance

Start the Glance services:

sudo service glance-registry restart

sudo service glance-api restart

Verify Operation

Download a cirros cloud image:

wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

Log in to the command line:

source ~/keystonerc_admin

Create an OpenStack image using the command below:

openstack image create "cirros" \

--file cirros-0.3.4-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--public

List the images and ensure that your image was created successfully:

openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| d5edb2b0-ad3c-453a-b66d-5bf292dc2ee8 | cirros | active |

+--------------------------------------+--------+--------+

Are you noticing a pattern here? In part one, I mentioned that the OpenStack components are similar but subtly different. As you will continue to see, most OpenStack services follow a standard pattern in configuration. The pattern is as follows:

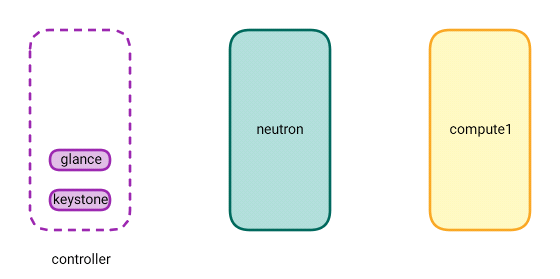

General set of steps for configuring OpenStack components

Most OpenStack components will follow the sequence above with minor deviations. So, if you’re having trouble configuring a component, it’s a good idea to refer to this list and see what steps you’re missing.
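Judging from the Glance walkthrough above, the recurring steps are roughly: database, service user, service entry and endpoints, then install, configure, sync and restart. As a rough sketch, the function below prints that sequence for an arbitrary service. `print_service_steps` and its arguments are hypothetical names, the output is meant to be read and adapted by hand rather than piped into a shell, and individual services deviate from it (Keystone, for instance, is bootstrapped rather than registered this way).

```shell
#!/bin/sh
# Print (not run) the recurring setup steps for one OpenStack service.
# Usage: print_service_steps NAME TYPE DESCRIPTION URL
print_service_steps() {
    name="$1"; svc_type="$2"; desc="$3"; url="$4"
    cat <<EOF
# 1. Database (run inside: sudo mysql -u root -p)
CREATE DATABASE ${name};
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'localhost' IDENTIFIED BY 'MINE_PASS';
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'%' IDENTIFIED BY 'MINE_PASS';
# 2. Service user with the admin role in the service project
openstack user create --domain default --password-prompt ${name}
openstack role add --project service --user ${name} admin
# 3. Service entry and its three endpoints
openstack service create --name ${name} --description "${desc}" ${svc_type}
openstack endpoint create --region RegionOne ${svc_type} public ${url}
openstack endpoint create --region RegionOne ${svc_type} internal ${url}
openstack endpoint create --region RegionOne ${svc_type} admin ${url}
# 4. Install the packages, edit the config files, sync the database, restart
EOF
}

print_service_steps glance image "OpenStack Image" http://controller:9292
```

Comparing this output against what you actually typed for a component is a quick way to spot a skipped step.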

What follows is probably one of the most important parts of OpenStack. It’s called Nova and, although it has nothing to do with stars and galaxies, it is still quite spectacular. At the end of the last section you got an operating system image (from here on out, references to “image” mean “Glance image”).

Now I’ll introduce another concept, called an instance. An instance is what is created out of an image. This is the virtual machine that you use to provide services to your customers. In simpler terms, let’s imagine you had a Windows CD and you use this CD to install Windows on a laptop. Then you use the same CD to install another Windows on another laptop. You input different license keys for both, create different users for each and they are then two individual and independent laptops running Windows from the same CD.

Using this analogy, an image is equivalent to the Windows CD with the Windows running on each of the laptops acting as different instances. Nova does the same thing by taking the CD from Glance and creating, configuring and managing instances in the cloud which are then handed to customers.

C. Nova

Nova is one of the more complex components that resides on more than one machine and does different things on each. A component of Nova sits on the controller and is responsible for overall management and communication with other OpenStack services and external world services. The second component sits on each compute (yes, you can have multiple computes, but we will look at those later). This service is primarily responsible for talking to the virtual machine monitors and making them launch and manage instances. Perform the following configuration to configure the Nova component:

@controller

Create the database(s) and grant relevant privileges:

sudo mysql -u root -p

CREATE DATABASE nova_api;

CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

Log in to the command line:

source ~/keystonerc_admin

Create the user and assign the roles:

openstack user create --domain default \

--password-prompt nova

openstack role add --project service --user nova admin

Create the service and the corresponding endpoints:

openstack service create --name nova \

--description "OpenStack Compute" compute

openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1/%\(tenant_id\)s

Install the software:

sudo apt install nova-api nova-conductor nova-consoleauth \

nova-novncproxy nova-scheduler

Configure the configuration file:

sudo vi /etc/nova/nova.conf

#Configure the DB-1 access

[api_database]

connection = mysql+pymysql://nova:MINE_PASS@controller/nova_api

#Configure the DB-2 access (nova has 2 DBs)

[database]

connection = mysql+pymysql://nova:MINE_PASS@controller/nova

[DEFAULT]

#Configure how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Use the below. Some details will follow later

auth_strategy = keystone

my_ip = 10.30.100.215

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#Tell Nova how to access keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = MINE_PASS

#This configures remote access to instance consoles. (Sounds like French? Just take it on faith. We will explore this in a much later episode.)

[vnc]

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

#Nova needs to talk to glance to get the images

[glance]

api_servers = http://controller:9292

#Some locking mechanism for message queuing (Just use it.)

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

Initialize both of the databases using the configuration done above:

sudo su -s /bin/sh -c "nova-manage api_db sync" nova

sudo su -s /bin/sh -c "nova-manage db sync" nova

Start all the Nova services:

sudo service nova-api restart

sudo service nova-consoleauth restart

sudo service nova-scheduler restart

sudo service nova-conductor restart

sudo service nova-novncproxy restart

@compute1

Install the software:

sudo apt install nova-compute

Configure the configuration file:

sudo vi /etc/nova/nova.conf

[DEFAULT]

#Configure how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Take it on faith for now

auth_strategy = keystone

my_ip = 10.30.100.213

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#Tell the nova-compute how to access keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = MINE_PASS

#This configures remote access to instance consoles. (Sounds like French? Just take it on faith. We will explore this in a much later episode.)

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

#Nova needs to talk to glance to get the images

[glance]

api_servers = http://controller:9292

#Some locking mechanism for message queuing (Just use it.)

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

The following requires some explanation. In a production environment, your compute will be a physical machine, so the steps below will NOT be required. But since this is a lab, we need to set the virtualization type for the hypervisor to qemu (as opposed to kvm). This setting runs the hypervisor without looking for the hardware acceleration that KVM provides on a physical machine. So, you are going to run virtual machines inside a virtual machine in the lab and, trust me, it works.
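If you are unsure which case applies to your compute node, one common check is to count the CPU flags for hardware virtualization (`vmx` on Intel, `svm` on AMD) in `/proc/cpuinfo`. The sketch below wraps that check; `pick_virt_type` is a hypothetical helper name, and the script assumes a Linux host.

```shell
#!/bin/sh
# Map the number of vmx/svm flag lines to a libvirt virt_type value.
pick_virt_type() {
    if [ "$1" -gt 0 ]; then
        echo kvm    # hardware acceleration is available
    else
        echo qemu   # no acceleration: fall back to plain emulation
    fi
}

# Count matching lines; an unreadable /proc/cpuinfo is treated as zero.
count=$(grep -Ec '(vmx|svm)' /proc/cpuinfo 2>/dev/null || true)
count="${count:-0}"
echo "virt_type = $(pick_virt_type "$count")"
```

Inside a lab VM without nested virtualization the count is typically zero, which is the qemu case described above.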

For a virtual compute:

sudo vi /etc/nova/nova-compute.conf

[libvirt]

virt_type = qemu

Start the Nova service:

sudo service nova-compute restart

Verify Operation

@controller

Log in to the command line:

source ~/keystonerc_admin

Run the following command to list the Nova services. Ensure the state is up as shown below:

openstack compute service list

+----+------------------+-----------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+-----------------------+----------+---------+-------+----------------------------+

| 3 | nova-consoleauth | controller | internal | enabled | up | 2016-11-30T12:54:39.000000 |

| 4 | nova-scheduler | controller | internal | enabled | up | 2016-11-30T12:54:36.000000 |

| 5 | nova-conductor | controller | internal | enabled | up | 2016-11-30T12:54:34.000000 |

| 6 | nova-compute | compute1 | nova | enabled | up | 2016-11-30T12:54:33.000000 |

+----+------------------+-----------------------+----------+---------+-------+----------------------------+
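If you end up running this check often, it can be scripted. The sketch below reads `binary state` pairs, which is what `openstack compute service list -f value -c Binary -c State` should print, and reports anything that is not `up`. `all_services_up` is a hypothetical helper name, and the sample input just mirrors the table above.

```shell
#!/bin/sh
# Read "binary state" pairs on stdin; fail if any service is not up.
all_services_up() {
    status=0
    while read -r binary state; do
        [ -z "$binary" ] && continue    # skip blank lines
        if [ "$state" != "up" ]; then
            echo "not up: $binary ($state)"
            status=1
        fi
    done
    return "$status"
}

# Sample input; on the controller, replace it with the live command:
#   openstack compute service list -f value -c Binary -c State | all_services_up
printf '%s\n' \
    "nova-consoleauth up" \
    "nova-scheduler up" \
    "nova-conductor up" \
    "nova-compute up" | all_services_up && echo "all services up"
```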

Notice the similarity in the sequence of steps followed to configure Nova?

Time for some physics! Remember that our goal is to provide services to our customers. These services are in the form of virtual machines, or services running over these virtual machines. If we want to cater to many customers, then each of them will have their own set of services they are consuming. These services, like any other infrastructure, will require a network. You may need things like routers, firewalls, load balancers, VPN and so on. Now imagine setting these up manually for each customer. Yeah, that’s not happening. This is exactly what Neutron does for you.

D. Neutron

Of course, it’s never going to be easy. In my personal opinion, Neutron is the part of OpenStack’s personality that has the worst temper and suffers from very frequent mood swings. In simpler terms, it’s complex. In our setup, the major Neutron services will reside on two servers, namely the controller and the neutron node. You could put everything on one machine and it would work; however, splitting is suggested by the official documentation. (My hunch is that it has something to do with easier scaling.) There will also be a Neutron component on the compute node, which I will explain later.

However, before we get into detailing the configuration, I need to explain a few minor terms:

- When we talk about networks under Neutron, we will mainly come across two types. The first is the external network. It is usually configured once and represents the network used by OS to access the external world. The second type is tenant networks, which are the networks assigned to customers.

- An OpenStack environment also requires a virtual switching (bridging) component in order to manage virtualized networking across the neutron and compute nodes. The two most commonly used components are Linux Bridge and Open vSwitch. (If you want to understand a bit more about Open vSwitch, you can refer to one of my other entries, Understanding Open vSwitch.) We will be using Open vSwitch for our environment. Please note that if you intend to use Linux Bridge, the configuration will be different.

To deploy Neutron, perform the following configuration:

@controller

Create the database and assign full rights to the Neutron user (yawn!):

sudo mysql -u root -p

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

Log in to the command line:

source ~/keystonerc_admin

Create the Neutron user and add the role:

openstack user create --domain default --password-prompt neutron

openstack role add --project service --user neutron admin

Create the Neutron service and the respective endpoints:

openstack service create --name neutron \

--description "OpenStack Networking" network

openstack endpoint create --region RegionOne \

network public http://controller:9696

openstack endpoint create --region RegionOne \

network internal http://controller:9696

openstack endpoint create --region RegionOne \

network admin http://controller:9696

Install the software components:

sudo apt install neutron-server neutron-plugin-ml2

Configure the Neutron config file:

sudo vi /etc/neutron/neutron.conf

[DEFAULT]

#ML2 is the Modular Layer 2 plugin

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[database]

#Configure the DB connection

connection = mysql+pymysql://neutron:MINE_PASS@controller/neutron

[keystone_authtoken]

#Tell neutron how to talk to keystone

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = MINE_PASS

[nova]

#Tell neutron how to talk to nova to inform nova about changes in the network

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = MINE_PASS

Configure the plugin file:

sudo vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

#In our environment we will use vlan networks so the below setting is sufficient. You could also use vxlan and gre, but that is for a later episode

type_drivers = flat,vlan

#Here we are telling neutron that all our customer networks will be based on vlans

tenant_network_types = vlan

#Our SDN type is openVSwitch

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

#External network is a flat network

flat_networks = external

[ml2_type_vlan]

#This is the range we want to use for vlans assigned to customer networks.

network_vlan_ranges = external,vlan:1381:1399

[securitygroup]

#Use an iptables-based firewall

firewall_driver = iptables_hybrid

Note that I tried to run the su command directly using sudo and, for some reason, it failed for me. An alternative is to use “sudo su” (to get root access) and then populate the database using the config files above. Run the following sequence to instantiate the database:

sudo su -

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Start all the Neutron services:

sudo service neutron-* restart

Edit the Nova configuration file:

sudo vi /etc/nova/nova.conf

#Tell nova how to get in touch with neutron, to get network updates

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = MINE_PASS

service_metadata_proxy = True

metadata_proxy_shared_secret = MINE_PASS

Restart Nova services:

sudo service nova-* restart

@neutron

Install the required services:

sudo apt install neutron-plugin-ml2 \

neutron-l3-agent neutron-dhcp-agent \

neutron-metadata-agent neutron-openvswitch-agent

Run the following Open vSwitch commands to create the required bridges.

Create a bridge named “br-ex”; this will connect OS to the external network:

sudo ovs-vsctl add-br br-ex

Add the ens10 interface as a port on the br-ex bridge. In my environment, ens10 is the interface connected to the external network. You should change it as per your environment.

sudo ovs-vsctl add-port br-ex ens10

The next bridge is used by the VLAN networks for the customer networks in OS. Run the following command to create the bridge.

sudo ovs-vsctl add-br br-vlan

Add the ens9 interface as a port on the br-vlan bridge. In my environment, ens9 is the interface connected to the tunnel network. You should change it as per your environment.

sudo ovs-vsctl add-port br-vlan ens9

For our Open vSwitch configuration to persist beyond server reboots, we need to configure the interface file accordingly.

sudo vi /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface

# No Change

auto lo

iface lo inet loopback

#No Change on management network

auto ens3

iface ens3 inet static

address 10.30.100.216

netmask 255.255.255.0

# Add the br-vlan bridge

auto br-vlan

iface br-vlan inet manual

up ifconfig br-vlan up

# Configure ens9 to work with OVS

auto ens9

iface ens9 inet manual

up ip link set dev $IFACE up

down ip link set dev $IFACE down

# Add the br-ex bridge and move the IP for the external network to the bridge

auto br-ex

iface br-ex inet static

address 172.16.8.216

netmask 255.255.255.0

gateway 172.16.8.254

dns-nameservers 8.8.8.8

# Configure ens10 to work with OVS. Remove the IP from this interface

auto ens10

iface ens10 inet manual

up ip link set dev $IFACE up

down ip link set dev $IFACE down

Reboot to ensure the new configuration is fully applied:

sudo reboot

Configure the Neutron config file:

sudo vi /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

#Tell neutron how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Tell neutron how to access keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = MINE_PASS

Configure the Open vSwitch agent config file:

sudo vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

#Configure the section for OpenVSwitch

[ovs]

#Note that we are mapping alias(es) to the bridges. Later we will use these aliases (vlan,external) to define networks inside OS.

bridge_mappings = vlan:br-vlan,external:br-ex

[agent]

l2_population = True

[securitygroup]

#Use an iptables-based firewall

firewall_driver = iptables_hybrid
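To double-check the mapping before restarting the agent, it can help to split `bridge_mappings` into its alias and bridge parts and confirm that each bridge exists. This is a sketch only: `list_mappings` is a hypothetical helper name, and the existence check relies on `ovs-vsctl br-exists`, which is skipped when Open vSwitch is not installed on the machine where you run it.

```shell
#!/bin/sh
# Split "alias:bridge,alias:bridge" into one "alias bridge" pair per line.
list_mappings() {
    echo "$1" | tr ',' '\n' | while IFS=: read -r net_alias bridge; do
        echo "$net_alias $bridge"
    done
}

mappings="vlan:br-vlan,external:br-ex"
list_mappings "$mappings" | while read -r net_alias bridge; do
    if command -v ovs-vsctl >/dev/null 2>&1; then
        # br-exists exits 0 when the bridge is present.
        if ovs-vsctl --timeout=5 br-exists "$bridge"; then
            echo "$net_alias -> $bridge (found)"
        else
            echo "$net_alias -> $bridge (MISSING)"
        fi
    else
        echo "$net_alias -> $bridge (not checked)"
    fi
done
```

The aliases on the left are the names you will later use when defining networks inside OS, so a typo here tends to surface much later.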

Configure the Layer 3 Agent configuration file:

sudo vi /etc/neutron/l3_agent.ini

[DEFAULT]

#Tell the agent to use the OVS driver

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

#The official documentation requires this to be set to empty. (If you don’t set it to empty as shown below, sometimes your router ports in OS will not become Active.)

external_network_bridge =

Configure the DHCP Agent config file:

sudo vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

#Tell the agent to use the OVS driver

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

enable_isolated_metadata = True

Configure the Metadata Agent config file:

sudo vi /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = MINE_PASS

Start all Neutron services:

sudo service neutron-* restart

@compute1

Install the ml2 plugin and the openvswitch agent:

sudo apt install neutron-plugin-ml2 \

neutron-openvswitch-agent

Create the Open vSwitch bridge for tenant VLANs:

sudo ovs-vsctl add-br br-vlan

Add the ens9 interface as a port on the br-vlan bridge. In my environment, ens9 is the interface connected to the tunnel network. You should change it as per your environment.

sudo ovs-vsctl add-port br-vlan ens9

For our Open vSwitch configuration to persist beyond reboots, we need to configure the interface file accordingly.

sudo vi /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface

#No Change

auto lo

iface lo inet loopback

#No Change to management network

auto ens3

iface ens3 inet static

address 10.30.100.213

netmask 255.255.255.0

# Add the br-vlan bridge interface

auto br-vlan

iface br-vlan inet manual

up ifconfig br-vlan up

#Configure ens9 to work with OVS

auto ens9

iface ens9 inet manual

up ip link set dev $IFACE up

down ip link set dev $IFACE down

Reboot to ensure the new network configuration is applied successfully:

sudo reboot

Configure the Neutron config file:

sudo vi /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

#Tell neutron component how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Configure access to keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = MINE_PASS

Configure the Nova config file:

sudo vi /etc/nova/nova.conf

#Tell nova how to access neutron for network topology updates

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = MINE_PASS

Configure the Open vSwitch agent configuration:

sudo vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

#Here we are mapping the alias vlan to the bridge br-vlan

[ovs]

bridge_mappings = vlan:br-vlan

[agent]

l2_population = True

[securitygroup]

firewall_driver = iptables_hybrid

It’s a good idea to reboot the compute at this point. (I was getting connectivity issues without rebooting. Let me know how it goes for you.)

sudo reboot

Start all Neutron services:

sudo service neutron-* restart

Verify Operation

@controller

Log in to the command line:

source ~/keystonerc_admin

Run the following command to list the Neutron agents. Ensure the “Alive” status is “True” and “State” is “UP” as shown below:

openstack network agent list

+--------------------------------------+--------------------+-----------------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+-----------------------+-------------------+-------+-------+---------------------------+

| 84d81304-1922-47ef-8b8e-c49f83cff911 | Metadata agent | neutron | None | True | UP | neutron-metadata-agent |

| 93741a55-54af-457e-b182-92e15d77b7ae | L3 agent | neutron | None | True | UP | neutron-l3-agent |

| a3c9c1e5-46c3-4649-81c6-dc4bb9f35158 | Open vSwitch agent | neutron | None | True | UP | neutron-openvswitch-agent |

| ba9ce5bb-6141-4fcc-9379-c9c20173c382 | DHCP agent | neutron | nova | True | UP | neutron-dhcp-agent |

| e458ba8a-8272-43bb-bb83-ca0aae48c22a | Open vSwitch agent | compute1 | None | True | UP | neutron-openvswitch-agent |

+--------------------------------------+--------------------+-----------------------+-------------------+-------+-------+---------------------------+

It’s becoming a bit of a drag, I know. But hang on: we’re almost there.

Up to this point, our interactions with OpenStack have taken place in a mostly black-and-white environment. It’s time to add some color to this relationship and see what we can achieve by pressing the right buttons.

E. Horizon

Horizon is the component that handles the graphical user interface for OpenStack. It’s simple and sweet — and so is configuring it.

Perform the following configuration to deploy Horizon:

@controller

Install the software:

sudo apt install openstack-dashboard

Update the configuration file. Search for these entries in the file and replace them in place to avoid any duplicates:

sudo vi /etc/openstack-dashboard/local_settings.py

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

TIME_ZONE = "TIME_ZONE"

Replace TIME_ZONE with an appropriate time zone identifier. For more information, see the list of time zones.

Start the dashboard service:

sudo service apache2 reload

Verify Operation

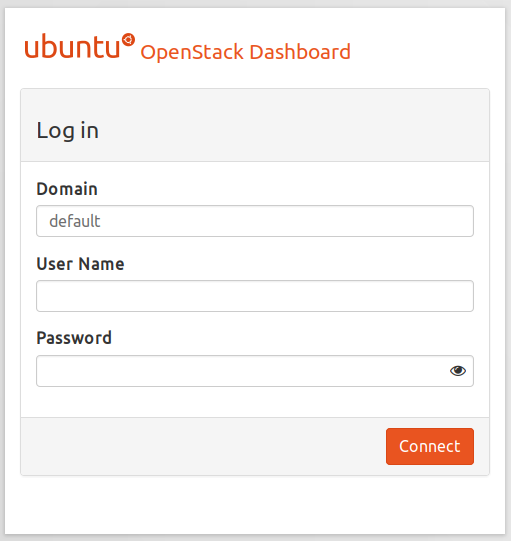

Using a computer that has access to the controller, open the following URL in the browser:

http://controller/horizon

Please replace controller in the above URL with the controller IP if you cannot resolve the controller by its alias. So in my case the URL will become: http://10.30.1.215/horizon
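Before opening a browser, you can also check from the command line that Apache is serving the dashboard. The sketch below assumes `curl` is installed; `horizon_url` and `check_horizon` are hypothetical helper names, and the host can be either the `controller` alias or its IP.

```shell
#!/bin/sh
# Build the dashboard URL for a given host name or IP.
horizon_url() {
    echo "http://$1/horizon"
}

# Print the HTTP status code returned for the dashboard URL.
check_horizon() {
    curl -s -o /dev/null -w '%{http_code}\n' --max-time 10 "$(horizon_url "$1")"
}

# Example (run from a machine that can reach the controller):
#   check_horizon controller
```

A 200 or a redirect (3xx) code suggests the dashboard is being served; anything else is a hint to look at the Apache logs on the controller.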

You should see a login screen similar to the one below

OpenStack Dashboard login screen

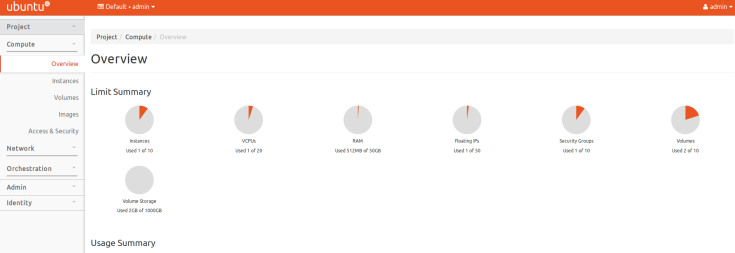

Enter admin as the username and the password you used to set up the user. In my case, it is MINE_PASS. If you are successfully logged in and see an interface similar (not necessarily the same) to the one below then your Horizon dashboard is working just fine.

OpenStack Dashboard

Recap:

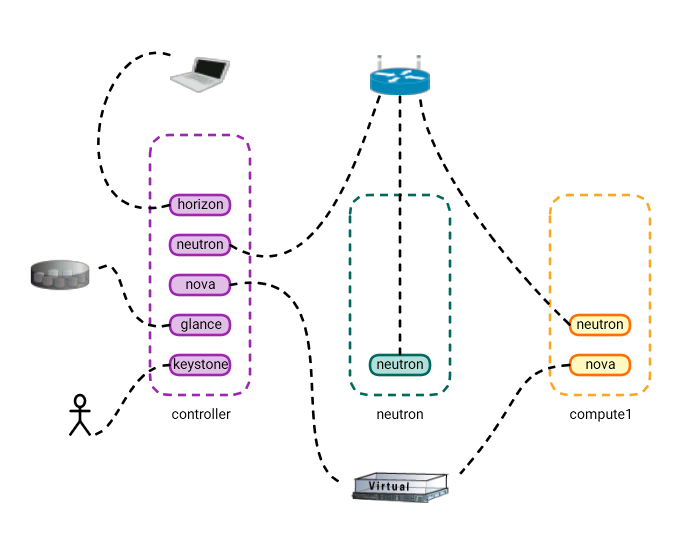

Look at the diagram below. Here’s what we’ve achieved so far.

Next:

- We will learn about OpenStack’s block storage component…

- …and its orchestration service

- The fun continues!

Once again thanks for reading. If you have any questions/comments please comment below so everyone can benefit from the discussion.

This post first appeared on the WhatCloud blog. Superuser is always interested in community content, email: editorATsuperuser.org.
