This post originally ran on Nathan Kinder’s blog “Orderly Chaos”. Nathan is a Software Engineering Manager at Red Hat, where he manages the development of the identity and security related components of the Red Hat Enterprise Linux OpenStack Platform, Red Hat Directory Server, and Red Hat Certificate System products. His current teams regularly contribute to the Keystone, Barbican, and Designate projects. He has been a developer on the 389 Directory Server project since its inception, and has a long history of working with LDAP and X.509 certificate management.

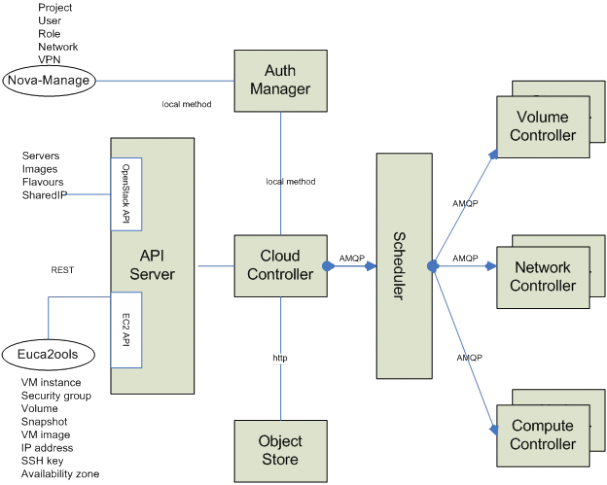

OpenStack uses message queues for communication between services. The messages sent through the message queues are used for command and control operations as well as notifications. For example, nova-scheduler uses the message queues to control instances on nova-compute nodes. This image from Nova’s developer documentation gives a high-level idea of how message queues are used:

It’s obvious that the messages being sent are critical to the functioning of an OpenStack deployment. Services act on the messages they receive, which means that the contents of each message need to be trusted. This calls for secure messaging. Before discussing how messages can be secured, we need to define what makes a message secure.

A secure message has integrity and confidentiality. Message integrity means that the sender has been authenticated by the recipient and that the message is tamper-proof. Think of this like an imprinted wax seal on an envelope. The imprint is used to identify the sender and ensure that it is authentic. An unbroken seal indicates that the contents have not been tampered with after the sender sealed it. This is usually accomplished by computing a digital signature over the contents of the message. Message confidentiality means that the message is only readable by its intended recipient. This is usually accomplished by using encryption to protect the message contents.
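To make the integrity half concrete, here is a minimal sketch using a keyed hash (HMAC) from the Python standard library. The key, the message text, and the choice of SHA-256 are assumptions made for illustration only.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Return an HMAC-SHA256 tag over the message (the 'wax seal')."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(key, message), tag)


shared_key = b"example-shared-key"  # hypothetical key known to both parties
original = b"reboot instance 42"    # hypothetical message
tag = sign(shared_key, original)

intact_ok = verify(shared_key, original, tag)                 # seal unbroken
tampered_ok = verify(shared_key, b"delete instance 42", tag)  # seal broken
```

Only a party that knows the key can produce a tag that verifies, and any change to the message invalidates the tag.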

Messages are not well protected in OpenStack today. Once a message is on the queue, no further authorization checks of that message are performed. In many OpenStack deployments, the only control over who can put messages on the queue is basic network isolation. Anyone with network access to the message broker can place messages on a queue.

It is possible to configure the message broker for authentication. This authenticates the sender of the message to the broker itself. Rules can then be defined to restrict who can put messages on specific queues. While this authentication is a good thing, it leaves a lot to be desired:

- The sender doesn’t know who it is really talking to, as the broker is not authenticated to the sender.

- Messages are not protected from tampering or eavesdropping.

- The recipient is unable to authenticate the sender, allowing one sender to impersonate another.

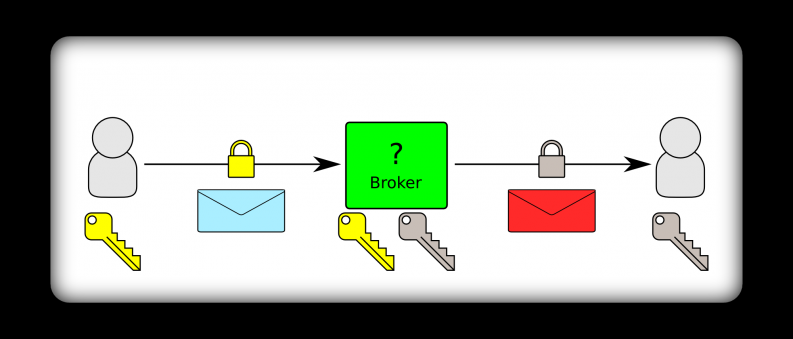

SSL/TLS can be enabled on the message broker to protect the transport. This buys us a few things over the authentication described above. The broker is now authenticated to the sender by virtue of certificate trust and validation. Messages are also protected from eavesdropping and tampering between the sender and the broker as well as between the recipient and the broker. This still leaves us with a few security gaps:

- Messages are not protected from tampering or eavesdropping by the broker itself.

- The recipient is still unable to authenticate the sender.

Utilizing the message broker security described above looks like this:

You can see that what is protected here is the transport between each communicating party and the broker itself. There is no guarantee that the message going into the broker is the same when it comes out of the broker.

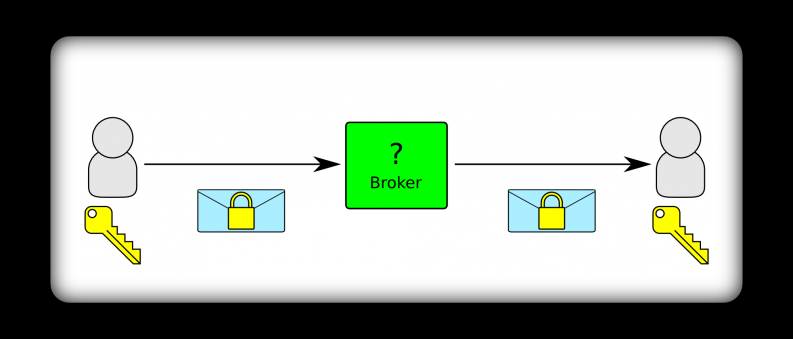

Kite is designed to improve upon the existing message broker security by establishing a trust relationship between the sender and recipient that can be used to protect the messages themselves. This trust is established by knowledge of shared keys. Kite is responsible for the generation and secure distribution of signing and encryption keys to communicating parties. The keys used to secure messages are only known by the sender, recipient, and Kite itself. Once these keys are distributed to the communicating parties, they can be used to ensure message integrity and confidentiality. Sending a message would look like this:

You can see that the message itself is what is protected, and doesn’t rely on protection of the transport. The broker is unable to view or manipulate the contents of the message since it does not have the keys used to protect it.

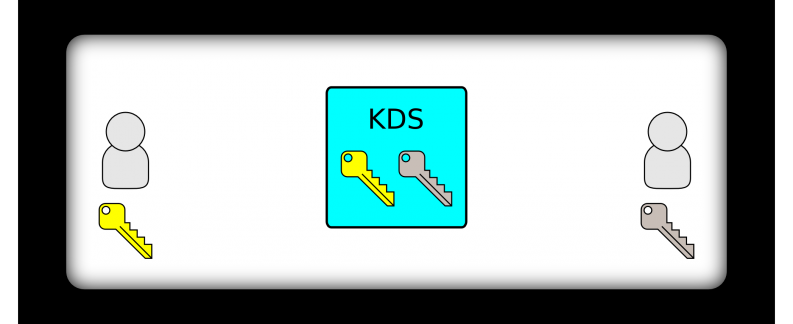

In order for Kite to securely distribute keys to the communicating parties, a long-term shared secret needs to be established between Kite and each individual communicating party. The long-term shared secret allows Kite and an individual party to trust each other by proving knowledge of the shared secret. Once a long-term shared secret is established, it is never sent over the network.

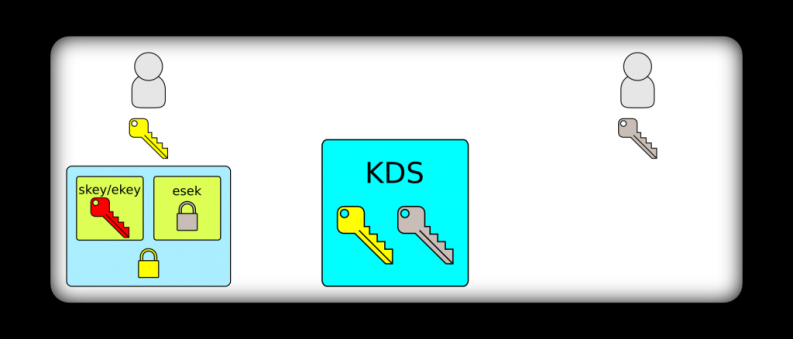

In the diagram below, we can see that two parties each have a unique long-term shared secret that is only known by themselves and Kite, which is depicted as the Key Distribution Service (KDS):

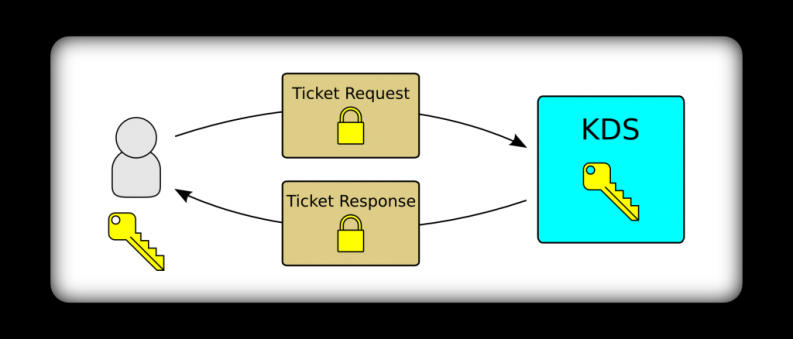

When one party wants to send a message to another party, it requests a ticket from Kite. A ticket request is signed by the requestor using its long-term shared secret. This allows Kite to validate the request by checking the signature using the long-term shared secret of the requesting party. This signature serves to authenticate the requestor to Kite and ensure that the request has not been tampered with. Conceptually, it looks like this:

When Kite receives a valid ticket request, it generates a new set of signing and encryption keys. These keys are only for use between two specific parties, and only for messages being sent in one direction. The actual contents of the ticket request are shown below:

request:

    {
        "metadata": <Base64 encoded metadata object>,
        "signature": <HMAC signature over metadata>
    }

metadata:

    {
        "source": "scheduler.host.example.com",
        "destination": "compute.host.example.com",
        "timestamp": "2012-03-26T10:01:01.720000",
        "nonce": 1234567890
    }

The contents of the ticket request are used by Kite to generate the signing and encryption keys. The timestamp and nonce are present to allow Kite to check for replay attacks.
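As a rough sketch of the request format above, the following Python builds and checks a signed ticket request. The function names are hypothetical, and several details are assumptions the post does not specify: HMAC-SHA256 as the signature algorithm, the signature being computed over the Base64 encoded metadata, and the signature itself being Base64 encoded.

```python
import base64
import hashlib
import hmac
import json
import os
from datetime import datetime, timezone


def build_ticket_request(source: str, destination: str, source_key: bytes) -> dict:
    """Build a request signed with the requestor's long-term shared secret."""
    metadata = {
        "source": source,
        "destination": destination,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "nonce": int.from_bytes(os.urandom(8), "big"),  # random 64-bit value
    }
    encoded = base64.b64encode(json.dumps(metadata).encode("utf-8"))
    # Assumption: the HMAC covers the Base64 encoded metadata bytes.
    signature = hmac.new(source_key, encoded, hashlib.sha256).digest()
    return {
        "metadata": encoded.decode("ascii"),
        "signature": base64.b64encode(signature).decode("ascii"),
    }


def verify_ticket_request(request: dict, source_key: bytes) -> bool:
    """What the key distribution side would do: recompute and compare."""
    encoded = request["metadata"].encode("ascii")
    expected = hmac.new(source_key, encoded, hashlib.sha256).digest()
    return hmac.compare_digest(expected, base64.b64decode(request["signature"]))


scheduler_key = os.urandom(32)  # stand-in for the long-term shared secret
request = build_ticket_request(
    "scheduler.host.example.com", "compute.host.example.com", scheduler_key
)
request_ok = verify_ticket_request(request, scheduler_key)
```

A real deployment would also track recent nonces and reject stale timestamps to detect replays, which this sketch leaves out.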

For signing and encryption key generation, Kite uses the HMAC-based Key Derivation Function (HKDF) as described in RFC 5869. The first thing that Kite does is to generate an intermediate key. This intermediate key is generated by using the sender’s long-term shared secret and a random salt as inputs to the HKDF Extract function:

    intermediate_key = HKDF-Extract(salt, source_key)

The intermediate key, sender and recipient names (as provided in the ticket request), and a timestamp from Kite are used as inputs into the HKDF Expand function, which outputs the key material that is used as the signing and encryption keys:

    keys = HKDF-Expand(intermediate_key, info, key_size)
    info = "<source>,<dest>,<timestamp>"

Once the signing and encryption keys are generated, they are returned to the ticket requestor as a part of a response that is signed with the requestor’s long-term shared secret.
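The two HKDF steps can be sketched in plain Python following RFC 5869. This is an illustration rather than Kite's actual code: the hash function (SHA-256), the salt length, the per-key size, and the way the output is split into a signing key and an encryption key are all assumptions, and `derive_keys` is a hypothetical helper name.

```python
import hashlib
import hmac
import os

HASH = hashlib.sha256  # assumption: the post does not name the hash function


def hkdf_extract(salt: bytes, input_key: bytes) -> bytes:
    """RFC 5869 step 1: PRK = HMAC-Hash(salt, IKM)."""
    return hmac.new(salt, input_key, HASH).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """RFC 5869 step 2: stretch the PRK into `length` bytes of key material."""
    hash_len = HASH().digest_size
    if length > 255 * hash_len:
        raise ValueError("requested key material is too long")
    output, block, counter = b"", b"", 1
    while len(output) < length:
        # T(i) = HMAC-Hash(PRK, T(i-1) | info | i)
        block = hmac.new(prk, block + info + bytes([counter]), HASH).digest()
        output += block
        counter += 1
    return output[:length]


def derive_keys(source_key: bytes, source: str, dest: str, timestamp: str,
                key_size: int = 16):
    """Return (intermediate_key, signing_key, encryption_key)."""
    salt = os.urandom(16)  # random salt, as described in the text
    intermediate_key = hkdf_extract(salt, source_key)
    info = f"{source},{dest},{timestamp}".encode("utf-8")
    # Assumption: one Expand call yields both keys, split in half.
    material = hkdf_expand(intermediate_key, info, 2 * key_size)
    return intermediate_key, material[:key_size], material[key_size:]


intermediate, skey, ekey = derive_keys(
    b"scheduler-long-term-secret",  # hypothetical shared secret
    "scheduler.host.example.com",
    "compute.host.example.com",
    "2012-03-26T10:01:01.720000",
)
```

Because the salt is random, each ticket request produces a fresh intermediate key and therefore fresh signing and encryption keys, even for the same pair of parties.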

Since the ticket response is signed using the requestor’s long-term shared secret, the requestor is able to validate the ticket response truly came from Kite since nobody else has knowledge of the long-term shared secret. The contents of the ticket response are shown below:

response:

    {
        "metadata": <Base64 encoded metadata object>,
        "ticket": <Ticket object encrypted with source's key>,
        "signature": <HMAC signature over metadata + ticket>
    }

metadata:

    {
        "source": "scheduler.host.example.com",
        "destination": "compute.host.example.com",
        "expiration": "2012-03-26T11:01:01.720000"
    }

ticket:

    {
        "skey": <Base64 encoded message signing key>,
        "ekey": <Base64 encoded message encryption key>,
        "esek": <Key derivation info encrypted with destination's key>
    }

esek:

    {
        "key": <Base64 encoded intermediate key>,
        "timestamp": <Timestamp from KDS>,
        "ttl": <Time to live for the keys>
    }

We can see that the actual key material in ticket is encrypted using the requestor’s long-term shared secret. Only the requestor will be able to decrypt this portion of the response to extract the keys. We will discuss the esek portion of the response in more detail when we get to sending messages. For now, it is important to note that esek is an encrypted payload that Kite created for the destination party. It is encrypted using the destination party’s long-term shared secret, so it is an opaque blob as far as the source party is concerned. A conceptual diagram of the ticket response should make this clear:

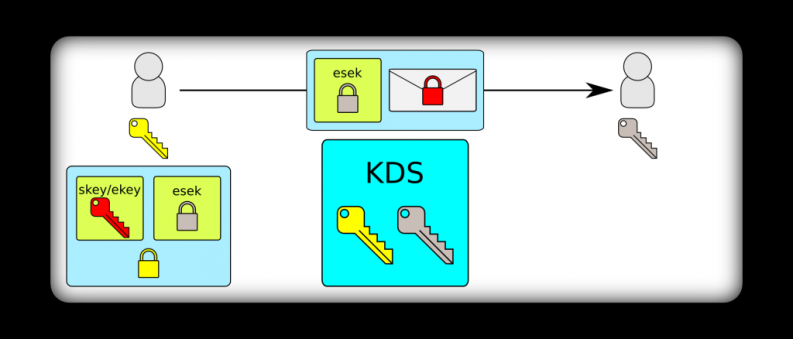

When sending a secured message, the sender will use an envelope that contains information the recipient needs to derive the keys, a signature, and a flag indicating if the message is encrypted. The envelope looks like this:

RPC_Message:

    {
        _VERSION_KEY: _RPC_ENVELOPE_VERSION,
        _METADATA_KEY: MetaData,
        _MESSAGE_KEY: Message,
        _SIGNATURE_KEY: Signature
    }

MetaData:

    {
        'source': <sender>,
        'destination': <receiver>,
        'timestamp': <timestamp>,
        'nonce': <64bit unsigned number>,
        'esek': <Key derivation info encrypted with destination's key>,
        'encryption': <true | false>
    }

The following diagram shows how the envelope is used when sending a message:

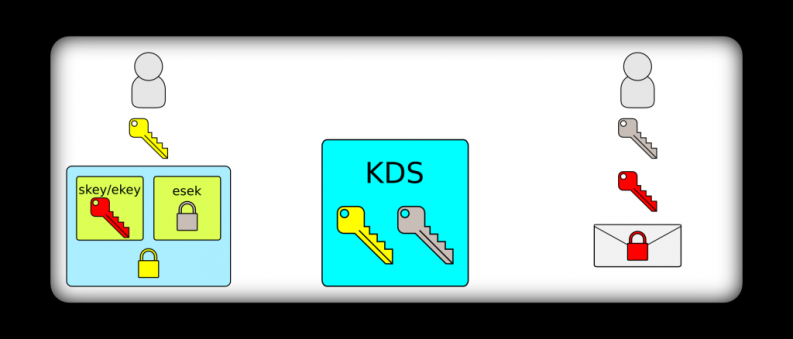
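A sketch of how a sender might assemble and sign such an envelope is shown here. The dictionary keys (`version`, `metadata`, `message`, `signature`), the version string, the JSON serialization, and the choice to sign the metadata concatenated with the message using HMAC-SHA256 are all assumptions for illustration. The real constants live in the messaging library and are not spelled out in this post. The `esek` value is treated as an opaque string copied from the ticket.

```python
import base64
import hashlib
import hmac
import json
import os
from datetime import datetime, timezone


def build_envelope(source: str, destination: str, message: dict,
                   skey: bytes, esek: str) -> dict:
    """Wrap an unencrypted message in a signed envelope."""
    metadata = {
        "source": source,
        "destination": destination,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "nonce": int.from_bytes(os.urandom(8), "big"),
        "esek": esek,         # opaque to the sender
        "encryption": False,  # this sketch signs but does not encrypt
    }
    metadata_json = json.dumps(metadata, sort_keys=True)
    message_json = json.dumps(message, sort_keys=True)
    signed_bytes = (metadata_json + message_json).encode("utf-8")
    signature = hmac.new(skey, signed_bytes, hashlib.sha256).digest()
    return {
        "version": "1.0",  # placeholder version string
        "metadata": metadata_json,
        "message": message_json,
        "signature": base64.b64encode(signature).decode("ascii"),
    }


def verify_envelope(envelope: dict, skey: bytes) -> bool:
    """Recompute the signature over metadata + message and compare."""
    signed_bytes = (envelope["metadata"] + envelope["message"]).encode("utf-8")
    expected = hmac.new(skey, signed_bytes, hashlib.sha256).digest()
    return hmac.compare_digest(expected, base64.b64decode(envelope["signature"]))


signing_key = os.urandom(16)  # stand-in for the skey from a ticket
envelope = build_envelope(
    "scheduler.host.example.com", "compute.host.example.com",
    {"method": "run_instance"}, signing_key, "<opaque esek from ticket>",
)
envelope_ok = verify_envelope(envelope, signing_key)
```

Covering the metadata with the signature matters as much as covering the message, since the metadata names the parties and carries the esek.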

Upon receipt of a secured message, the recipient can decrypt esek using its long-term shared secret. It can trust that the contents of esek are from Kite since nobody else has knowledge of the shared secret. The contents of esek along with the source and destination from the envelope contain all of the information that is needed to derive the signing and encryption keys.

esek:

    {
        "key": <Base64 encoded intermediate key>,
        "timestamp": <Timestamp from KDS>,
        "ttl": <Time to live for the keys>
    }

    HKDF-Expand(intermediate_key, info, key_size)
    info = "<source>,<dest>,<timestamp>"

We perform this derivation step on the recipient to force the recipient to validate the source and destination from the metadata in the message envelope. If the source and destination were somehow modified, the correct keys could not be derived from esek. This provides the recipient with a guarantee that Kite generated the signing and encryption keys specifically for the correct source and destination.
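To see why a modified source or destination breaks the derivation, consider this small sketch of the recipient side. It uses a simplified single-block form of HKDF-Expand, which matches RFC 5869 only when the requested length does not exceed the hash output size. SHA-256, the 32-byte output, the all-zero intermediate key, and the attacker host name are assumptions for illustration.

```python
import hashlib
import hmac


def hkdf_expand_one_block(prk: bytes, info: bytes, length: int) -> bytes:
    """HKDF-Expand restricted to a single output block (length <= 32)."""
    if length > hashlib.sha256().digest_size:
        raise ValueError("this simplified form only supports one block")
    # T(1) = HMAC-Hash(PRK, info | 0x01)
    return hmac.new(prk, info + b"\x01", hashlib.sha256).digest()[:length]


def recipient_keys(intermediate_key: bytes, source: str, dest: str,
                   timestamp: str) -> bytes:
    """Derive key material from esek contents plus envelope metadata."""
    info = f"{source},{dest},{timestamp}".encode("utf-8")
    return hkdf_expand_one_block(intermediate_key, info, 32)


intermediate_key = bytes(32)  # placeholder for the key decrypted from esek
kds_timestamp = "2012-03-26T10:01:01.720000"

genuine = recipient_keys(intermediate_key, "scheduler.host.example.com",
                         "compute.host.example.com", kds_timestamp)
spoofed = recipient_keys(intermediate_key, "attacker.host.example.com",
                         "compute.host.example.com", kds_timestamp)
keys_match = genuine == spoofed  # the spoofed source yields different keys
```

Since the spoofed envelope leads to different key material, a signature made with the genuine keys will not verify against it.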

Once the signing and encryption keys are derived, the recipient can validate the signature and decrypt the message if necessary. The end result after deriving the signing and encryption keys looks like this:

Signing and encryption keys are only valid for a limited period of time. The validity period is a policy decision made by the person deploying Kite. It represents the amount of exposure you would have if a particular pair of signing and encryption keys were compromised, so in general it is advisable to keep it short.

This validity period is defined by the expiration timestamp in the ticket response for the sender, and the timestamp + ttl in esek for the recipient. While keys are still valid, a sender can reuse them without needing to contact Kite. The esek payload for the recipient is still sent with every message, and the recipient derives the signing and encryption keys for every message. When the keys expire, the sender needs to send a new ticket request to Kite to get a new set of keys.
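The two validity checks can be sketched as follows. The function names are hypothetical, and the sketch assumes that timestamps are ISO 8601 strings in a common time zone and that `ttl` is expressed in seconds. Neither is stated in the post.

```python
from datetime import datetime, timedelta


def sender_keys_valid(expiration: str, now: datetime) -> bool:
    """Sender side: compare against the expiration in the ticket response."""
    return now < datetime.fromisoformat(expiration)


def recipient_keys_valid(timestamp: str, ttl_seconds: int, now: datetime) -> bool:
    """Recipient side: compare against timestamp + ttl from esek."""
    issued = datetime.fromisoformat(timestamp)
    return now < issued + timedelta(seconds=ttl_seconds)


# Values modeled on the examples earlier in the post, with a one hour ttl.
during = datetime(2012, 3, 26, 10, 30, 0)
after = datetime(2012, 3, 26, 11, 1, 2)

sender_ok = sender_keys_valid("2012-03-26T11:01:01.720000", during)
recipient_ok = recipient_keys_valid("2012-03-26T10:01:01.720000", 3600, during)
recipient_late = recipient_keys_valid("2012-03-26T10:01:01.720000", 3600, after)
```

When the sender-side check fails, the sender would request a new ticket. When the recipient-side check fails, the recipient would reject the message.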

Kite also supports sending secure group messages, though the workflow differs slightly from direct messaging. Groups can be defined in Kite, but a group does not have a long-term shared secret associated with it. When a ticket is requested with a group as the destination, Kite will generate a temporary key that is associated with the group if a current key does not already exist. This group key is used in place of the destination’s long-term shared secret. When a recipient receives a message where the destination is a group, it contacts Kite to request the group key. Kite will deliver the group key to a group member encrypted using the member’s long-term shared secret. A group member can then use the group key to access the information needed to derive the signing and encryption keys for the secured group message.

The usage of a temporary group key avoids the need to have a long-term shared secret shared amongst all of the group members. If a group member becomes compromised, they can be removed from the group in Kite to cut off access to any future group keys. Using a short group key lifetime limits the exposure in this situation, and it also doesn’t require changing the shared secret across all group members since a new shared secret will be generated upon expiration.

There is one flaw in the group messaging case that is important to point out. All members of a group will have access to the same signing and encryption keys once they have received a message. This allows a group member to impersonate the original sender who requested the keys. This means one compromised group member is able to send falsified messages to other members within the same group. This is a limitation due to the use of symmetric cryptography. It would be possible to improve upon this by using public-key cryptography for message signing. There is a session scheduled at the Juno design summit to discuss this.
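The flaw is easy to demonstrate with a symmetric signature. In this sketch the key, the host name, and the message format are hypothetical. The point is only that an HMAC tag cannot tell the original sender apart from any other holder of the same key.

```python
import hashlib
import hmac


def sign(key: bytes, sender: str, body: str) -> bytes:
    """HMAC-SHA256 over the claimed sender and the message body."""
    return hmac.new(key, f"{sender}|{body}".encode("utf-8"),
                    hashlib.sha256).digest()


def verify(key: bytes, sender: str, body: str, tag: bytes) -> bool:
    return hmac.compare_digest(sign(key, sender, body), tag)


# Every group member ends up deriving the same signing key.
group_signing_key = b"\x01" * 32  # hypothetical derived key

# A compromised group member signs a message while claiming to be the
# scheduler that originally requested the keys.
forged_tag = sign(group_signing_key, "scheduler.host.example.com",
                  "terminate all instances")

# Another member verifies it with the same key and cannot tell the difference.
forgery_accepted = verify(group_signing_key, "scheduler.host.example.com",
                          "terminate all instances", forged_tag)
```

With public-key signatures, only the holder of the private key could produce a valid signature, which is why that approach would close this gap.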

There are a number of areas where Kite could be improved in the future. Improving the group messaging solution as mentioned above is an obvious area to investigate. It would also be a good idea to look into using Barbican to store the long-term shared secrets. There have been brief discussions around adding policies to Kite to be able to restrict which parties are allowed to request tickets for certain recipients.

Kite is currently being implemented as a standalone service within the Key Management (Barbican) project. Patches are landing, and hopefully we get to something initially usable in the Juno timeframe. To utilize Kite, changes will also be needed in oslo.messaging. An ideal way of dealing with this would be to allow for “message security” plug-ins to Oslo. A Kite plug-in would allow services to use Kite for secure messages, but other plug-ins can be developed if alternate solutions come along (such as a public-key based solution as mentioned above). This would allow Oslo to remain relatively static in this area as capabilities around secure messaging change. There is a lot that can be done around secure messaging, and I think that Kite looks like a great step forward.

- Secure Messaging with Kite - May 22, 2014
