Kubernetes has changed the way software is developed and operated. As a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation, Kubernetes has become a dominant platform for running complex microservices. Its popularity stems from the fact that it meets the needs of several groups: businesses want to grow while paying less, DevOps teams want a stable platform that can run applications at scale, and developers want reliable, reproducible workflows to write, test, and debug code. Here is a good article to learn more about Kubernetes evolution and architecture.

One important aspect of managing a Kubernetes network is forwarding container ports internally and externally so that containers and Pods can communicate with one another properly. To manage such communications, Kubernetes distinguishes the following four networking models:

- Container-to-Container communications

- Pod-to-Pod communications

- Pod-to-Service communications

- External-to-internal communications

In this article, we dive into Pod-to-Pod communications by showing you ways in which Pods within a Kubernetes network can communicate with one another.

While Kubernetes is opinionated about how containers are deployed and operated, it is largely non-prescriptive about how the network in which Pods run should be designed. Kubernetes imposes the following fundamental requirements on any networking implementation (barring any intentional network segmentation policies):

- All Pods can communicate with all other Pods without NAT

- All nodes running Pods can communicate with all Pods (and vice versa) without NAT

- The IP address a Pod sees itself as is the same IP address other Pods see it as

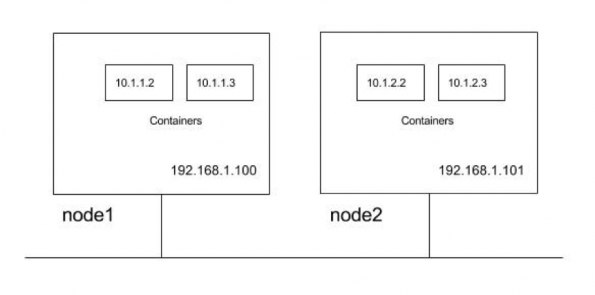

To illustrate these requirements, let us use a cluster with two nodes. The nodes are in subnet 192.168.1.0/24 and Pods use the 10.1.0.0/16 subnet, with 10.1.1.0/24 and 10.1.2.0/24 used for Pod IPs by node1 and node2 respectively.

From these requirements, the network must establish the following communication paths:

- Nodes should be able to talk to all Pods. For example, 192.168.1.100 should be able to reach 10.1.1.2, 10.1.1.3, 10.1.2.2 and 10.1.2.3 directly (without NAT)

- A Pod should be able to communicate with all nodes. For example, Pod 10.1.1.2 should be able to reach 192.168.1.100 and 192.168.1.101 without NAT

- A Pod should be able to communicate with all Pods. For example, 10.1.1.2 should be able to communicate with 10.1.1.3, 10.1.2.2 and 10.1.2.3 directly (without NAT)
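The addressing plan behind these paths can be checked mechanically. The Python sketch below uses only the standard `ipaddress` module and the example values from the text. Two things are assumptions for illustration: the mapping of node1 to 192.168.1.100 and node2 to 192.168.1.101, and the helper names `node_for_pod` and `check_addressing`. The sketch checks preconditions only: the Pod and node ranges do not overlap, each node's Pod subnet is disjoint from the others, and every Pod IP has exactly one owning node. It does not test actual reachability.

```python
import ipaddress

# Example topology from the text.
NODE_SUBNET = ipaddress.ip_network("192.168.1.0/24")
CLUSTER_POD_CIDR = ipaddress.ip_network("10.1.0.0/16")

# Assumed per-node assignment: node IP -> Pod subnet carved out of the /16.
NODE_POD_CIDRS = {
    ipaddress.ip_address("192.168.1.100"): ipaddress.ip_network("10.1.1.0/24"),  # node1
    ipaddress.ip_address("192.168.1.101"): ipaddress.ip_network("10.1.2.0/24"),  # node2
}

PODS = [ipaddress.ip_address(ip) for ip in ("10.1.1.2", "10.1.1.3", "10.1.2.2", "10.1.2.3")]


def node_for_pod(pod_ip):
    """Return the node whose Pod subnet contains pod_ip, or None (hypothetical helper)."""
    for node_ip, pod_cidr in NODE_POD_CIDRS.items():
        if pod_ip in pod_cidr:
            return node_ip
    return None


def check_addressing():
    """Assert the addressing preconditions and return a Pod -> node mapping."""
    # Pod and node address spaces must not overlap, or routing becomes ambiguous.
    assert not NODE_SUBNET.overlaps(CLUSTER_POD_CIDR)
    for node_ip, pod_cidr in NODE_POD_CIDRS.items():
        assert node_ip in NODE_SUBNET
        assert pod_cidr.subnet_of(CLUSTER_POD_CIDR)
    # Per-node Pod subnets must be disjoint so every Pod IP is unique cluster-wide.
    cidrs = list(NODE_POD_CIDRS.values())
    for i, a in enumerate(cidrs):
        for b in cidrs[i + 1:]:
            assert not a.overlaps(b)
    # Every Pod IP maps to exactly one node; no translation is needed to find it.
    return {str(p): str(node_for_pod(p)) for p in PODS}


print(check_addressing())
```

Unique, non-overlapping ranges are what let every component use the real Pod IP end to end. If the ranges overlapped, some form of translation would be needed to disambiguate addresses.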

While exploring these requirements, we will lay the foundation for how services are discovered and exposed. There are multiple ways to design a network that meets the Kubernetes networking requirements, with varying degrees of complexity and flexibility.

Pod-to-Pod Networking and Connectivity

Kubernetes does not set up the network itself; it offloads that job to CNI (Container Network Interface) plug-ins. Here is more info on CNI plug-in installation. Below are possible network implementation options, built with CNI plug-ins, that permit Pod-to-Pod communication while honoring the Kubernetes requirements:
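To make the hand-off concrete, the CNI specification defines the contract between a container runtime and a plug-in. The runtime executes the plug-in binary named by the configuration's `type`. It passes parameters through environment variables such as `CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS`, `CNI_IFNAME` and `CNI_PATH`, and writes the network configuration JSON to the plug-in's standard input. The Python sketch below only builds that invocation; it does not run anything. `/opt/cni/bin` is assumed as the plug-in directory because it is the conventional default. The container ID, namespace path and the function name `cni_add_invocation` are hypothetical.

```python
import json


def cni_add_invocation(net_conf, container_id, netns_path,
                       ifname="eth0", cni_path="/opt/cni/bin"):
    """Build the argv, environment and stdin for a CNI ADD call (not executed).

    net_conf is the parsed network configuration; its "type" names the plugin
    binary, which is looked up in cni_path (an assumed default location).
    """
    plugin = f"{cni_path}/{net_conf['type']}"  # e.g. /opt/cni/bin/bridge
    env = {
        "CNI_COMMAND": "ADD",             # attach the container to the network
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,          # path to the Pod's network namespace
        "CNI_IFNAME": ifname,             # interface name to create inside it
        "CNI_PATH": cni_path,             # where plugins (including IPAM) are found
    }
    stdin = json.dumps(net_conf).encode()  # configuration is passed on stdin as JSON
    return [plugin], env, stdin


argv, env, stdin = cni_add_invocation(
    {"cniVersion": "0.3.1", "name": "mynet", "type": "bridge"},
    container_id="example-container",    # hypothetical container ID
    netns_path="/var/run/netns/example",  # hypothetical namespace path
)
print(argv, env["CNI_COMMAND"])
```

A runtime would then execute `argv` with that environment merged into its own and feed `stdin` to the process. This is why swapping the bridge plug-in for Calico, Flannel or Weave requires no change to Kubernetes itself.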

- Layer 2 (switching) solution

- Layer 3 (routing) solution

- Overlay solutions

I- Layer 2 Solution

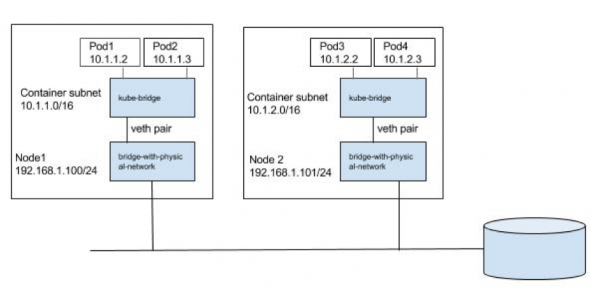

This is the simplest approach and should work well for small deployments. Pods and nodes should see the subnet used for Pod IPs as a single L2 domain. Pod-to-Pod communication (on the same host or across hosts) happens through ARP and L2 switching. We could use the bridge CNI plug-in to reuse an L2 bridge for Pod containers, with the configuration below on node1 (note the /16 subnet).

```json
{
  "name": "mynet",
  "type": "bridge",
  "bridge": "kube-bridge",
  "isDefaultGateway": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.1.0.0/16"
  }
}
```

kube-bridge needs to be pre-created so that ARP packets go out on the physical interface. To achieve this, we use a second bridge that has the physical interface attached and the node IP assigned, and hook kube-bridge to it through a veth pair.

If the bridge named in the configuration already exists, the bridge CNI plug-in reuses it instead of creating a new one.
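The "/16 subnet" note is what makes this a Layer 2 design. Before sending, a Pod's kernel checks whether the destination lies inside its interface's subnet (on-link) and, if so, resolves the destination's MAC with ARP and sends the frame directly. The simplified Python sketch below models only that check. The function name `next_hop` is hypothetical. The sketch assumes the Pod's address carries the prefix length of the IPAM subnet, as in the configuration above, and ignores any routes other than the default route that `isDefaultGateway` installs.

```python
import ipaddress


def next_hop(pod_interface, dst):
    """Simplified on-link decision for a Pod sending to dst.

    pod_interface is the Pod's address with the prefix length from IPAM,
    e.g. "10.1.1.2/16". Returns "arp" if dst is inside that subnet (resolve
    dst's MAC and deliver the frame directly), otherwise "gateway" (send via
    the default route).
    """
    iface = ipaddress.ip_interface(pod_interface)
    if ipaddress.ip_address(dst) in iface.network:
        return "arp"
    return "gateway"


# Layer 2 solution: the /16 makes every Pod on every node on-link.
print(next_hop("10.1.1.2/16", "10.1.2.2"))  # prints arp
# The same destination with a /24 prefix is off-link and goes to the gateway.
print(next_hop("10.1.1.2/24", "10.1.2.2"))  # prints gateway
```

With the /16, Pod1 (10.1.1.2) on node1 reaches Pod3 (10.1.2.2) on node2 purely through ARP and switching. With a /24, the same packet is handed to a gateway, so nodes then need routes to each other's Pod subnets.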

II- Layer 3 Solutions

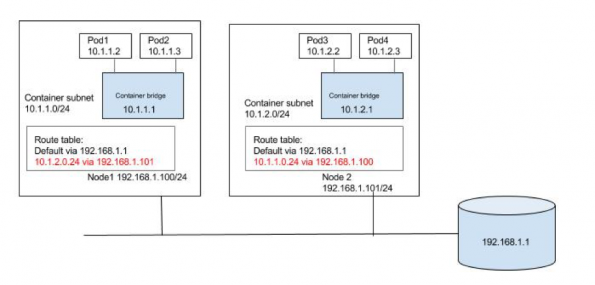

A more scalable approach is to use node routing rather than switching traffic to the Pods. We could use the bridge CNI plug-in to create a bridge for Pod containers with a gateway configured. For example, the configuration below can be used on node1 (note the /24 subnet).

```json
{
  "name": "mynet",
  "type": "bridge",
  "bridge": "kube-bridge",
  "isDefaultGateway": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.1.1.0/24"
  }
}
```
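Unlike the Layer 2 case, each node now needs its own configuration: the same bridge settings, but a different /24 in `ipam.subnet`. The Python sketch below generates one config per node from a node-to-subnet map. The map mirrors the two-node example, and the function name `bridge_conf` is hypothetical. In a real cluster, the per-node range might instead come from the Node object's `spec.podCIDR` when the controller manager allocates node CIDRs; treat that as one possible source, not a requirement.

```python
import ipaddress
import json

# Assumed per-node Pod subnets from the two-node example: node -> /24.
NODE_POD_CIDRS = {"node1": "10.1.1.0/24", "node2": "10.1.2.0/24"}


def bridge_conf(pod_cidr):
    """Return the bridge CNI config shown above, using this node's own subnet."""
    net = ipaddress.ip_network(pod_cidr)  # raises ValueError on a malformed CIDR
    return {
        "name": "mynet",
        "type": "bridge",
        "bridge": "kube-bridge",
        "isDefaultGateway": True,
        "ipam": {"type": "host-local", "subnet": str(net)},
    }


# One JSON document per node; only ipam.subnet differs between them.
configs = {node: json.dumps(bridge_conf(cidr), indent=2)
           for node, cidr in NODE_POD_CIDRS.items()}
print(configs["node2"])
```

Because `host-local` only tracks allocations on its own node, giving each node a disjoint /24 is what keeps Pod IPs unique across the cluster.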

So how does Pod1 with IP 10.1.1.2 running on node1 communicate with Pod3 with IP 10.1.2.2 running on node2? We need a way for nodes to route traffic to the Pod subnets of other nodes.

We could populate the default gateway router with routes for the Pod subnets, as shown in the diagram. Routes to 10.1.1.0/24 and 10.1.2.0/24 are configured to go through node1 and node2 respectively. Keeping the route tables updated as nodes are added to or deleted from the cluster can be automated. We can also use container networking solutions that do this job on public clouds, for example Flannel's backends for AWS and GCE, Weave's AWS-VPC mode, etc.

Alternatively, each node can be populated with routes to the other nodes' Pod subnets, as shown in the diagram below. Again, updating the routes as nodes are added to or deleted from the cluster can be automated in small or static environments, or container networking solutions like Calico or Flannel's host-gw backend can be used.

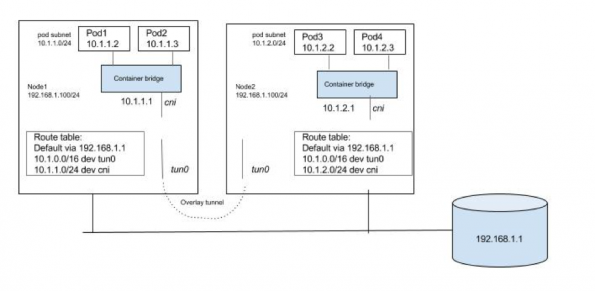
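The per-node variant boils down to an ordinary longest-prefix-match lookup on each hop. The Python sketch below models the route tables of node1 and node2 and traces Pod1 (10.1.1.2) sending to Pod3 (10.1.2.2). Several values are assumptions: node1 is taken to be 192.168.1.100 and node2 192.168.1.101, and the interface name `eth0` is hypothetical. No default route is modeled. The same lookup would apply unchanged to the router-based variant, with the tables living on the default gateway router instead of the nodes.

```python
import ipaddress

# Hypothetical route tables for the "routes on each node" variant.
# Each entry is (destination prefix, next hop); "kube-bridge" and "eth0"
# mark directly connected subnets. No default route is modeled.
ROUTES = {
    "node1": [
        ("10.1.1.0/24", "kube-bridge"),    # local Pods
        ("10.1.2.0/24", "192.168.1.101"),  # node2's Pod subnet
        ("192.168.1.0/24", "eth0"),        # node subnet
    ],
    "node2": [
        ("10.1.2.0/24", "kube-bridge"),    # local Pods
        ("10.1.1.0/24", "192.168.1.100"),  # node1's Pod subnet
        ("192.168.1.0/24", "eth0"),        # node subnet
    ],
}


def lookup(table, dst):
    """Longest-prefix match of dst against table; returns the next hop or None."""
    dst_ip = ipaddress.ip_address(dst)
    best = None
    for prefix, hop in table:
        net = ipaddress.ip_network(prefix)
        if dst_ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best[1] if best else None


# Pod1 (10.1.1.2/24) sends to Pod3: off-link, so it goes to its gateway on
# node1's kube-bridge, and node1 consults its own table.
print(lookup(ROUTES["node1"], "10.1.2.2"))  # prints 192.168.1.101
# node2 receives the packet and delivers it locally.
print(lookup(ROUTES["node2"], "10.1.2.2"))  # prints kube-bridge
```

Adding a third node means adding one entry to every existing table (or to the router). That bookkeeping is what tools such as Calico or Flannel's host-gw backend automate.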

III- Overlay Solutions

Unless there is a specific reason to use an overlay solution, one generally does not make sense given the Kubernetes networking model, which lacks support for multiple networks. Kubernetes requires that nodes be able to reach each Pod, even though the Pods are in an overlay network. Similarly, Pods should be able to reach any node. We therefore need host routes on the nodes so that Pods and nodes can talk to each other.

Since inter-host Pod-to-Pod traffic should not be visible in the underlay, we need a virtual (logical) network that is overlaid on the underlay. Pod-to-Pod traffic is encapsulated at the source node, and the encapsulated packet is forwarded to the destination node, where it is decapsulated. A solution can be built around any existing Linux encapsulation mechanism. We need a tunnel interface (with VXLAN, GRE, or similar encapsulation) and a host route such that inter-node Pod-to-Pod traffic is routed through the tunnel interface.

This is a very generalized view of how an overlay solution can meet the Kubernetes network requirements. Unlike the two previous solutions, the overlay approach takes significant effort: setting up tunnels, populating the FDB (forwarding database), and so on. Existing container networking solutions like Weave and Flannel can be used to set up a Kubernetes deployment with overlay networks. Here is a good article for reading more on similar Kubernetes topics.
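To show what "encapsulated at the source node" means for VXLAN, the Python sketch below builds and strips the 8-byte VXLAN header defined in RFC 7348. The header consists of a flags byte with the I bit (0x08) set, 24 reserved bits, a 24-bit VNI, and 8 more reserved bits. It also picks the destination node for a Pod IP. Some things are deliberately not modeled. The outer Ethernet, IP and UDP headers are normally built by the kernel's tunnel interface. The subnet-to-node map is a simplification of a real VXLAN FDB, which maps inner MAC addresses to remote tunnel endpoint (VTEP) IPs. The function and table names, the VNI value 1, and the node IP mapping are all hypothetical.

```python
import ipaddress
import struct
from typing import Optional, Tuple

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN port (RFC 7348); some stacks default to another
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the 8-byte VXLAN header (flags, 24 reserved bits, 24-bit VNI, 8 reserved bits)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    header = struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes) -> Tuple[int, bytes]:
    """Strip the VXLAN header from a UDP payload; return (vni, inner_frame)."""
    if len(payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8, payload[8:]


# Hypothetical, simplified tunnel map: remote Pod subnet -> node (VTEP) IP.
POD_SUBNET_TO_NODE = {
    ipaddress.ip_network("10.1.1.0/24"): "192.168.1.100",
    ipaddress.ip_network("10.1.2.0/24"): "192.168.1.101",
}


def remote_vtep(dst_pod_ip: str) -> Optional[str]:
    """Return the node that should receive the encapsulated packet for dst_pod_ip."""
    ip = ipaddress.ip_address(dst_pod_ip)
    for subnet, node_ip in POD_SUBNET_TO_NODE.items():
        if ip in subnet:
            return node_ip
    return None


# Pod1 on node1 sends to Pod3 (10.1.2.2): encapsulate, then send over UDP to node2.
frame = b"<inner Ethernet frame>"  # placeholder bytes, not a real frame
payload = vxlan_encap(frame, vni=1)
print(remote_vtep("10.1.2.2"), VXLAN_UDP_PORT, len(payload))
assert vxlan_decap(payload) == (1, frame)  # node2 recovers the original frame
```

The underlay only ever sees node-to-node UDP packets, which is why the Pod subnets never need to be routable there. The cost is the extra header on every packet and the state (tunnels, FDB entries) that must be kept in sync as nodes come and go.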

Conclusion

In this article, we covered how cross-node Pod-to-Pod networking works and laid the foundation for how services are exposed within the cluster to Pods and externally. What makes Kubernetes networking interesting is that the design of core concepts like services and network policy permits several possible implementations. Though some core components and add-ons provide default implementations, they are replaceable, and a whole ecosystem of network solutions plugs neatly into Kubernetes networking semantics. Now that you have learned how Pods inside a Kubernetes cluster communicate and exchange data, you can move on to other Kubernetes networking models such as Container-to-Container or Pod-to-Service communications. Here is a good article for learning more advanced topics on Kubernetes development.

About the Author

This article is written by Matt Zand, who is the founder of High School Technology Services, DC Web Makers and Coding Bootcamps. He has written extensively on advanced topics in web design, mobile app development and blockchain. He is a senior editor at Touchstone Words, where he writes and reviews coding and technology articles. He is also a senior instructor and developer living in Washington DC. You can follow him on LinkedIn.

- Review of Pod-to-Pod Communications in Kubernetes - September 30, 2019
