We all know Kubernetes is a distributed platform that orchestrates a set of worker nodes under the control of central master nodes. A cluster can have any number of worker nodes, across which pods are distributed. To keep track of every change and update to these nodes, and to pass on the desired actions, Kubernetes uses etcd.

What is etcd in Kubernetes?

Etcd is a distributed, reliable key-value store that is simple, fast and secure. It acts as a backend for service discovery and as a database. It runs on several servers of a Kubernetes cluster at the same time, so that it can track changes in the cluster and store the state and configuration data that the Kubernetes master needs to access. Additionally, etcd allows the Kubernetes master to support service discovery, so that deployed applications can declare their availability for inclusion in a service.

The API server on the Kubernetes master nodes communicates with etcd on behalf of the components spread across the cluster; those other components do not access etcd directly. Etcd also holds the desired state of the system.

As a key-value store, etcd stores all of the configuration of a Kubernetes cluster. This is different from a traditional database, which stores data in tabular form. In a table, some records may require additional columns that other records in the same database do not need, which creates redundancy within the database. Etcd instead stores each record as a separate entry under its own key, so updating one record does not affect the others. In this way, etcd adds and manages all records reliably for Kubernetes.
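To make the key-value model concrete, here is a minimal sketch assuming kube-apiserver's default key prefix `/registry` (configurable with its `--etcd-prefix` flag). Most namespaced objects, such as pods, are stored as one value under a key of the form `/registry/<resource>/<namespace>/<name>`; some resource types use different paths. The helper `k8s_etcd_key` and the `ETCD_PREFIX` variable are hypothetical names used only for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the etcd key under which kube-apiserver stores a
# namespaced object such as a pod, assuming the default "/registry" prefix.
k8s_etcd_key() {
  local resource="$1" namespace="$2" name="$3"
  # ETCD_PREFIX is an assumption that mirrors kube-apiserver's --etcd-prefix flag.
  local prefix="${ETCD_PREFIX:-/registry}"
  printf '%s/%s/%s/%s\n' "$prefix" "$resource" "$namespace" "$name"
}

# Key for a pod named "nginx" in the "default" namespace.
k8s_etcd_key pods default nginx
# → /registry/pods/default/nginx

# On a controller, the keys (not the values) under that prefix could be listed with:
#   sudo ETCDCTL_API=3 etcdctl get /registry/pods/default/ --prefix --keys-only \
#     --endpoints=https://127.0.0.1:2379 --cacert=/etc/etcd/ca.pem \
#     --cert=/etc/etcd/kubernetes.pem --key=/etc/etcd/kubernetes-key.pem
```

`--keys-only` is useful here because most built-in Kubernetes objects are stored in a binary (protobuf) encoding by default, so the raw values are not very readable.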

Distributed and Consistent

Etcd stores critical data for Kubernetes. Because it is distributed, it keeps a copy of its data on every member of the etcd cluster, spread across separate machines/servers. Each copy is identical and holds the same data as every other etcd member. In a three-member cluster, if one copy is destroyed, the other two still hold the same information.

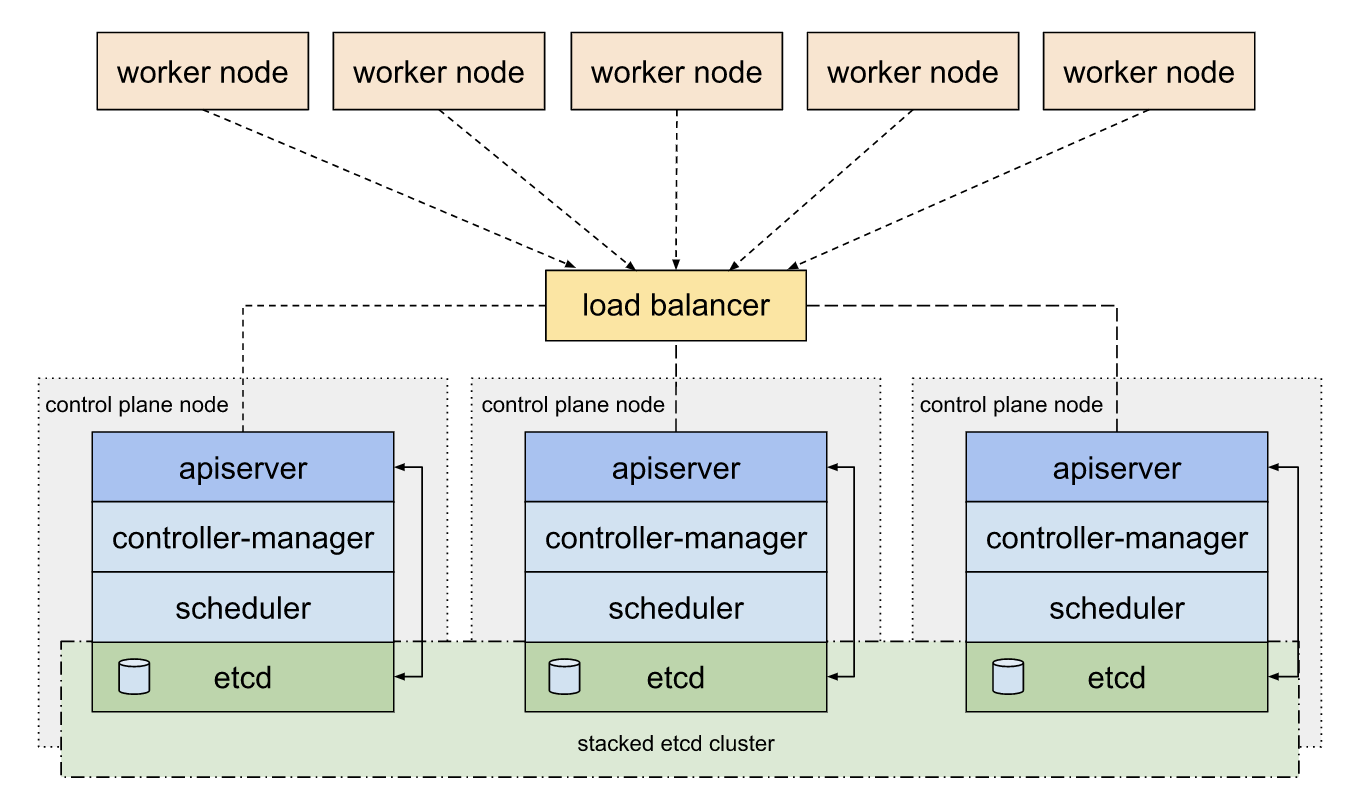

Deployment Methods for etcd in Kubernetes Clusters

Etcd is architected to enable high availability in Kubernetes. It can be deployed as pods on the master nodes (the stacked etcd topology).

Image source: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/ha-topology/

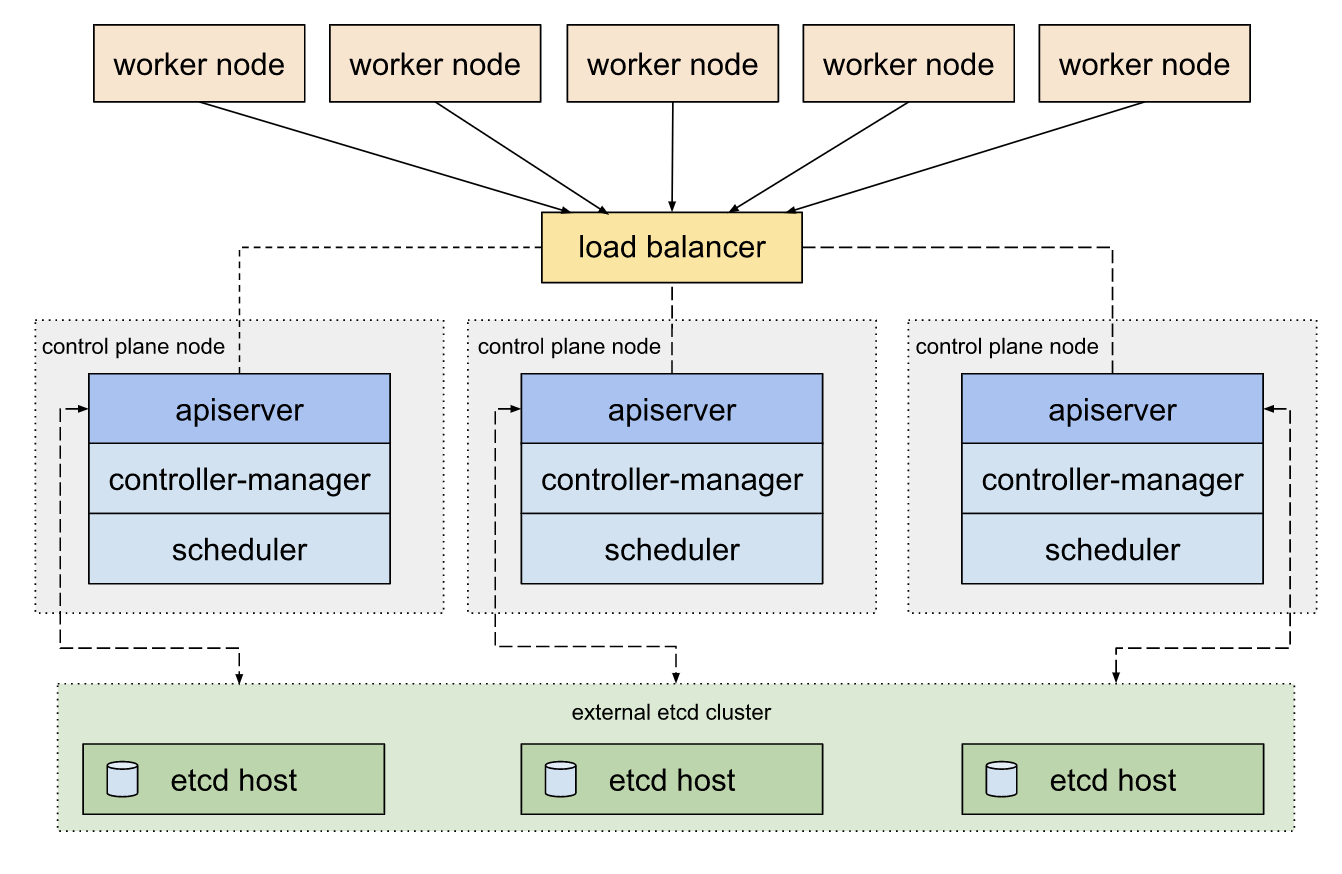

It can also be deployed externally, on dedicated hosts separate from the master nodes (the external etcd topology), to improve resiliency and security.

Image source: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/ha-topology/
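With the external topology, each kube-apiserver has to be told where the etcd members are. kube-apiserver takes them as a comma-separated list of client URLs in its `--etcd-servers` flag (alongside `--etcd-cafile`, `--etcd-certfile` and `--etcd-keyfile` for TLS). The sketch below builds that flag value from a list of member IPs, assuming etcd's default client port 2379; the function name is hypothetical and the addresses are the example controller IPs used later in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: joins etcd member IPs into a --etcd-servers value for
# kube-apiserver, assuming TLS on etcd's default client port 2379.
etcd_servers_flag() {
  local urls=() ip
  for ip in "$@"; do
    urls+=("https://${ip}:2379")
  done
  # "${urls[*]}" joins the array with the first character of IFS (a comma).
  local IFS=,
  # "--" stops printf from parsing the format string as an option.
  printf -- '--etcd-servers=%s\n' "${urls[*]}"
}

etcd_servers_flag 10.240.0.10 10.240.0.11 10.240.0.12
# → --etcd-servers=https://10.240.0.10:2379,https://10.240.0.11:2379,https://10.240.0.12:2379
```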

How etcd Works

Etcd acts as the brain of the Kubernetes cluster. The sequence of changes is monitored using etcd's ‘Watch’ function. With this function, Kubernetes can subscribe to changes within the cluster and carry out any state request coming from the API server. In this way, etcd coordinates the different components of the distributed cluster: when the state of one component changes in etcd, other components are notified and can react to that change.
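The watch mechanism can be tried from the command line with `etcdctl watch`, which blocks and prints each change under a key or key prefix. Every etcdctl call against the TLS-secured cluster set up later in this article needs the same endpoint and certificate flags, so a small wrapper helps. This is a minimal sketch: the function name `etcdctl_tls` and the `ETCDCTL_BIN` override (used here only so the command can be printed instead of executed) are hypothetical; the certificate paths are the ones used later in this article.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: runs etcdctl (v3 API) with the endpoint and TLS flags
# used in this article. ETCDCTL_BIN substitutes another command, e.g. echo.
etcdctl_tls() {
  ETCDCTL_API=3 "${ETCDCTL_BIN:-etcdctl}" \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/etcd/ca.pem \
    --cert=/etc/etcd/kubernetes.pem \
    --key=/etc/etcd/kubernetes-key.pem \
    "$@"
}

# Dry run: print the command that would watch every pod key.
ETCDCTL_BIN=echo etcdctl_tls watch /registry/pods/ --prefix

# On a controller (as root, so the key file is readable), this streams pod changes:
#   etcdctl_tls watch /registry/pods/ --prefix
```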

Keeping the same copy of the state on every etcd member raises a problem: the same data has to be stored in several etcd instances, yet different instances must not update the same record independently.

To handle this, etcd does not process writes on every cluster node. Instead, only one of the instances is responsible for processing writes. That node is called the leader. The nodes in the cluster elect the leader using the Raft algorithm. Once the leader is elected, the other nodes become its followers.

When a write request reaches the leader, the leader processes the write and broadcasts a copy of the data to the other nodes; a follower that receives a write request forwards it to the leader. The write is marked complete once a majority of the cluster members (a quorum, counting the leader itself) have acknowledged it. So even if one of the follower nodes is inactive or offline at that moment, the write still completes as long as a majority of the nodes are available.
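The majority rule can be made concrete with a little arithmetic. For a cluster of n members, a write needs acknowledgements from a quorum of floor(n/2) + 1 members, so the cluster keeps accepting writes as long as no more than n minus the quorum members are down. The sketch below uses hypothetical function names and prints this for a few cluster sizes:

```shell
#!/usr/bin/env bash
# Quorum needed to commit a write in an n-member cluster: floor(n/2) + 1.
# (Bash integer division already rounds down.)
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that can fail while a quorum is still available: n - quorum.
fault_tolerance() {
  echo $(( $1 - $(quorum "$1") ))
}

for n in 1 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
# → members=1 quorum=1 tolerated_failures=0
# → members=3 quorum=2 tolerated_failures=1
# → members=4 quorum=3 tolerated_failures=1
# → members=5 quorum=3 tolerated_failures=2
```

The four-member row shows why odd cluster sizes are usually recommended: a fourth member raises the quorum without tolerating any additional failure. On a running cluster, `etcdctl endpoint status --cluster --write-out=table` (with the same TLS flags as the `member list` command later in this article) shows which member is currently the leader.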

This is how the members elect a leader among themselves and how they ensure that a write is propagated across all instances. Etcd implements this distributed consensus using the Raft protocol.

How Clusters Work in etcd

Kubernetes is the main consumer of the etcd project, which was initiated by CoreOS. Etcd has become the norm for storing Kubernetes cluster state and tracking the pods in a cluster. Kubernetes supports various cluster architectures: etcd may run as a crucial component on the master nodes, or multiple master nodes may use etcd as a separate, isolated component.

The role of etcd varies with the system configuration of a particular architecture. This flexible placement of etcd can be used to improve scaling, and the result is workloads that are easier to support and manage.

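Here are the steps for bootstrapping etcd for Kubernetes. They assume three controller instances (controller-0, controller-1 and controller-2) on Google Compute Engine, with the TLS certificate files ca.pem, kubernetes.pem and kubernetes-key.pem already generated.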

Download the etcd release archive with wget:

```shell
wget -q --show-progress --https-only --timestamping \
  "https://github.com/etcd-io/etcd/releases/download/v3.4.0/etcd-v3.4.0-linux-amd64.tar.gz"
```

Extract the archive and install the etcd server and the etcdctl command-line tool:

```shell
{
  tar -xvf etcd-v3.4.0-linux-amd64.tar.gz
  sudo mv etcd-v3.4.0-linux-amd64/etcd* /usr/local/bin/
}
```

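Create the etcd configuration and data directories, and copy the TLS certificates into the configuration directory:

```shell
{
  sudo mkdir -p /etc/etcd /var/lib/etcd
  # Newer etcd releases expect the data directory to be accessible only by its owner.
  sudo chmod 700 /var/lib/etcd
  sudo cp ca.pem kubernetes-key.pem kubernetes.pem /etc/etcd/
}
```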

Get the internal IP address of the current compute instance. It will be used to serve client requests and to exchange data with the other etcd cluster peers. This command queries the Google Compute Engine metadata server, so it only works on GCE instances:

```shell
INTERNAL_IP=$(curl -s -H "Metadata-Flavor: Google" \
  http://metadata.google.internal/computeMetadata/v1/instance/network-interfaces/0/ip)
```

Set a unique name for this etcd member that matches the hostname of the current compute instance (it must also match one of the names listed in `--initial-cluster`):

```shell
ETCD_NAME=$(hostname -s)
```

Create the etcd.service systemd unit file:

```shell
# The unquoted EOF lets the shell expand ${ETCD_NAME} and ${INTERNAL_IP};
# each \\ is written to the unit file as a single \ line continuation.
cat <<EOF | sudo tee /etc/systemd/system/etcd.service
[Unit]
Description=etcd
Documentation=https://github.com/coreos

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd \\
  --name ${ETCD_NAME} \\
  --cert-file=/etc/etcd/kubernetes.pem \\
  --key-file=/etc/etcd/kubernetes-key.pem \\
  --peer-cert-file=/etc/etcd/kubernetes.pem \\
  --peer-key-file=/etc/etcd/kubernetes-key.pem \\
  --trusted-ca-file=/etc/etcd/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls https://${INTERNAL_IP}:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster controller-0=https://10.240.0.10:2380,controller-1=https://10.240.0.11:2380,controller-2=https://10.240.0.12:2380 \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```
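The `--initial-cluster` value hard-codes every member's name and peer URL, and each name must match the `--name` that member starts with (here, its hostname). A minimal sketch of generating that value instead of typing it, assuming `name=ip` pairs as input and etcd's default peer port 2380; the function name `initial_cluster` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds an --initial-cluster value from name=ip pairs,
# assuming TLS peer traffic on etcd's default peer port 2380.
initial_cluster() {
  local members=() pair
  for pair in "$@"; do
    # ${pair%%=*} keeps the name, ${pair#*=} keeps the IP address.
    members+=("${pair%%=*}=https://${pair#*=}:2380")
  done
  local IFS=,
  echo "${members[*]}"
}

initial_cluster controller-0=10.240.0.10 controller-1=10.240.0.11 controller-2=10.240.0.12
# → controller-0=https://10.240.0.10:2380,controller-1=https://10.240.0.11:2380,controller-2=https://10.240.0.12:2380
```

Because the heredoc delimiter in the unit-file command is unquoted, a command substitution such as `$(initial_cluster ...)` placed after `--initial-cluster` would also be expanded there, provided the helper is defined in the same shell first.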

Start the etcd server:

```shell
{
  sudo systemctl daemon-reload
  sudo systemctl enable etcd
  sudo systemctl start etcd
}
```

Repeat the above commands on each controller: controller-0, controller-1, and controller-2.

List the etcd cluster members:

```shell
sudo ETCDCTL_API=3 etcdctl member list \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/etcd/ca.pem \
  --cert=/etc/etcd/kubernetes.pem \
  --key=/etc/etcd/kubernetes-key.pem
```

Output:

```
3a57933972cb5131, started, controller-2, https://10.240.0.12:2380, https://10.240.0.12:2379
f98dc20bce6225a0, started, controller-0, https://10.240.0.10:2380, https://10.240.0.10:2379
ffed16798470cab5, started, controller-1, https://10.240.0.11:2380, https://10.240.0.11:2379
```
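In this output, each line is a comma-separated record whose first three fields are the member ID, its status and its name, followed by its peer and client URLs. A minimal sketch of turning that into a scripted health check, assuming exactly this comma-separated format; the function name `check_members` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical check: reads `etcdctl member list` output on stdin, reports any
# member whose status (2nd comma-separated field) is not "started", and exits
# non-zero if such a member exists or the input is empty.
check_members() {
  awk -F', *' '
    NF == 0 { next }                            # skip blank lines
    { total++ }
    $2 != "started" { print "not started: " $3; bad++ }
    END {
      printf "%d/%d members started\n", total - bad, total
      exit (bad > 0 || total == 0)
    }'
}

check_members <<'EOF'
3a57933972cb5131, started, controller-2, https://10.240.0.12:2380, https://10.240.0.12:2379
f98dc20bce6225a0, started, controller-0, https://10.240.0.10:2380, https://10.240.0.10:2379
ffed16798470cab5, started, controller-1, https://10.240.0.11:2380, https://10.240.0.11:2379
EOF
# → 3/3 members started

# Against a live cluster, pipe the member list command into it:
#   sudo ETCDCTL_API=3 etcdctl member list --endpoints=https://127.0.0.1:2379 \
#     --cacert=/etc/etcd/ca.pem --cert=/etc/etcd/kubernetes.pem \
#     --key=/etc/etcd/kubernetes-key.pem | check_members
```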

Conclusion

At its core, etcd is an independent project. However, it has been used extensively by the Kubernetes community to manage cluster state, enabling further automation for dynamic workloads. The key benefit of using etcd with Kubernetes is that etcd is itself a distributed database, which aligns well with distributed Kubernetes clusters. A healthy etcd is therefore vital to the health of the cluster.

About the author

Sagar Nangare is a technology blogger focusing on data center technologies (Networking, Telecom, Cloud, Storage) and emerging domains such as Open RAN, Edge Computing, IoT, Machine Learning and AI. Based in Pune, he is currently serving Coredge.io as Director – Product Marketing.
