Emerging edge cloud services such as smart city applications have suffered from a lack of acceptable APIs and services that can dynamically provision and deliver them through provider edges. Apart from caching services, which providers use widely because of the cloud’s economies of scale, and a few low-traffic gaming apps, there is little to cheer about.

A talk about the future of edge at the OpenStack Summit in Sydney was itself something of an evolving situation. Originally scheduled for presentation by Prakash Ramchandran, Rolf Schuster, and Narinder Gupta, it was instead presented by Mark Shuttleworth of Canonical and Joseph Wang of Inwinstack, because none of the original presenters could travel due to visa problems.

The good news: it’s only a matter of time before there’s another generational wave of the radio frequency spectrum as the industry moves to 5G. “That will create a new set of possibilities, but it’s also going to create a huge amount of cost,” said Shuttleworth. “It’s going to be very expensive to deploy that next generation radio frequency.” 5G will enable very high speed, very low latency communication.

“What kind of killer applications could you create in a world where you could have compute that’s very, very close to a mobile device or very, very close to a car or very, very close to somebody walking around?” asked Shuttleworth. “What sort of applications would be interesting?” That, he said, is the heart of the research project at Carnegie Mellon University (CMU), where people are already creating a developer ecosystem to form the basis of the next generation of killer apps. Others, he said, are looking at operating standards and procedures as well as the economics of this emerging technology.

Shuttleworth believes that this will emerge as a class of computing unto itself, in a line that started with desktops, then mobile apps, then data centers and large-scale clouds. The apps that emerged in each class of computing were only possible with that class; none could have existed in the previous era. “I tend to be more interested in the stuff that the Carnegie Mellon guys are interested in, which is essentially saying, ‘What kind of developers do we need to attract in order to get killer applications for edge computing?’” he said.

The technology, said Shuttleworth, will have a latency of less than 50 milliseconds. “Well, 50 milliseconds is I think 20 times a second,” he said. “So anything that you need to refresh 20 times a second (will become) things that require near real time feedback, effectively.”

An example is augmented reality. “If you want to essentially provide somebody with visuals that are overlaid on the real world,” said Shuttleworth, “then you need to refresh that 20 times a second and not be more than 50 milliseconds behind, effectively. So then you need an architecture that looks like this kind of edge cloud-type architecture.”
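The arithmetic behind that figure is simple enough to sketch. The snippet below is only an illustration: the function names and the sample round-trip times are hypothetical, and the 50-millisecond budget is the one quoted in the talk.

```python
# Illustrative sketch only: names and sample values are assumptions,
# not part of any cloudlet or OpenStack API.

def refresh_rate_hz(latency_ms: float) -> float:
    """Updates per second achievable if each update takes latency_ms."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return 1000.0 / latency_ms


def within_budget(round_trip_ms: float, budget_ms: float = 50.0) -> bool:
    """True if a measured round trip fits inside the latency budget."""
    return round_trip_ms <= budget_ms


if __name__ == "__main__":
    print(refresh_rate_hz(50.0))   # 20.0 updates per second
    print(within_budget(35.0))     # True: fits the 50 ms budget
    print(within_budget(80.0))     # False: too slow for near-real-time overlay
```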

There are many other technologies, like the Internet of Things, that will also need to be part of the connected 5G ecosystem. “There’s no suggestion that these clouds are somehow decoupled from the internet or from public clouds,” Shuttleworth said. “But there’s a very strong suggestion that what they have to do is have to be relatively tightly coupled to the end user.”

People will have mobile agents, said Shuttleworth, whether that’s a cell phone, virtual reality goggles, augmented reality, a self-driving car, a robot, or a drone. “You want to minimize the amount of compute that you’re doing on that drone,” he said. “Energy is very precious for a drone or mobile phone.” There are heavy-duty applications that run on a phone, for sure, but they tend to use up the battery. If you can get the applications off the phone with minimal latency, you could save battery life.

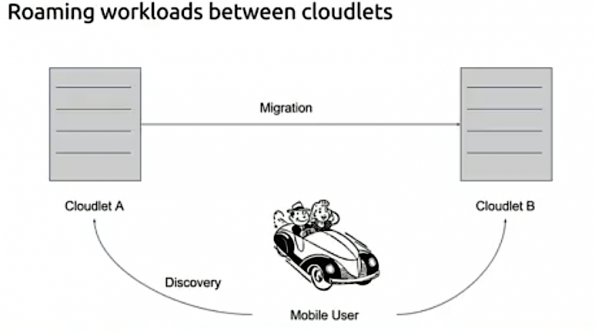

Discovery of nearby cloudlets, the small edge clouds that serve these devices, is an important primitive, said Shuttleworth. “You initiate a session with a cloudlet. You would offload work to that cloudlet; you now have a relationship with that cloudlet. That cloudlet is less than 50 milliseconds away from you in the network, so you can have a very high or low latency, high bandwidth-type conversation with that digital twin or compute (in) whatever form it is.”
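As a rough illustration of what such a discovery step might decide, here is a minimal sketch that picks the lowest-latency cloudlet inside the 50-millisecond budget. The `Cloudlet` class, the `pick_cloudlet` function, and the sample names and timings are all hypothetical; the talk does not describe the actual discovery protocol at this level of detail.

```python
# Hypothetical sketch: Cloudlet, pick_cloudlet, and the sample data are
# assumptions for illustration, not the CMU implementation.
from dataclasses import dataclass
from typing import Optional, Sequence

LATENCY_BUDGET_MS = 50.0  # budget quoted in the talk


@dataclass(frozen=True)
class Cloudlet:
    name: str
    rtt_ms: float  # round-trip time as measured from the mobile device


def pick_cloudlet(
    candidates: Sequence[Cloudlet],
    budget_ms: float = LATENCY_BUDGET_MS,
) -> Optional[Cloudlet]:
    """Return the lowest-latency cloudlet under the budget, or None."""
    eligible = [c for c in candidates if c.rtt_ms < budget_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda c: c.rtt_ms)


if __name__ == "__main__":
    seen = [
        Cloudlet("campus-east", 72.0),
        Cloudlet("campus-west", 18.5),
        Cloudlet("downtown", 41.0),
    ]
    best = pick_cloudlet(seen)
    print(best.name if best else "no cloudlet in range")
```

A device on the move would presumably rerun a check like this as its measurements change, which is what leads to the migration problem described next.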

But your mobile device will be moving, so it might need to migrate to a different cloud. “So this starts to stress and exercise OpenStack in new and unusual ways,” said Shuttleworth. “We’ve all done a lot of work with live migration in the context of a cloud. For example, you’ve got a hypervisor, you’ve got a bunch of VMs on that hypervisor, you need to reboot that hypervisor. It’s better if you can live migrate the VMs off that hypervisor.”

You’ll be migrating within the cloud, which assumes you have a consistent low-cost, high-bandwidth network interconnect. While it’s easy to migrate between two rack servers, it’s not so simple when you’re migrating between two cloudlets that might have a significant distance between them. “So a lot of the work that Carnegie Mellon has done is in optimizing, effectively, that process,” said Shuttleworth. “And the way I would characterize that is that they’ve essentially brought together a lot of the thinking that you’d find inside something like Docker with, basically, VM operations.”

In the world of Docker, it’s normal to use layered file systems. For example, two developers can start from the same copy of Ubuntu, and any changes one makes can easily be recreated on the other’s copy by applying just the delta. In the world of edge networks, however, this type of delta sharing is done through some clever block-level primitives that compress and stream the changes in a short period of time. That allows for efficient live migration of VMs even over low-bandwidth links.
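To make the block-level idea concrete, here is a minimal sketch under simplifying assumptions: both sides already hold the same base image, blocks are a fixed 4 KiB, and changed blocks are compressed with zlib. The function names and block size are hypothetical, and this is not the CMU code, which the talk does not describe in detail.

```python
# Simplified illustration of block-level delta transfer. The block size,
# function names, and use of zlib are assumptions, not the CMU primitives.
import zlib
from typing import Dict

BLOCK_SIZE = 4096


def block_delta(base: bytes, modified: bytes,
                block_size: int = BLOCK_SIZE) -> Dict[int, bytes]:
    """Map block index -> compressed block, for blocks that differ from base."""
    delta: Dict[int, bytes] = {}
    for offset in range(0, len(modified), block_size):
        new_block = modified[offset:offset + block_size]
        old_block = base[offset:offset + block_size]
        if new_block != old_block:
            delta[offset // block_size] = zlib.compress(new_block)
    return delta


def apply_delta(base: bytes, delta: Dict[int, bytes], total_len: int,
                block_size: int = BLOCK_SIZE) -> bytes:
    """Rebuild the modified image from the shared base plus the delta."""
    # Truncate or zero-pad the base to the target length, then patch blocks.
    image = bytearray(base[:total_len].ljust(total_len, b"\0"))
    for index, compressed in delta.items():
        block = zlib.decompress(compressed)
        start = index * block_size
        image[start:start + len(block)] = block
    return bytes(image)


if __name__ == "__main__":
    base = bytes(4 * BLOCK_SIZE)        # stand-in for a shared base image
    changed = bytearray(base)
    changed[5000] = 1                   # touch a single byte in block 1
    delta = block_delta(base, bytes(changed))
    print(sorted(delta))                # only the changed block is sent
    assert apply_delta(base, delta, len(changed)) == bytes(changed)
```

Only the blocks that differ cross the network, which is why the approach suits links between cloudlets that are much slower than a data center interconnect.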

That’s the concept, anyway: enabling a mobile agent (car, phone, drone, other) to discover a cloudlet, instantiate capability there, and then efficiently transfer it to a completely different cloudlet. “And then that is supposed to be the backdrop against which applications can emerge,” said Shuttleworth.

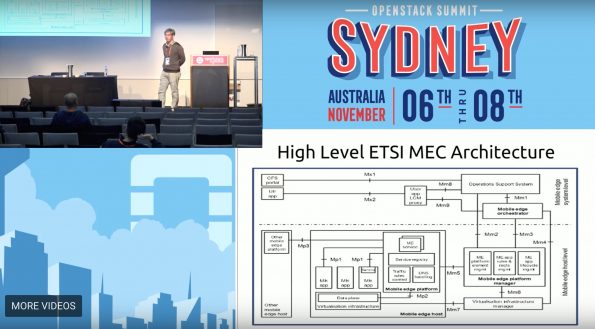

Joseph Wang took over the presentation with a high-level multi-access edge computing (MEC) schematic from the European Telecommunications Standards Institute (ETSI), which provides standards for all telecoms operating in the edge computing arena. “The ETSI also published a specifications API for people wanting to work applications on top of edge infrastructures,” said Wang. “So it lets the developer or the cloud service provider host the application on the edge gateway IT servers.” Developers need to follow this high-level API specification when creating applications on top of edge infrastructure.

Carnegie Mellon University (CMU) researchers have a branch of OpenStack that extends the platform to add some key capabilities, the first of which is the discovery of the aforementioned cloudlets. “So you imagine an agent running on your phone,” said Shuttleworth. The current implementation of this is on Android: you install an APK onto an Android phone, which is then able to discover cloudlets. The CMU campus has two cloudlets running over a prototype 5G network, and the researchers there have found a way to effectively describe how to assemble an image from known parts, like Windows or Ubuntu, and then apply deltas to get the image for a specific app on your phone. “(There’s) a language to essentially agree on things like resource allocations and network addresses and so on to essentially set up the session,” he continued.

Then the VM migration primitives, all of them open source, move these images around to keep the lag under 50 milliseconds. The researchers’ target, Shuttleworth said, is to support DSL-type speeds between cloudlets.

Inwinstack has upgraded Cloudlet (OpenStack++) from Kilo to Pike. Thanks to VM data compression, said Wang, migrations were quick. Inwinstack does a lot of API work with the different components of OpenStack, like Nova, Neutron, and Horizon. “If the OpenStack community people want to join,” said Wang, “we are more happy to ask you to join to work with us.”

Canonical, the company behind Ubuntu, got involved when Shuttleworth met CMU’s Professor Satyanarayanan (who goes by the name Satya) at 2015’s Tokyo OpenStack Summit. Shuttleworth was intrigued by the possibility of a new class of application that might emerge at the edge. “The engagement that we’ve had with his research team have mainly been around thinking about the operational consequences of having potentially tens of thousands of these cloudlets,” said Shuttleworth. “Because of the explicit latency target, you know that you have to have lots and lots and lots of them in order to cover a country, and that just means that you’re going to have to think about operating them in a totally automated way.”

Most work with OpenStack, he said, typically involves ten to twenty OpenStack deployments, not ten to twenty thousand. So far, that kind of scale is truly difficult to achieve with OpenStack.

In addition, the ratio of cloudlet overhead to actual compute capacity becomes important. If there are only three, eight, or ten nodes, for example, two nodes of overhead is a much bigger chunk of the overall capacity than it would be if you were working with 200 nodes, said Shuttleworth. “So a lot of our interest is in figuring out ways to make this whole thing operable and efficient so that at scale, the economics will be clean,” he said.
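The overhead point is easy to check with the node counts mentioned above. This is a small illustrative calculation; the function name is hypothetical, and the two-node overhead is simply the example figure from the talk.

```python
# Illustrative arithmetic only; overhead_fraction is a hypothetical helper.

def overhead_fraction(total_nodes: int, overhead_nodes: int = 2) -> float:
    """Share of a cloudlet's nodes consumed by control-plane overhead."""
    if total_nodes <= 0 or not 0 <= overhead_nodes <= total_nodes:
        raise ValueError("need 0 <= overhead_nodes <= total_nodes and total_nodes > 0")
    return overhead_nodes / total_nodes


if __name__ == "__main__":
    for nodes in (3, 8, 10, 200):
        print(f"{nodes:>3} nodes: {overhead_fraction(nodes):.0%} overhead")
```

With two overhead nodes, a three-node cloudlet spends about two thirds of its capacity on overhead, while a 200-node cloud spends 1 percent.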

One way to scale is with containerization. “We’ve been working with the CMU guys to show how you can do all the same things with a container rather than with a VM,” Shuttleworth said. “Now, the one thing that you can’t do with a container is run a Windows workload, so they have some great demos, which are literally Windows, like a Paint application that follows you around in the car. But for cases where your workload is a Linux workload, a container is going to be a much more efficient way to essentially distribute those workloads and then get more value out of that distributed compute.”

There are two ways the teams approach this. “One is LXD, which is more VM-like and has many of the same type of primitives you’d see in a VM of CentOS or Ubuntu, and (the other is) Kubernetes,” said Shuttleworth, “which is a sort of Docker orchestration capability that you can’t avoid hearing about at the Summit.”

The final piece of the puzzle, he said, is straight bare metal. “There are certain workloads where actually you might want to constitute for bare metal capability for that offload or pass through bare metal,” said Shuttleworth. “A lot of the augmented reality applications that are being developed depend on having access to GPGPU-type capabilities. So we’ve been working with the CMU guys to enable access to either raw networking or GPGPU.”

The goal, said Shuttleworth, is to enable any institution to set up cloudlets inexpensively. “If you just have five PCs, six PCs, you want to be able to press a button and have a cloudlet,” he said. “And then the next step would be essentially to enable different institutions to start collaborating so that apps can migrate effectively between cloudlets from different institutions.”

The start of that kind of future is in Pittsburgh at Carnegie Mellon. “They have two different cloudlets,” said Shuttleworth. “They have the beginnings of the radio frequency backhaul to support that. But I think they’re actively interested in getting more institutions with more diverse perspectives and specific interests from the point of view of the applications in particular to participate.”

The applications he’s seen are very interesting. They have one project between a telco and a firm of architects as well as one firm that works with drones. The project basically monitors a construction project just across the road from the university, said Shuttleworth. “They essentially are flying drones around and then comparing in real time the state of the building with the construction plans, effectively, so that they can very cheaply sign off on the quantity surveying parts of the construction project.”

There are other projects, including one in industrial automation, where someone wearing a pair of goggles can walk onto an industrial site and see exactly what valves they need to turn and other processes that need to happen without any previous knowledge. “The idea is to be able to detect exactly where they are and then overlay in their field of vision exactly the sequence of instructions,” said Shuttleworth.

Carnegie Mellon is the place it’s happening. “It’s going to be much easier to make the business case for 5G deployments if you have a real concrete sense of the apps that could exist in that world that are impossible in the world that we have today,” concluded Shuttleworth.

Check out the whole presentation below.

https://www.youtube.com/watch?v=DnB56vIDGRY
