Since the OpenStack Summit in Tokyo last November, we have realized the impact that containers will have on the global community.

There has been a lot of talk about using containers and Kubernetes instead of standard virtual machines (VMs). There are a couple of reasons for the buzz: containers are lightweight, easy and fast to deploy, and developers love that they can easily develop, maintain, scale and roll-update their applications. At tcp cloud, we focus on building private cloud solutions based on open-source technologies, so we wanted to dive into Kubernetes to see whether it can really be used in a production setup alongside or within OpenStack-powered virtualization.

Kubernetes brings a new way to manage container-based workloads and offers features for containers similar to those OpenStack provides for VMs. Once you start using Kubernetes, you quickly realize that you can easily deploy it in AWS, GCE or Vagrant, but what about your on-premise bare-metal deployment? How can you integrate it into your current OpenStack or virtualized infrastructure? Many blog posts and manuals document small clusters running in VMs with sample web applications, but none of them show real scenarios for bare-metal or enterprise performance workloads integrated with an existing network design. Just like with OpenStack, the most difficult part of the architecture is designing the networking properly. So we defined the following networking requirements:

- Multi-tenancy – separation of container workloads is a basic requirement of every security policy standard. For example, default Flannel networking only provides a flat network architecture.

- Multi-cloud support – not every workload is suitable for containers, and you still need to put heavy loads like databases in VMs or even on bare metal. For this reason, a single SDN control plane for all of them is the best option.

- Overlay – closely related to multi-tenancy. Almost every OpenStack Neutron deployment uses some kind of overlay (VXLAN, GRE, MPLSoverGRE, MPLSoverUDP), and we have to be able to interconnect them.

- Distributed routing engine – East-West and North-South traffic cannot go through one central software service. Network traffic has to go directly between OpenStack compute nodes and Kubernetes nodes. Providing routing on routers instead of proprietary gateway appliances is optimal.

Based on these requirements, we decided to start with OpenContrail SDN. Our mission was to integrate OpenStack workloads with Kubernetes and then find a suitable application stack for actual load testing.

OpenContrail overview

OpenContrail is an open source SDN and NFV solution, which has had tight ties to OpenStack since Havana. It was one of the first production-ready Neutron plugins, along with Nicira (now VMware NSX), and last summit’s survey showed it is the second most deployed solution after Open vSwitch and the most deployed vendor-based solution. OpenContrail has integrations with OpenStack, VMware, Docker and Kubernetes.

The Kubernetes network plugin, kube-network-manager, has been under development since the OpenStack Summit in Vancouver last year and was first announced at the end of the year.

The kube-network-manager process uses the Kubernetes controller framework to listen for changes to objects defined in the API and to add annotations to some of these objects. It then creates the network configuration for the application through the OpenContrail API, defining objects such as virtual networks, network interfaces and access control policies. More information is available at this blog.
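To make the watch-and-annotate pattern more concrete, here is a minimal, self-contained Python sketch of the idea. It is not the real kube-network-manager and does not call the Kubernetes or OpenContrail APIs: the event tuples, the `example.org/virtual-network` annotation key and the `FakeContrailAPI` class are hypothetical stand-ins. The controller consumes pod events, makes sure a virtual network exists, creates an interface on it and records the decision as an annotation on the pod.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple

# Hypothetical annotation key; NOT the key used by kube-network-manager.
NETWORK_ANNOTATION = "example.org/virtual-network"


@dataclass
class Pod:
    name: str
    namespace: str
    annotations: Dict[str, str] = field(default_factory=dict)


class FakeContrailAPI:
    """Hypothetical in-memory stand-in for the OpenContrail config API."""

    def __init__(self) -> None:
        self.networks: Dict[str, List[str]] = {}  # network name -> interface names

    def ensure_virtual_network(self, name: str) -> None:
        self.networks.setdefault(name, [])

    def create_interface(self, network: str, pod: Pod) -> str:
        iface = f"{pod.namespace}/{pod.name}"
        self.networks[network].append(iface)
        return iface

    def delete_interface(self, network: str, pod: Pod) -> None:
        iface = f"{pod.namespace}/{pod.name}"
        if iface in self.networks.get(network, []):
            self.networks[network].remove(iface)


def run_controller(events: Iterable[Tuple[str, Pod]], sdn: FakeContrailAPI) -> None:
    """Reconcile SDN state with a stream of (event_type, pod) tuples."""
    for event_type, pod in events:
        # Assumption for this sketch: one virtual network per namespace (tenant).
        network = f"{pod.namespace}-network"
        if event_type == "ADDED":
            sdn.ensure_virtual_network(network)
            sdn.create_interface(network, pod)
            # Record the networking decision on the API object itself.
            pod.annotations[NETWORK_ANNOTATION] = network
        elif event_type == "DELETED":
            sdn.delete_interface(network, pod)


if __name__ == "__main__":
    sdn = FakeContrailAPI()
    web = Pod("leonardo-1", "frontend")
    run_controller(
        [("ADDED", web), ("ADDED", Pod("memcached-1", "frontend")), ("DELETED", web)],
        sdn,
    )
    print(sdn.networks)  # {'frontend-network': ['frontend/memcached-1']}
```

Keying the network on the namespace is just one simple way to get the per-tenant separation listed in the requirements; the real plugin's mapping rules may differ.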

Architecture

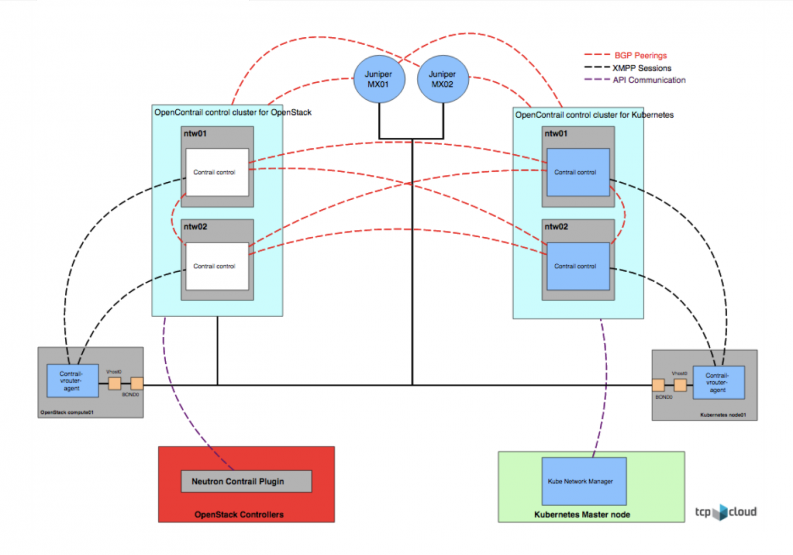

We started testing with two independent Contrail deployments and then set up a BGP federation between them. The federation is needed because of Keystone authentication: when contrail-neutron-plugin is enabled, the Contrail API uses Keystone authentication, which is not yet implemented in the Kubernetes plugin (kube-network-manager). The Contrail federation is described in more detail later in this post.

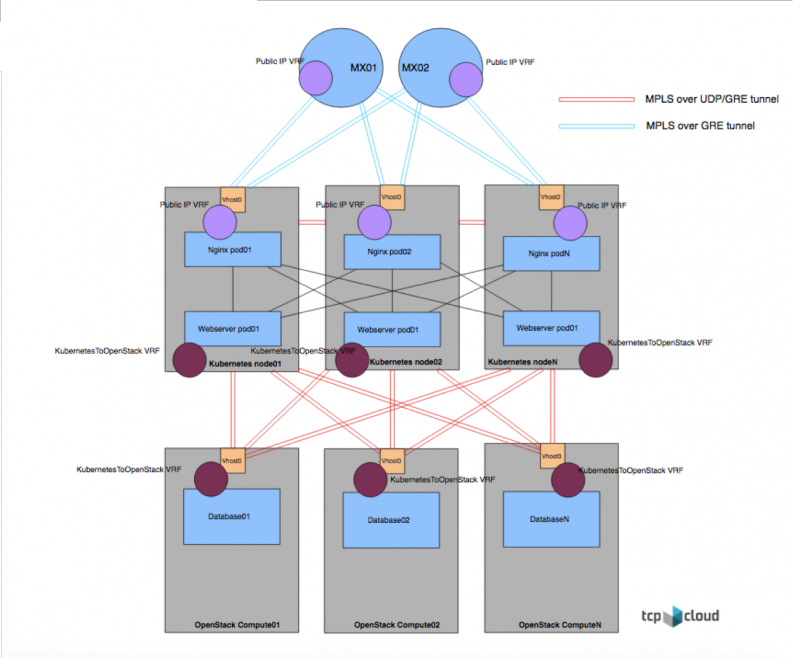

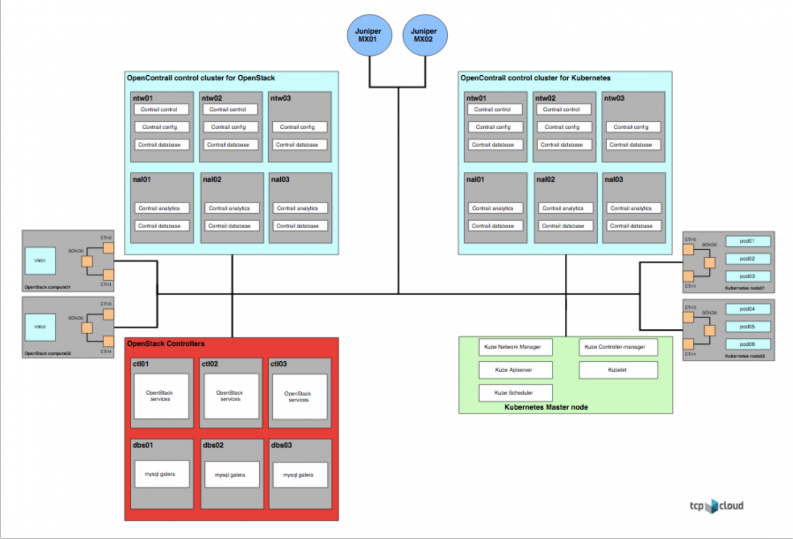

The following schema shows the high-level architecture, with the OpenStack cluster on the left and the Kubernetes cluster on the right. OpenStack and OpenContrail are deployed in a fully highly available (HA) best-practice design, which can scale up to hundreds of compute nodes.

OpenStack integration with Kubernetes

The following figure shows the federation of two Contrail clusters. In general, this feature enables Contrail controllers to connect different sites of a multi-site DC without requiring a physical gateway. The control nodes at each site peer with the other sites using BGP, which makes it possible to stretch both L2 and L3 networks across multiple DCs.
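As a rough model of what BGP peering between two federated clusters involves, the Python sketch below enumerates the sessions needed if every control node peers with every other control node across both sites (a full mesh). This is only a model of the peering topology, not Contrail configuration; the three-node-per-site layout and all addresses are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical control-node addresses for two federated Contrail clusters
# (three control nodes each, assuming an HA deployment).
SITES: Dict[str, List[str]] = {
    "openstack": ["10.0.10.11", "10.0.10.12", "10.0.10.13"],
    "kubernetes": ["10.0.20.11", "10.0.20.12", "10.0.20.13"],
}


def full_mesh_sessions(sites: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Return every unordered pair of control nodes (one BGP session each)."""
    nodes = [ip for ips in sites.values() for ip in ips]
    return list(combinations(nodes, 2))


def cross_site_sessions(sites: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Return only the sessions that federation adds between different sites."""
    site_of = {ip: name for name, ips in sites.items() for ip in ips}
    return [(a, b) for a, b in full_mesh_sessions(sites) if site_of[a] != site_of[b]]


if __name__ == "__main__":
    print(len(full_mesh_sessions(SITES)))   # 15 sessions in total (6 choose 2)
    print(len(cross_site_sessions(SITES)))  # 9 of them cross the site boundary
```

The quadratic growth of a full mesh is why the number of control nodes per site is usually kept small.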

This design is usually used for two independent OpenStack clouds or two OpenStack regions. All Contrail components, including the vRouter, are exactly the same on both sides; kube-network-manager and neutron-contrail-plugin just translate API requests from their respective platforms. The core functionality of the networking solution remains unchanged, which brings not only a robust networking engine, but analytics too.

OpenContrail control plane

Application Stack Overview

Let’s have a look at a typical scenario. Our developers gave us a Docker Compose file (compose.yml), which they use for development and local testing on their laptops. This makes things easier, because our developers already know Docker and the application workload is Docker-ready. The application stack contains the following components:

- Database – PostgreSQL or MySQL database cluster.

- Memcached – used for content caching.

- Django app Leonardo – Django CMS Leonardo was used for application stack testing.

- Nginx – web proxy.

- Load balancer – HAProxy load balancer for container scaling.
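The dependencies between these components determine the order in which they can come up. The Python sketch below models the stack the way compose `depends_on` entries might describe it and derives one valid startup order with the standard library's `graphlib` (Python 3.9+). The service names and the exact dependency edges are assumptions for illustration, not taken from the developers' actual compose.yml.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical dependency map in the spirit of compose `depends_on`:
# each service lists the services that must be up before it starts.
STACK: Dict[str, Set[str]] = {
    "postgresql": set(),
    "memcached": set(),
    "leonardo": {"postgresql", "memcached"},  # Django CMS needs DB and cache
    "nginx": {"leonardo"},                    # web proxy in front of Django
    "haproxy": {"nginx"},                     # load balancer in front of the proxies
}


def startup_order(stack: Dict[str, Set[str]]) -> List[str]:
    """Return one valid order in which to start the services."""
    return list(TopologicalSorter(stack).static_order())


if __name__ == "__main__":
    print(startup_order(STACK))
```

Only the relative order of dependent services is guaranteed; independent services such as `postgresql` and `memcached` may appear in either order.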

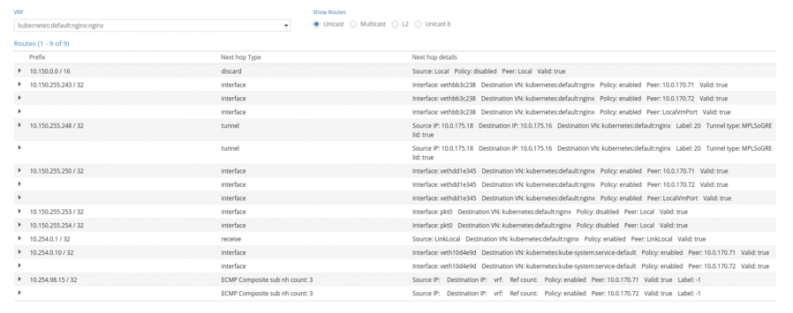

To get it into production, we could transform everything into Kubernetes replication controllers with services, but as we mentioned at the beginning, not everything is suitable for containers. So we separate the database cluster onto OpenStack VMs and rewrite the rest into Kubernetes manifests.
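To give a feel for that rewrite, the following Python sketch builds a minimal Kubernetes `v1` ReplicationController and a matching Service for the Django app as plain dictionaries and prints them as JSON, a format `kubectl create -f` accepts alongside YAML. The image name `leonardo-cms:latest`, the `app: leonardo` label, the replica count and port 8000 are hypothetical placeholders, not values from the actual deployment.

```python
import json
from typing import Any, Dict

# Hypothetical values; substitute the real image, labels and ports.
APP = "leonardo"
IMAGE = "leonardo-cms:latest"
PORT = 8000


def replication_controller(replicas: int = 3) -> Dict[str, Any]:
    labels = {"app": APP}
    return {
        "apiVersion": "v1",
        "kind": "ReplicationController",
        "metadata": {"name": APP},
        "spec": {
            "replicas": replicas,
            "selector": labels,  # RC selectors are plain label maps
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": APP,
                        "image": IMAGE,
                        "ports": [{"containerPort": PORT}],
                    }]
                },
            },
        },
    }


def service() -> Dict[str, Any]:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        "spec": {
            "selector": {"app": APP},  # must match the pod template labels
            "ports": [{"port": 80, "targetPort": PORT}],
        },
    }


if __name__ == "__main__":
    # A v1 List wraps both objects so they can be created from one file.
    manifest = {"apiVersion": "v1", "kind": "List",
                "items": [replication_controller(), service()]}
    print(json.dumps(manifest, indent=2))
```

The Service finds the pods purely through the label selector, so the only coupling between the two objects is the shared `app` label.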

Application deployment

This section describes the workflow for application provisioning on OpenStack and Kubernetes.

OpenStack side

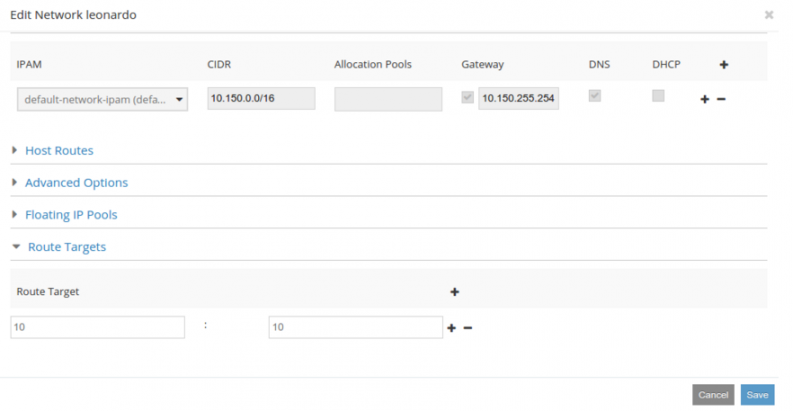

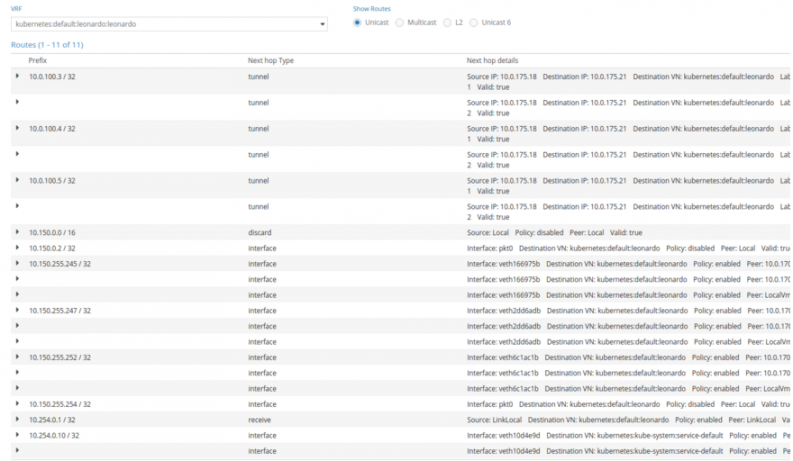

In the first step, we launched a Heat database stack on OpenStack. This created three VMs running PostgreSQL and a database network, which is a private, tenant-isolated network.
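The Heat stack itself is not reproduced in this post. As a hedged approximation, the Python sketch below assembles a minimal HOT template containing the resources the text mentions (a tenant network with a subnet and three PostgreSQL servers) and prints it as JSON, which Heat also accepts as template input. The image and flavor names are hypothetical, the `10.0.100.0/24` CIDR is inferred from the addresses in the `nova list` output, and any PostgreSQL installation or clustering configuration is omitted.

```python
import json
from typing import Any, Dict

# Hypothetical image and flavor names.
IMAGE = "ubuntu-14.04-postgresql"
FLAVOR = "m1.medium"


def database_stack(servers: int = 3) -> Dict[str, Any]:
    resources: Dict[str, Any] = {
        "leonardodb_net": {
            "type": "OS::Neutron::Net",
            "properties": {"name": "leonardodb"},
        },
        "leonardodb_subnet": {
            "type": "OS::Neutron::Subnet",
            "properties": {
                "network": {"get_resource": "leonardodb_net"},
                "cidr": "10.0.100.0/24",  # inferred from the nova list output
            },
        },
    }
    for i in range(1, servers + 1):
        resources[f"postgresql_{i}"] = {
            "type": "OS::Nova::Server",
            # Ensure the subnet exists before the server's port is created.
            "depends_on": "leonardodb_subnet",
            "properties": {
                "name": f"PostgreSQL-{i}",
                "image": IMAGE,
                "flavor": FLAVOR,
                "networks": [{"network": {"get_resource": "leonardodb_net"}}],
            },
        }
    return {
        "heat_template_version": "2015-04-30",
        "description": "PostgreSQL VMs on a private tenant network (sketch)",
        "resources": resources,
    }


if __name__ == "__main__":
    print(json.dumps(database_stack(), indent=2))
```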

```
# nova list
+--------------------------------------+--------------+--------+------------+-------------+-----------------------+
| ID                                   | Name         | Status | Task State | Power State | Networks              |
+--------------------------------------+--------------+--------+------------+-------------+-----------------------+
| d02632b7-9ee8-486f-8222-b0cc1229143c | PostgreSQL-1 | ACTIVE | -          | Running     | leonardodb=10.0.100.3 |
| b5ff88f8-0b81-4427-a796-31f3577333b5 | PostgreSQL-2 | ACTIVE | -          | Running     | leonardodb=10.0.100.4 |
| 7681678e-6e75-49f7-a874-2b1bb0a120bd | PostgreSQL-3 | ACTIVE | -          | Running     | leonardodb=10.0.100.5 |
+--------------------------------------+--------------+--------+------------+-------------+-----------------------+
```
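The database addresses in this table are what the containerized application will eventually need to reach. As a small convenience of our own (not part of the original workflow), the Python sketch below extracts server names and IP addresses from `nova list` output like the table above. It assumes the default table layout, with a `Networks` column of the form `network=ip, ip; network2=ip`.

```python
from typing import Dict, List

SAMPLE = """
+----+------+--------+------------+-------------+----------+
| ID | Name | Status | Task State | Power State | Networks |
| d02632b7-9ee8-486f-8222-b0cc1229143c | PostgreSQL-1 | ACTIVE | - | Running | leonardodb=10.0.100.3 |
| b5ff88f8-0b81-4427-a796-31f3577333b5 | PostgreSQL-2 | ACTIVE | - | Running | leonardodb=10.0.100.4 |
| 7681678e-6e75-49f7-a874-2b1bb0a120bd | PostgreSQL-3 | ACTIVE | - | Running | leonardodb=10.0.100.5 |
"""


def parse_nova_list(output: str) -> Dict[str, Dict[str, List[str]]]:
    """Map server name -> {network name: [ip, ...]} from `nova list` output."""
    # Keep table rows only; "+---+" separator lines are skipped.
    rows = [line.strip() for line in output.splitlines() if line.strip().startswith("|")]
    if not rows:
        return {}
    header = [cell.strip() for cell in rows[0].strip("|").split("|")]
    name_col, net_col = header.index("Name"), header.index("Networks")
    servers: Dict[str, Dict[str, List[str]]] = {}
    for row in rows[1:]:
        cells = [cell.strip() for cell in row.strip("|").split("|")]
        networks: Dict[str, List[str]] = {}
        # Assumed format: networks separated by ";", IPs within one by ",".
        for entry in filter(None, cells[net_col].split(";")):
            net, _, ips = entry.strip().partition("=")
            networks[net] = [ip.strip() for ip in ips.split(",") if ip.strip()]
        servers[cells[name_col]] = networks
    return servers


if __name__ == "__main__":
    print(parse_nova_list(SAMPLE)["PostgreSQL-1"])  # {'leonardodb': ['10.0.100.3']}
```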
