This process should work with any OpenStack Newton platform deployed with TripleO; an already deployed environment updated with the configuration templates described here should also work.

The workflow is based on the upstream documentation.

Architecture setup

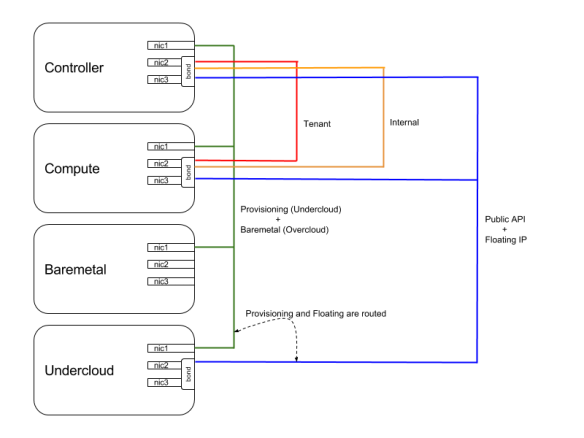

With this setup, we can have virtual instances and instances on baremetal nodes in the same environment. In this architecture, I’m using floating IPs with VMs and a provisioning network with the baremetal nodes.

To be able to test this setup in a lab with virtual machines, we can use Libvirt+KVM using VMs for all the nodes in an all-in-one lab. The network topology is described in the diagram below.

Ideally we would have more networks, such as a dedicated network for cleaning the disks and another one for provisioning the baremetal nodes from the Overcloud, with room for an extra one as the tenant network for the baremetal nodes in the Overcloud. For simplicity, though, I reused the Undercloud’s provisioning network in this lab for these four network roles:

- Provisioning from the Undercloud

- Provisioning from the Overcloud

- Cleaning the baremetal nodes’ disks

- Baremetal tenant network for the Overcloud nodes

Virtual environment configuration

To test root_device hints on the nodes (Libvirt VMs) that will act as baremetal nodes, we must define the first disk in Libvirt with a SCSI bus and a World Wide Name (wwn):

<disk type='file' device='disk'>
  <driver name='qemu' type='qcow2'/>
  <source file='/var/lib/virtual-machines/overcloud-2-node4-disk1.qcow2'/>
  <target dev='sda' bus='scsi'/>
  <wwn>0x0000000000000001</wwn>
</disk>

To verify the hints, we can optionally introspect the node in the Undercloud (as currently there’s no introspection in the Overcloud). This is what we can see after introspection of the node in the Undercloud:

$ openstack baremetal introspection data save 7740e442-96a6-496c-9bb2-7cac89b6a8e7 | jq '.inventory.disks'
[
  {
    "size": 64424509440,
    "rotational": true,
    "vendor": "QEMU",
    "name": "/dev/sda",
    "wwn_vendor_extension": null,
    "wwn_with_extension": "0x0000000000000001",
    "model": "QEMU HARDDISK",
    "wwn": "0x0000000000000001",
    "serial": "0000000000000001"
  },
  {
    "size": 64424509440,
    "rotational": true,
    "vendor": "0x1af4",
    "name": "/dev/vda",
    "wwn_vendor_extension": null,
    "wwn_with_extension": null,
    "model": "",
    "wwn": null,
    "serial": null
  },
  {
    "size": 64424509440,
    "rotational": true,
    "vendor": "0x1af4",
    "name": "/dev/vdb",
    "wwn_vendor_extension": null,
    "wwn_with_extension": null,
    "model": "",
    "wwn": null,
    "serial": null
  },
  {
    "size": 64424509440,
    "rotational": true,
    "vendor": "0x1af4",
    "name": "/dev/vdc",
    "wwn_vendor_extension": null,
    "wwn_with_extension": null,
    "model": "",
    "wwn": null,
    "serial": null
  }
]

Undercloud templates

The following templates contain all the changes needed to configure Ironic and to adapt the NIC config to have a dedicated OVS bridge for Ironic as required.

Ironic configuration

~/templates/ironic.yaml:

parameter_defaults:
  IronicEnabledDrivers:
    - pxe_ssh
  NovaSchedulerDefaultFilters:
    - RetryFilter
    - AggregateInstanceExtraSpecsFilter
    - AvailabilityZoneFilter
    - RamFilter
    - DiskFilter
    - ComputeFilter
    - ComputeCapabilitiesFilter
    - ImagePropertiesFilter
  IronicCleaningDiskErase: metadata
  IronicIPXEEnabled: true
  ControllerExtraConfig:
    ironic::drivers::ssh::libvirt_uri: 'qemu:///system'

Network configuration

First we map an extra bridge called br-baremetal which will be used by Ironic:

~/templates/network-environment.yaml:

[...]
parameter_defaults:
  [...]
  NeutronBridgeMappings: datacentre:br-ex,baremetal:br-baremetal
  NeutronFlatNetworks: datacentre,baremetal

This bridge will be configured in the provisioning network (control plane) of the controllers as we will reuse this network as the Ironic network later. If we wanted to add a dedicated network, we would do the same configuration.
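The two parameters have to agree: every network named in NeutronFlatNetworks needs a matching entry in NeutronBridgeMappings, otherwise there is no bridge to attach the flat network to. A minimal shell sketch of that check, assuming explicit network names (no wildcard) and a hypothetical helper name, check_flat_networks:

```shell
#!/bin/sh
# Hypothetical helper, not part of TripleO: verify that every flat network
# has a "network:bridge" entry in the bridge mappings string.
check_flat_networks() {
    mappings=$1        # e.g. datacentre:br-ex,baremetal:br-baremetal
    flat_networks=$2   # e.g. datacentre,baremetal
    status=0
    old_ifs=$IFS
    IFS=,
    for net in $flat_networks; do
        case ",$mappings," in
            *",$net:"*) ;;   # a mapping exists for this network
            *) echo "no bridge mapping for flat network: $net" >&2
               status=1 ;;
        esac
    done
    IFS=$old_ifs
    return $status
}

# Values taken from network-environment.yaml above.
check_flat_networks "datacentre:br-ex,baremetal:br-baremetal" "datacentre,baremetal" \
    && echo "bridge mappings cover all flat networks"
```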

It is important to mention that this Ironic network used for provisioning can’t be VLAN tagged, which is yet another reason to justify using the Undercloud’s provisioning network for this lab:

~/templates/nic-configs/controller.yaml:

[...]
network_config:
  -
    type: ovs_bridge
    name: br-baremetal
    use_dhcp: false
    members:
      -
        type: interface
        name: eth0
    addresses:
      -
        ip_netmask:
          list_join:
            - '/'
            - - {get_param: ControlPlaneIp}
              - {get_param: ControlPlaneSubnetCidr}
    routes:
      -
        ip_netmask: 169.254.169.254/32
        next_hop: {get_param: EC2MetadataIp}
[...]

Deployment

This is the deployment script I’ve used. Note there’s a roles_data.yaml template to add a composable role (a new feature in OSP 10) that I used for the deployment of an Operational Tools server (Sensu and Fluentd). The deployment also includes three Ceph nodes. These are irrelevant for the purpose of this setup but I wanted to test it all together in an advanced and more realistic architecture.

Red Hat’s documentation contains the details for configuring these advanced options and the base configuration with the platform director.

~/deployment-scripts/ironic-ha-net-isol-deployment-dupa.sh:

openstack overcloud deploy \
    --templates \
    -r ~/templates/roles_data.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml \
    -e ~/templates/network-environment.yaml \
    -e ~/templates/ceph-storage.yaml \
    -e ~/templates/parameters.yaml \
    -e ~/templates/firstboot/firstboot.yaml \
    -e ~/templates/ips-from-pool-all.yaml \
    -e ~/templates/fluentd-client.yaml \
    -e ~/templates/sensu-client.yaml \
    -e /usr/share/openstack-tripleo-heat-templates/environments/services/ironic.yaml \
    -e ~/templates/ironic.yaml \
    --control-scale 3 \
    --compute-scale 1 \
    --ceph-storage-scale 3 \
    --compute-flavor compute \
    --control-flavor control \
    --ceph-storage-flavor ceph-storage \
    --timeout 60 \
    --libvirt-type kvm

Post-deployment configuration

Verifications

After the deployment completes successfully, we should see how the controllers have the compute service enabled:

$ . overcloudrc
$ openstack compute service list -c Binary -c Host -c State
+------------------+------------------------------------+-------+
| Binary           | Host                               | State |
+------------------+------------------------------------+-------+
| nova-consoleauth | overcloud-controller-1.localdomain | up    |
| nova-scheduler   | overcloud-controller-1.localdomain | up    |
| nova-conductor   | overcloud-controller-1.localdomain | up    |
| nova-compute     | overcloud-controller-1.localdomain | up    |
| nova-consoleauth | overcloud-controller-0.localdomain | up    |
| nova-consoleauth | overcloud-controller-2.localdomain | up    |
| nova-scheduler   | overcloud-controller-0.localdomain | up    |
| nova-scheduler   | overcloud-controller-2.localdomain | up    |
| nova-conductor   | overcloud-controller-0.localdomain | up    |
| nova-conductor   | overcloud-controller-2.localdomain | up    |
| nova-compute     | overcloud-controller-0.localdomain | up    |
| nova-compute     | overcloud-controller-2.localdomain | up    |
| nova-compute     | overcloud-compute-0.localdomain    | up    |
+------------------+------------------------------------+-------+

And the driver we passed with IronicEnabledDrivers is also enabled:

$ openstack baremetal driver list
+---------------------+------------------------------------------------------------------------------------------------------------+
| Supported driver(s) | Active host(s)                                                                                             |
+---------------------+------------------------------------------------------------------------------------------------------------+
| pxe_ssh             | overcloud-controller-0.localdomain, overcloud-controller-1.localdomain, overcloud-controller-2.localdomain |
+---------------------+------------------------------------------------------------------------------------------------------------+

Bare metal network

This network will be:

- The provisioning network for the Overcloud’s Ironic.

- The cleaning network for wiping the baremetal node’s disks.

- The tenant network for the Overcloud’s Ironic instances.
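Because this single flat network shares a subnet with the Undercloud’s ctlplane, its allocation pool must not overlap any range the Undercloud already uses. A small shell sketch of that check follows. The Undercloud range in the example (192.168.3.5 to 192.168.3.100) is an assumption for illustration and should be replaced with the values from your own Undercloud configuration; the function names are hypothetical:

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ranges_overlap START_A END_A START_B END_B
# Succeeds (exit 0) when the two inclusive ranges share at least one address.
ranges_overlap() {
    a_start=$(ip_to_int "$1"); a_end=$(ip_to_int "$2")
    b_start=$(ip_to_int "$3"); b_end=$(ip_to_int "$4")
    [ "$a_start" -le "$b_end" ] && [ "$b_start" -le "$a_end" ]
}

# Overcloud baremetal pool used in this post vs. an ASSUMED Undercloud range.
if ranges_overlap 192.168.3.150 192.168.3.170 192.168.3.5 192.168.3.100; then
    echo "allocation pool overlaps the Undercloud range" >&2
else
    echo "allocation pool is free of overlaps"
fi
```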

Create the baremetal network in the Overcloud with the same subnet and gateway as the Undercloud’s ctlplane using a different range:

$ . overcloudrc
$ openstack network create \
    --share \
    --provider-network-type flat \
    --provider-physical-network baremetal \
    --external \
    baremetal
$ openstack subnet create \
    --network baremetal \
    --subnet-range 192.168.3.0/24 \
    --gateway 192.168.3.1 \
    --allocation-pool start=192.168.3.150,end=192.168.3.170 \
    baremetal-subnet

Then, we need to configure each controller’s /etc/ironic/ironic.conf to use this network to clean the nodes’ disks at registration time and also before tenants use them as baremetal instances:

$ openstack network show baremetal -f value -c id
f7af39df-2576-4042-87c0-14c395ca19b4

$ ssh heat-admin@$CONTROLLER_IP
$ sudo vi /etc/ironic/ironic.conf
# set this option in the file:
cleaning_network_uuid=f7af39df-2576-4042-87c0-14c395ca19b4
$ sudo systemctl restart openstack-ironic-conductor

We should also leave it ready to be included in our next update by adding it to the “ControllerExtraConfig” section in the ironic.yaml template:

parameter_defaults:
  ControllerExtraConfig:
    ironic::conductor::cleaning_network_uuid: f7af39df-2576-4042-87c0-14c395ca19b4

Bare metal deployment images

We can use the same deployment images we use in the Undercloud:

$ openstack image create --public --container-format aki --disk-format aki --file ~/images/ironic-python-agent.kernel deploy-kernel
$ openstack image create --public --container-format ari --disk-format ari --file ~/images/ironic-python-agent.initramfs deploy-ramdisk

We could also create them using the CoreOS images. For example, if we wanted to troubleshoot the deployment, we could use the CoreOS images and enable debug output in the Ironic Python Agent, or add our SSH key to get access during the deployment of the image.

Bare metal instance images

Again, for simplicity, we can use the overcloud-full image we use in the Undercloud:

$ KERNEL_ID=$(openstack image create --file ~/images/overcloud-full.vmlinuz --public --container-format aki --disk-format aki -f value -c id overcloud-full.vmlinuz)
$ RAMDISK_ID=$(openstack image create --file ~/images/overcloud-full.initrd --public --container-format ari --disk-format ari -f value -c id overcloud-full.initrd)
$ openstack image create --file ~/images/overcloud-full.qcow2 --public --container-format bare --disk-format qcow2 --property kernel_id=$KERNEL_ID --property ramdisk_id=$RAMDISK_ID overcloud-full

Note that it uses kernel and ramdisk images, as the Overcloud default image is a partition image.

Create flavors

We create two flavors to start with, one for the baremetal instances and another one for the virtual instances.

$ openstack flavor create --ram 1024 --disk 20 --vcpus 1 baremetal
$ openstack flavor create --disk 20 m1.small

Bare metal instances flavor

Then we set a Boolean property, also called baremetal, on the newly created baremetal flavor. The same property will be set on the host aggregates (see below) to differentiate the nodes for baremetal instances from the nodes for virtual instances.
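This works because the AggregateInstanceExtraSpecsFilter listed in NovaSchedulerDefaultFilters compares a flavor’s extra specs with the metadata of the aggregate a host belongs to. The following shell sketch is a simplified model of that matching rule, not Nova’s code: it only handles exact key=value matches, leaves every key with a scope prefix (such as capabilities:boot_option) to other filters, and uses a hypothetical function name:

```shell
#!/bin/sh
# aggregate_accepts FLAVOR_SPECS AGGREGATE_PROPS
# Both arguments are comma-separated key=value lists.
# Succeeds when every unscoped flavor spec is present in the aggregate.
aggregate_accepts() {
    flavor_specs=$1
    aggregate_props=$2
    old_ifs=$IFS
    IFS=,
    for spec in $flavor_specs; do
        case $spec in
            *:*) continue ;;   # scoped key: ignored in this simplified model
        esac
        case ",$aggregate_props," in
            *",$spec,"*) ;;    # the aggregate carries the same key=value
            *) IFS=$old_ifs
               return 1 ;;
        esac
    done
    IFS=$old_ifs
    return 0
}

# The baremetal flavor against the two aggregates used in this post.
aggregate_accepts "baremetal=true,capabilities:boot_option=local" "baremetal=true" \
    && echo "baremetal flavor matches baremetal-hosts"
aggregate_accepts "baremetal=true,capabilities:boot_option=local" "baremetal=false" \
    || echo "baremetal flavor does not match virtual-hosts"
```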

Since the default boot_option is netboot, we also set it to local (and later we will do the same when we create the baremetal node):

$ openstack flavor set baremetal --property baremetal=true
$ openstack flavor set baremetal --property capabilities:boot_option="local"

Virtual instances flavor

Lastly, we set the flavor for virtual instances with the boolean property set to false:

$ openstack flavor set m1.small --property baremetal=false

Create host aggregates

To have OpenStack differentiate between baremetal and virtual instances, we can create host aggregates so that the nova-compute service running on the controllers is used just for Ironic and the one on the compute nodes is used for virtual instances:

$ openstack aggregate create --property baremetal=true baremetal-hosts
$ openstack aggregate create --property baremetal=false virtual-hosts
$ for compute in $(openstack hypervisor list -f value -c "Hypervisor Hostname" | grep compute); do openstack aggregate add host virtual-hosts $compute; done
$ openstack aggregate add host baremetal-hosts overcloud-controller-0.localdomain
$ openstack aggregate add host baremetal-hosts overcloud-controller-1.localdomain
$ openstack aggregate add host baremetal-hosts overcloud-controller-2.localdomain

Register the nodes in Ironic

The nodes can be registered with the command, openstack baremetal create, and a YAML template where the node is defined. In this example, I register only one node (overcloud-2-node4), which I had previously registered in the Undercloud for introspection (and later deleted from it or set to “maintenance” mode to avoid conflicts between the two Ironic services).

The root_device section contains commented examples of the hints we could use. Remember that while configuring the Libvirt XML file for the node above, we added a wwn ID section, which is the one we’ll use in this example.
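To decide which hint to use, it can help to list the disks that actually report a WWN in the introspection data saved earlier. A minimal sketch using jq, as in the introspection command above; the function name and the node.json file name are hypothetical:

```shell
#!/bin/sh
# Print "<device> <wwn>" for every disk that reports a WWN in a saved
# introspection data file, i.e. the JSON written by
# "openstack baremetal introspection data save <uuid>".
list_wwn_disks() {
    jq -r '.inventory.disks[] | select(.wwn != null) | "\(.name) \(.wwn)"' "$1"
}

# Example, run on the Undercloud:
#   openstack baremetal introspection data save <uuid> > node.json
#   list_wwn_disks node.json
```

With the introspection data shown earlier, only /dev/sda reports a WWN, so it is the only disk a wwn hint can select.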

This template is like the instackenv.json one in the Undercloud, but in YAML.

$ cat overcloud-2-node4.yaml
nodes:
  - name: overcloud-2-node4
    driver: pxe_ssh
    driver_info:
      ssh_username: stack
      ssh_key_contents: |
        -----BEGIN RSA PRIVATE KEY-----
        MIIEogIBAAKCAQEAxc0a2u18EgTy5y9JvaExDXP2pWuE8Ebyo24AOo1iQoWR7D5n
        fNjkgCeKZRbABhsdoMBmbDMtn0PO3lzI2HnZQBB4BdBZprAiQ1NwKKotUv9puTeY
        [..]
        7DsSKAL4EDqjufY3h+4fRwOcD+EFqlUTDG1sjsSDKjdiHyYMzjcrg8nbaj/M9kAs
        xXnSm9686KxUiCDXO5FWKun204B18mPH1UP20aYw098t6aAQwm4=
        -----END RSA PRIVATE KEY-----
      ssh_virt_type: virsh
      ssh_address: 10.0.0.1
    properties:
      cpus: 4
      memory_mb: 12288
      local_gb: 60
      #boot_option: local (it doesn't set 'capabilities')
      root_device:
        # vendor: "0x1af4"
        # model: "QEMU HARDDISK"
        # size: 64424509440
        wwn: "0x0000000000000001"
        # serial: "0000000000000001"
        # vendor: QEMU
        # name: /dev/sda
    ports:
      - address: 52:54:00:a0:af:da

We create the node using the above template:

$ openstack baremetal create overcloud-2-node4.yaml

Then we have to specify the deployment kernel and ramdisk for the node:

$ DEPLOY_KERNEL=$(openstack image show deploy-kernel -f value -c id)
$ DEPLOY_RAMDISK=$(openstack image show deploy-ramdisk -f value -c id)
$ openstack baremetal node set $(openstack baremetal node show overcloud-2-node4 -f value -c uuid) \
    --driver-info deploy_kernel=$DEPLOY_KERNEL \
    --driver-info deploy_ramdisk=$DEPLOY_RAMDISK

And lastly, just like we do in the Undercloud, we set the node to available:

$ openstack baremetal node manage $(openstack baremetal node show overcloud-2-node4 -f value -c uuid)
$ openstack baremetal node provide $(openstack baremetal node show overcloud-2-node4 -f value -c uuid)

You can have all of this in a script and run it together every time you register a node.
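A minimal sketch of such a script, assuming the deploy-kernel and deploy-ramdisk images created earlier and an already sourced overcloudrc. The function names, the POLL_INTERVAL variable and the polling on the provision_state column are choices made for this example rather than part of the original workflow:

```shell
#!/bin/sh
# register_node NAME YAML_FILE
# Runs the manual registration steps for one node in the Overcloud's Ironic.
register_node() {
    node_name=$1
    node_file=$2

    # Create the node and its port from the YAML definition.
    openstack baremetal create "$node_file" || return 1

    # Look up the IDs once instead of repeating the sub-commands.
    node_uuid=$(openstack baremetal node show "$node_name" -f value -c uuid) || return 1
    deploy_kernel=$(openstack image show deploy-kernel -f value -c id) || return 1
    deploy_ramdisk=$(openstack image show deploy-ramdisk -f value -c id) || return 1

    openstack baremetal node set "$node_uuid" \
        --driver-info deploy_kernel="$deploy_kernel" \
        --driver-info deploy_ramdisk="$deploy_ramdisk" || return 1

    # Make the node manageable, then let Ironic clean it and make it available.
    openstack baremetal node manage "$node_uuid" || return 1
    openstack baremetal node provide "$node_uuid"
}

# wait_until_available NODE
# Polls the provisioning state until cleaning ends; there is no timeout here.
wait_until_available() {
    node=$1
    interval=${POLL_INTERVAL:-10}   # seconds between polls (assumed default)
    while :; do
        state=$(openstack baremetal node show "$node" -f value -c provision_state) || return 1
        case $state in
            available) return 0 ;;
            "clean failed") echo "cleaning failed for $node" >&2
                            return 1 ;;
        esac
        sleep "$interval"
    done
}
```

For example: register_node overcloud-2-node4 overcloud-2-node4.yaml && wait_until_available overcloud-2-node4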

If everything has gone well, the node will be registered and Ironic will clean its disk metadata (as per above configuration):

$ openstack baremetal node list -c Name -c "Power State" -c "Provisioning State"
+-------------------+-------------+--------------------+
| Name              | Power State | Provisioning State |
+-------------------+-------------+--------------------+
| overcloud-2-node4 | power off   | cleaning           |
+-------------------+-------------+--------------------+

Wait until the cleaning process has finished and then set the boot_option to local:

$ openstack baremetal node set $(openstack baremetal node show overcloud-2-node4 -f value -c uuid) --property 'capabilities=boot_option:local'

Start a bare metal instance

Just as with virtual instances, we’ll use an SSH key, and then we’ll start the instance with Ironic:

$ openstack keypair create --public-key ~/.ssh/id_rsa.pub stack-key

Then we make sure that the cleaning process has finished (“Provisioning State” is available):

$ openstack baremetal node list -c Name -c "Power State" -c "Provisioning State"
+-------------------+-------------+--------------------+
| Name              | Power State | Provisioning State |
+-------------------+-------------+--------------------+
| overcloud-2-node4 | power off   | available          |
+-------------------+-------------+--------------------+

And we start the baremetal instance:

$ openstack server create \
    --image overcloud-full \
    --flavor baremetal \
    --nic net-id=$(openstack network show baremetal -f value -c id) \
    --key-name stack-key \
    bm-instance-0

Now check its IP and access the newly created machine:

$ openstack server list -c Name -c Status -c Networks
+---------------+--------+-------------------------+
| Name          | Status | Networks                |
+---------------+--------+-------------------------+
| bm-instance-0 | ACTIVE | baremetal=192.168.3.157 |
+---------------+--------+-------------------------+
$ ssh cloud-user@192.168.3.157
Warning: Permanently added '192.168.3.157' (ECDSA) to the list of known hosts.
Last login: Sun Jan 15 07:49:37 2017 from gateway
[cloud-user@bm-instance-0 ~]$

Start a virtual instance

Optionally, we can start a virtual instance to test whether virtual and baremetal instances are able to reach each other.

As I need to create public and private networks, an image, a router, a security group, a floating IP, etc., I’ll use a Heat template that does it all for me, including creating the virtual instance, so I will skip the details of doing this:

$ openstack stack create -e overcloud-env.yaml -t overcloud-template.yaml overcloud-stack

Check that the networks and the instance have been created:

$ openstack network list -c Name
+----------------------------------------------------+
| Name                                               |
+----------------------------------------------------+
| public                                             |
| baremetal                                          |
| HA network tenant 1e6a7de837ad488d8beed626c86a6dfe |
| private-net                                        |
+----------------------------------------------------+
$ openstack server list -c Name -c Networks
+----------------------------------------+------------------------------------+
| Name                                   | Networks                           |
+----------------------------------------+------------------------------------+
| overcloud-stack-instance0-2thafsncdgli | private-net=172.16.2.6, 10.0.0.168 |
| bm-instance-0                          | baremetal=192.168.3.157            |
+----------------------------------------+------------------------------------+

We now have both instances and they can communicate over the network:

$ ssh [email protected]

Warning: Permanently added '10.0.0.168' (RSA) to the list of known hosts.
$ ping 192.168.3.157
PING 192.168.3.157 (192.168.3.157): 56 data bytes
64 bytes from 192.168.3.157: seq=0 ttl=62 time=1.573 ms
64 bytes from 192.168.3.157: seq=1 ttl=62 time=0.914 ms
64 bytes from 192.168.3.157: seq=2 ttl=62 time=1.064 ms
^C
--- 192.168.3.157 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.914/1.183/1.573 ms

This post first appeared on the Tricky Cloud blog.
