We’ve all seen the plethora of books, guides, articles and blogs on OpenStack installation, configuration, best practices and not-so-best practices. This series will not be one of them.

I don’t want to produce yet another installation guide. Rather, I want to share my experiences learning and understanding the OpenStack platform. (My fear is that, by the time I am done with these articles, they will sound like yet another guide, but I will try to make this series a bit more entertaining by adding my two cents where applicable.)

I believe that OpenStack is sometimes misunderstood. It’s not complicated; however, like all magnificent things, it is complex. So what do you do when you encounter something complex? You take a 180-degree turn and run as if your life depended on it! Just kidding.

However, if you’re like me and adore this nerdy misery in your life, you’ll try to make some sense of the complexity. You will never understand it in its entirety, and attempting to do so is futile. Rather, ask yourself what you want from it, then learn more about those aspects accordingly.

Before we jump in, let me introduce the main character of this series: OpenStack. What is it? Simply put, it’s a software platform that lets you embrace the goodies of the cloud revolution. In simpler terms, if you want to rent servers, VMs, containers, development platforms and middleware to customers and charge them for your services, OpenStack will allow you to do this. You may have other, more complicated questions, such as: Who are your customers? How are they paying? What are they getting? All of these will be answered in due time.

OpenStack has a great community that meets twice per year in wonderful locations across the globe, bringing the best brains of the business together to talk about technology and how it’s changing the world.

Like anything important in life, if you want something and you’re serious about it, you have to define your goals and do the groundwork. This platform is no exception. Right now, our goal is to perform a manual installation of OpenStack.

For the record: I think that all vendors that offer OpenStack are doing a wonderful job with their respective installers and, in a production environment, you may want to use them. Besides being automated and simple to set up, they also take care of high availability and upgrades, among other things. But if one day you have to troubleshoot an OpenStack environment (and believe me, you will), you will be thankful that you did a manual install in your lab and understand how things are configured. That’s precisely our goal here.

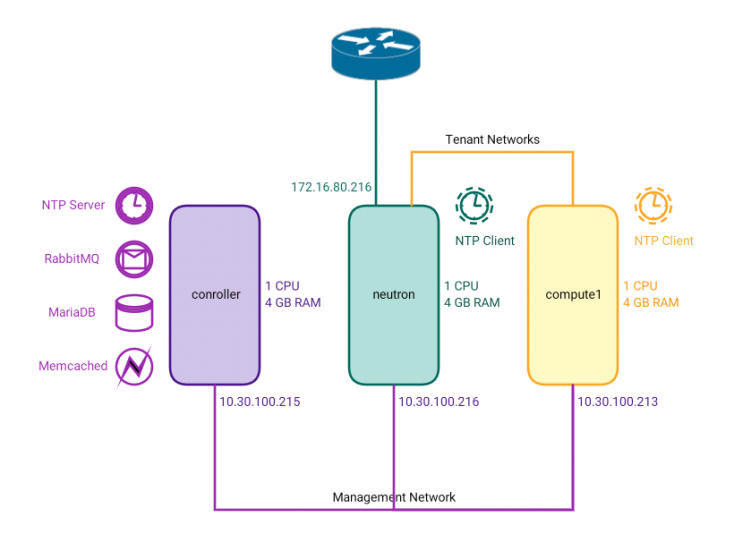

So let’s explore the neighborhood. Go through the diagram below:

OpenStack Base Environment

The diagram above shows where OpenStack will reside in our lab. Here are the details:

| Server 1 | controller |
| --- | --- |
| Comment | Here is where it keeps all the important stuff |
| Server OS | Ubuntu 16.04 |
| Resources | 1 CPU, 4GB RAM |
| IP Addresses | 10.30.100.215 |
| Technical Brief | This is the main hub that hosts most of the fundamental services that form OpenStack |

| Server 2 | neutron |
| --- | --- |
| Comment | This is how it interacts with the outside world |
| Server OS | Ubuntu 16.04 |
| Resources | 1 CPU, 4GB RAM |
| IP Addresses | 10.30.100.216, 172.16.80.216 |
| Technical Brief | This is the networking component that handles all IP-based communication for components and hosted guests |

| Server 3 | compute1 |
| --- | --- |
| Comment | This is where it entertains all its guests |
| Server OS | Ubuntu 16.04 |
| Resources | 1 CPU, 4GB RAM |
| IP Addresses | 10.30.100.213 |
| Technical Brief | This is the server that hosts the virtual machines rented to your customers |

| Network | Purpose |
| --- | --- |
| Management Network (inner voice) | Used for all internal communication within OpenStack, i.e. whenever one OpenStack component wants to talk to another. |
| Tenant Networks (chatty guests) | Used by customer virtual machines. When customers rent virtual machines, they can also be given networks of their own, in case they want to set up more than one machine and have those machines talk to each other. These customer networks run over this network. |
| External Network (let’s call overseas) | Used by OpenStack to talk to the outside world. Let’s say your customer’s machine needs internet access: this is the network its virtual machine uses to reach the internet. |

On each of my three servers, the /etc/hosts file looks like this:

A. Host file configuration: /etc/hosts

    # controller
    10.30.100.215 controller

    # compute1
    10.30.100.213 compute1

    # neutron
    10.30.100.216 neutron

Note that I am assigning “controller”, “compute1” and “neutron” as aliases. If you change these, make sure to use the changed references when you use them in the configuration files later.
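Since every configuration file later refers to these aliases, it can pay off to sanity-check the hosts file on each server. Here’s a minimal Python sketch of such a check, assuming the usual /etc/hosts layout (IP address first, then one or more names, with “#” starting a comment). The helper names parse_hosts and check_aliases and the EXPECTED mapping are hypothetical, for illustration only; they are not part of any OpenStack tool.

```python
"""Hypothetical sanity check: do the lab aliases resolve to the expected management IPs?"""

# Assumed alias -> management IP mapping, taken from the lab tables above.
EXPECTED = {
    "controller": "10.30.100.215",
    "compute1": "10.30.100.213",
    "neutron": "10.30.100.216",
}


def parse_hosts(text):
    """Return a dict mapping each hostname/alias to its IP address.

    Assumes the usual /etc/hosts layout: IP first, then names; '#' starts a comment.
    The first entry seen for a name is kept.
    """
    mapping = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)
    return mapping


def check_aliases(text, expected=EXPECTED):
    """Return a list of human-readable problems (empty if everything matches)."""
    found = parse_hosts(text)
    problems = []
    for alias, ip in expected.items():
        if alias not in found:
            problems.append(f"{alias}: missing")
        elif found[alias] != ip:
            problems.append(f"{alias}: expected {ip}, found {found[alias]}")
    return problems
```

On each server you could run check_aliases(open("/etc/hosts").read()); an empty list means all three aliases point where the tables above say they should.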

Networking has also given me some issues in the past, so below is a sample /etc/network/interfaces file for each of the servers:

B. Interface file configuration: /etc/network/interfaces

From this point on, whenever you see “@SERVERNAME,” it simply means that the configuration I am talking about needs to be done on that server. So “@controller” means, “please perform these configurations/installations on the controller server.”

@controller

    # This file describes the network interfaces available on your system
    # and how to activate them. For more information, see interfaces(5).
    source /etc/network/interfaces.d/*

    # The loopback network interface
    auto lo
    iface lo inet loopback

    # The management1 interface
    auto ens3
    iface ens3 inet static
    address 10.30.100.215
    netmask 255.255.255.0

@neutron

    # This file describes the network interfaces available on your system
    # and how to activate them. For more information, see interfaces(5).
    source /etc/network/interfaces.d/*

    # The loopback network interface
    auto lo
    iface lo inet loopback

    # The management1 interface
    auto ens3
    iface ens3 inet static
    address 10.30.100.216
    netmask 255.255.255.0

    # The Tunnel interface
    auto ens9
    iface ens9 inet manual
    up ifconfig ens9 up

    # The external interface
    auto ens10
    iface ens10 inet static
    address 172.16.80.216
    netmask 255.255.255.0
    gateway 172.16.80.254
    dns-nameservers 8.8.8.8

@compute1

    # This file describes the network interfaces available on your system
    # and how to activate them. For more information, see interfaces(5).
    source /etc/network/interfaces.d/*

    # The loopback network interface
    auto lo
    iface lo inet loopback

    # The management1 interface
    auto ens3
    iface ens3 inet static
    address 10.30.100.213
    netmask 255.255.255.0

    # The tunnel interface
    auto ens9
    iface ens9 inet manual
    up ifconfig ens9 up

I’m not going into details for each interface, but there is one thing to keep in mind: the tunnel interface in both the Neutron and compute nodes will not have any IP since it is used as a trunk for multiple tenant (or customer) networks in OpenStack. Also, if you are using VLAN tagging, make sure that this particular interface is untagged.
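A typo in these files tends to surface much later as a confusing OpenStack error, so here’s a minimal Python sketch that reads an interfaces file and checks two rules from this setup: the management interface (ens3 here) has a static address inside 10.30.100.0/24, and the tunnel interface (ens9) is “inet manual” with no IP. The parser is deliberately simplistic: it only understands the iface/address lines used above, not the full interfaces(5) grammar, and parse_interfaces and check_node are hypothetical names.

```python
import ipaddress

# Assumed management subnet for this lab (matches the addresses above).
MGMT_NET = ipaddress.ip_network("10.30.100.0/24")


def parse_interfaces(text):
    """Map each interface name to {'method': ..., 'address': ...}.

    Simplified: handles only 'iface NAME inet METHOD' stanzas and their
    'address X' option lines; other options (netmask, up, ...) are ignored.
    """
    ifaces = {}
    current = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # strip comments
        if not line:
            continue
        words = line.split()
        if words[0] == "iface" and len(words) >= 4:
            current = words[1]
            ifaces[current] = {"method": words[3], "address": None}
        elif words[0] in ("auto", "source", "allow-hotplug", "mapping"):
            current = None  # a new stanza ends the previous iface block
        elif current is not None and words[0] == "address" and len(words) > 1:
            ifaces[current]["address"] = words[1]
    return ifaces


def check_node(text, mgmt_if="ens3", tunnel_if=None):
    """Return a list of problems for one node's interfaces file."""
    ifaces = parse_interfaces(text)
    problems = []
    mgmt = ifaces.get(mgmt_if)
    if mgmt is None or mgmt["address"] is None:
        problems.append(f"{mgmt_if}: no static management address")
    elif ipaddress.ip_address(mgmt["address"]) not in MGMT_NET:
        problems.append(f"{mgmt_if}: {mgmt['address']} is outside {MGMT_NET}")
    if tunnel_if is not None:
        tunnel = ifaces.get(tunnel_if)
        if tunnel is None:
            problems.append(f"{tunnel_if}: not defined")
        elif tunnel["address"] is not None or tunnel["method"] != "manual":
            problems.append(f"{tunnel_if}: tunnel interface should be 'inet manual' with no IP")
    return problems
```

For example, check_node(open("/etc/network/interfaces").read(), tunnel_if="ens9") on neutron or compute1 should return an empty list if the file matches the samples above.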


C. NTP configuration:

NTP is the Network Time Protocol (we will use chrony, an NTP implementation). Since our protagonist (OpenStack) resides across three houses (servers), we need to make sure that the clocks in all these houses show the same time. What happens if they don’t? Ever gotten off a 15-hour flight? You are jet-lagged, completely out of sync with the local time, and useless until you have rested and your body has readjusted. Something similar happens to distributed software running across systems with out-of-sync clocks. The symptoms are sometimes quite erratic, and it is not always straightforward to pinpoint the problem. So set up NTP as described in the steps below.
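To make the jet-lag analogy a bit more concrete: an NTP client (chrony included) estimates how far its clock is off by timestamping one request/response exchange with a server. The Python sketch below applies the standard NTP offset and round-trip delay formulas to those four timestamps. chrony adds filtering and multiple samples on top of this, so treat it as the core arithmetic only; the example numbers are made up.

```python
def ntp_offset_and_delay(t0, t1, t2, t3):
    """Estimate clock offset and round-trip delay from one NTP exchange.

    t0: client send time     (client clock)
    t1: server receive time  (server clock)
    t2: server send time     (server clock)
    t3: client receive time  (client clock)

    A positive offset means the client clock is behind the server clock.
    The offset estimate assumes the outbound and return paths take equal time.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


# Made-up example: the client is 5 s behind the server and each network leg takes 1 s.
print(ntp_offset_and_delay(t0=0.0, t1=6.0, t2=6.0, t3=2.0))  # (5.0, 2.0)
```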

Configure @controller as the master clock:

Install the service:

    sudo apt install chrony

NOTE: using “sudo vi” means that I want you to edit the file. The indented lines that follow it are what need to be added or edited in the file.

Configure a global NTP server in the configuration file, and allow the management subnet to sync from this server:

    sudo vi /etc/chrony/chrony.conf
        server 0.asia.pool.ntp.org iburst
        allow 10.30.100.0/24

Start the NTP service:

    sudo service chrony restart

Configure @neutron and @compute1 to sync time with the controller:

Install the service:

    sudo apt install chrony

Configure the controller as the master NTP server in the configuration file (comment out all other pool or server entries):

    sudo vi /etc/chrony/chrony.conf
        server controller iburst

Start the NTP service:

    sudo service chrony restart

D. OpenStack packages

Since we are performing the install on an Ubuntu Linux system, we need to add the corresponding repository (the Ubuntu Cloud Archive) to get the OpenStack software. For the record, I’m working with the Newton release of OpenStack.


On @controller, @neutron and @compute1 perform the following configuration:

    sudo apt install software-properties-common
    sudo add-apt-repository cloud-archive:newton
    sudo apt update && sudo apt dist-upgrade
    sudo reboot  # (This seems to be a good idea to avoid surprises)
    sudo apt install python-openstackclient  # (Just the OpenStack client tools)

E. MariaDB

Like most distributed systems, OpenStack requires a database to store configuration and data. In our case, it resides on the controller and almost all components will access it. Do note that the base configuration of most components resides in configuration files; however, what you create inside OpenStack is saved in the database. Consider this the intellectual capital of the OpenStack environment. Perform the following steps on the @controller node:

Install the software:

    sudo apt install mariadb-server python-pymysql

Configure the database:

    sudo vi /etc/mysql/mariadb.conf.d/99-openstack.cnf
        [mysqld]
        bind-address = 10.30.100.215
        # The bind-address needs to be set to the Management IP of the controller node.
        default-storage-engine = innodb
        innodb_file_per_table
        max_connections = 4096
        collation-server = utf8_general_ci
        character-set-server = utf8

Restart the service:

    sudo service mysql restart

Secure the installation:

    sudo mysql_secure_installation

(Answer the questions that follow with information relevant to your environment.)

F. RabbitMQ

By now, you must have realized that OpenStack, our main character, is unusual. Its parts are not tightly knit together. Rather, it’s composed of a number of autonomous or semi-autonomous modules that perform their respective functions to form the greater whole. However, to do their respective parts, they need to communicate effectively and efficiently. In certain cases, a centralized message queuing system, RabbitMQ, is used to pass messages between components within OpenStack. Please do note that certain components sit on more than one system and perform different functions in different situations; we will leave that for a later episode. For now, the RabbitMQ configuration below is performed on the @controller node.
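To picture what “passing messages through a central queue” buys you, here’s a toy Python sketch that uses the standard library’s queue.Queue as a stand-in for RabbitMQ. It is not how OpenStack actually talks to RabbitMQ (that goes over the network via AMQP), and the two component functions are hypothetical; the sketch only shows the decoupling: the sender drops a message on the queue and moves on, and a separate worker picks it up when it is ready.

```python
import queue
import threading

# Stand-in for the central broker: both "components" only know about the queue.
broker = queue.Queue()
results = []


def api_service(requests):
    """Hypothetical front-end component: publishes work and does not wait for it."""
    for vm_name in requests:
        broker.put({"action": "boot", "vm": vm_name})
    broker.put(None)  # sentinel: no more messages


def compute_worker():
    """Hypothetical back-end component: consumes messages at its own pace."""
    while True:
        msg = broker.get()
        if msg is None:
            break
        results.append(f"{msg['action']}ed {msg['vm']}")  # pretend to do the work


worker = threading.Thread(target=compute_worker)
worker.start()
api_service(["web01", "db01"])
worker.join()
print(results)  # ['booted web01', 'booted db01']
```

Neither function calls the other; swap the in-process queue for a real broker and the two sides can live on different servers, which is the role RabbitMQ plays here.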

Install the software:

    sudo apt install rabbitmq-server

Create a master user. Replace “MINE_PASS” with your own password:

    sudo rabbitmqctl add_user openstack MINE_PASS

Allow full permissions for the user created above (the three patterns grant configure, write and read access, respectively):

    sudo rabbitmqctl set_permissions openstack ".*" ".*" ".*"

G. Memcached:

Memcached is an in-memory key-value cache. Honestly, I’m not an expert at this one, but I do know that it makes OpenStack’s web pages load a bit faster (one way of giving OpenStack a better short-term memory, I suppose).
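The “short-term memory” idea is the classic cache-aside pattern: look in the cache first, and only do the slow work (a database query, say) on a miss. The Python sketch below uses a plain dict with expiry times as a stand-in for memcached; with a real memcached client you would call its get and set methods instead. slow_lookup and cached_get are hypothetical names for illustration.

```python
import time

_cache = {}  # key -> (expires_at, value); stand-in for memcached
CALLS = {"slow": 0}  # counts how often the expensive path runs


def slow_lookup(key):
    """Hypothetical expensive operation, e.g. a database query."""
    CALLS["slow"] += 1
    return key.upper()


def cached_get(key, ttl=300, now=time.monotonic):
    """Cache-aside read: return a fresh cached value, otherwise recompute and store it."""
    entry = _cache.get(key)
    if entry is not None and entry[0] > now():
        return entry[1]  # cache hit
    value = slow_lookup(key)  # cache miss: do the slow work...
    _cache[key] = (now() + ttl, value)  # ...and remember it for ttl seconds
    return value


print(cached_get("project-list"), cached_get("project-list"), CALLS["slow"])
# PROJECT-LIST PROJECT-LIST 1
```

The second call never touches slow_lookup; that saved round trip is where the speed-up comes from.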

Install the software on the @controller node:

    sudo apt install memcached python-memcache

Configure the IP of the controller management interface in the “conf” file (replace the existing “-l 127.0.0.1” line):

    sudo vi /etc/memcached.conf
        -l 10.30.100.215

Start the service:

    sudo service memcached restart

RECAP:

- You now know the central character’s name.

- You now know its whereabouts.

- You now know what things it needs to survive and how to set them up, if you wanted one for yourself.

- You are now EXCITED for the next episode of these tutorials! (Hopefully.)

What we’ve achieved so far

If you’ve gotten this far, thanks for your patience. If you have any questions or comments, please leave them in the comments section below so that everyone can benefit from the discussion.

This post first appeared on the WhatCloud blog. Superuser is always interested in community content; get in touch by email.

Cover Photo // CC BY NC
