CPU pinning, also known as CPU affinity, is a feature in OpenStack that lets you control which physical CPU core each virtual CPU (vCPU) of an instance executes on. This is useful when you want an instance’s vCPUs to always run on the same physical cores, for example to optimize performance or for compatibility reasons. Pinning is requested through the Nova compute service (flavor extra specs plus compute-node configuration), and the libvirt driver applies it in the instance’s domain XML by listing the physical CPU core IDs to which each vCPU is pinned.

CPU topology

For the libvirt driver, you can define the topology of the processors in the virtual machine using flavor properties. The properties containing max (hw:cpu_max_sockets, hw:cpu_max_cores and hw:cpu_max_threads) limit the values that users can select through image properties.

$ openstack flavor set FLAVOR-NAME \
  --property hw:cpu_sockets=FLAVOR-SOCKETS \
  --property hw:cpu_cores=FLAVOR-CORES \
  --property hw:cpu_threads=FLAVOR-THREADS \
  --property hw:cpu_max_sockets=FLAVOR-SOCKETS \
  --property hw:cpu_max_cores=FLAVOR-CORES \
  --property hw:cpu_max_threads=FLAVOR-THREADS

Where:

- FLAVOR-SOCKETS: (integer) The number of sockets for the guest VM. By default, this is set to the number of vCPUs requested.

- FLAVOR-CORES: (integer) The number of cores per socket for the guest VM. By default, this is set to 1.

- FLAVOR-THREADS: (integer) The number of threads per core for the guest VM. By default, this is set to 1.
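Nova only builds guest topologies whose sockets × cores × threads product equals the flavor’s vCPU count, so a preference that does not multiply out cannot be honoured exactly. The shell sketch below does that arithmetic before you set the properties; check_topology is a hypothetical helper, not an OpenStack command.

```shell
# Check that a requested guest topology multiplies out to the flavor's
# vCPU count (sockets * cores * threads == vCPUs).
# Usage: check_topology VCPUS SOCKETS CORES THREADS
check_topology() {
  local vcpus="$1" sockets="$2" cores="$3" threads="$4" total
  total=$((sockets * cores * threads))
  if [ "$total" -eq "$vcpus" ]; then
    echo "ok: $sockets socket(s) x $cores core(s) x $threads thread(s) = $vcpus vCPUs"
  else
    echo "mismatch: $sockets x $cores x $threads = $total, but the flavor has $vcpus vCPUs" >&2
    return 1
  fi
}

# Example: a 4-vCPU flavor presented as 1 socket with 2 cores of 2 threads each.
check_topology 4 1 2 2
```

If the check passes, the matching (hypothetical 4-vCPU) flavor would be configured with:

$ openstack flavor set FLAVOR-NAME --property hw:cpu_sockets=1 --property hw:cpu_cores=2 --property hw:cpu_threads=2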

CPU pinning policy

For the libvirt driver, you can pin the virtual CPUs (vCPUs) of instances to the host’s physical CPU cores (pCPUs) using properties. You can further refine this by specifying how hardware CPU threads in a simultaneous multithreading (SMT) architecture should be used. These settings can improve per-instance determinism and performance.

SMT-based architectures include Intel processors with Hyper-Threading technology. In these architectures, processor cores share several components with one or more other cores. Cores in such architectures are commonly referred to as hardware threads, while the cores that a given core shares components with are known as thread siblings.

Host aggregates should be used to separate these pinned instances from unpinned instances as the latter will not respect the resourcing requirements of the former.

$ openstack flavor set FLAVOR-NAME \
  --property hw:cpu_policy=CPU-POLICY \
  --property hw:cpu_thread_policy=CPU-THREAD-POLICY

Valid CPU-POLICY values are:

- shared: (default) The guest vCPUs will be allowed to freely float across host pCPUs, albeit potentially constrained by NUMA policy.

- dedicated: The guest vCPUs will be strictly pinned to a set of host pCPUs. In the absence of an explicit vCPU topology request, the drivers typically expose all vCPUs as sockets with one core and one thread. When strict CPU pinning is in effect the guest CPU topology will be set up to match the topology of the CPUs to which it is pinned. This option implies an overcommit ratio of 1.0. For example, if a two vCPU guest is pinned to a single host core with two threads, then the guest will get a topology of one socket, one core and two threads.

Valid CPU-THREAD-POLICY values are:

- prefer: (default) The host may or may not have an SMT architecture. Where an SMT architecture is present, thread siblings are preferred.

- isolate: The host must not have an SMT architecture or must emulate a non-SMT architecture. If the host does not have an SMT architecture, each vCPU is placed on a different core as expected. If the host does have an SMT architecture (that is, one or more cores have thread siblings), then each vCPU is placed on a different physical core. No vCPUs from other guests are placed on the same core. All but one thread sibling on each utilized core is therefore guaranteed to be unusable.

- require: The host must have an SMT architecture. Each vCPU is allocated on thread siblings. If the host does not have an SMT architecture, then it is not used. If the host has an SMT architecture, but not enough cores with free thread siblings are available, then scheduling fails.

The hw:cpu_thread_policy option is only valid if hw:cpu_policy is set to dedicated.
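Whether require can ever be satisfied on a host, and whether isolate will cost you sibling threads, depends on the host exposing SMT. On Linux each logical CPU lists its thread siblings in /sys/devices/system/cpu/cpuN/topology/thread_siblings_list, so a short scan answers the question. This is a sketch: has_smt and the SYSFS_CPU override (used only so the function can be pointed at a fake tree) are hypothetical names.

```shell
# Report whether this host exposes SMT thread siblings.
# SYSFS_CPU is a hypothetical override; it defaults to the real sysfs path.
has_smt() {
  local root="${SYSFS_CPU:-/sys/devices/system/cpu}" f siblings
  for f in "$root"/cpu[0-9]*/topology/thread_siblings_list; do
    [ -r "$f" ] || continue
    siblings=$(cat "$f")
    # A sibling list such as "0,64" or "0-1" means the core has more than one thread.
    case $siblings in
      *,* | *-*) return 0 ;;
    esac
  done
  return 1
}

if has_smt; then
  echo "SMT thread siblings found"
else
  echo "no SMT thread siblings found: hw:cpu_thread_policy=require cannot be satisfied here"
fi
```

If no siblings are reported, flavors with hw:cpu_thread_policy=require will not be scheduled to that host.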

NUMA topology

For the libvirt driver, you can define the host NUMA placement for the instance vCPU threads as well as the allocation of instance vCPUs and memory from the host NUMA nodes. For flavors whose memory and vCPU allocations are larger than the size of NUMA nodes in the compute hosts, the definition of a NUMA topology allows hosts to better utilize NUMA and improve the performance of the instance OS.

$ openstack flavor set FLAVOR-NAME \
  --property hw:numa_nodes=FLAVOR-NODES \
  --property hw:numa_cpus.N=FLAVOR-CORES \
  --property hw:numa_mem.N=FLAVOR-MEMORY

Where:

- FLAVOR-NODES: (integer) The number of host NUMA nodes to restrict execution of instance vCPU threads to. If not specified, the vCPU threads can run on any number of the host NUMA nodes available.

- N: (integer) The instance NUMA node to apply a given CPU or memory configuration to, where N is in the range 0 to FLAVOR-NODES - 1.

- FLAVOR-CORES: (comma-separated list of integers) A list of instance vCPUs to map to instance NUMA node N. If not specified, vCPUs are evenly divided among available NUMA nodes.

- FLAVOR-MEMORY: (integer) The number of MB of instance memory to map to instance NUMA node N. If not specified, memory is evenly divided among available NUMA nodes.

hw:numa_cpus.N and hw:numa_mem.N are only valid if hw:numa_nodes is set. Additionally, they are only required if the instance’s NUMA nodes have an asymmetrical allocation of CPUs and RAM (important for some NFV workloads).
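For an asymmetric layout, the per-node CPU lists should together cover every vCPU of the flavor and the per-node memory values should add up to its RAM; as far as I know, Nova rejects layouts that do not. The sketch below assembles the properties for such a layout and checks those totals first. numa_props and its CPUS:MEM_MB argument format are hypothetical conventions for this example, and only comma-separated vCPU lists (no ranges) are handled.

```shell
# Build hw:numa_* properties for a guest NUMA layout and sanity-check them.
# Usage: numa_props VCPUS RAM_MB CPUS:MEM_MB [CPUS:MEM_MB ...]
# (one CPUS:MEM_MB pair per guest NUMA node, in node order)
numa_props() {
  local vcpus="$1" ram_mb="$2" node=0 cpu_total=0 mem_total=0 props="" spec cpus mem n
  shift 2
  for spec in "$@"; do
    cpus=${spec%%:*}
    mem=${spec##*:}
    # Count the vCPU ids in this node's comma-separated list.
    n=$(printf '%s\n' "$cpus" | awk -F, '{ print NF }')
    cpu_total=$((cpu_total + n))
    mem_total=$((mem_total + mem))
    props="$props --property hw:numa_cpus.$node=$cpus --property hw:numa_mem.$node=$mem"
    node=$((node + 1))
  done
  if [ "$cpu_total" -ne "$vcpus" ] || [ "$mem_total" -ne "$ram_mb" ]; then
    echo "layout covers $cpu_total vCPUs / $mem_total MB; flavor has $vcpus / $ram_mb" >&2
    return 1
  fi
  printf '%s\n' "--property hw:numa_nodes=$node$props"
}

# Example: 6 vCPUs and 8192 MB split as 2 vCPUs/2048 MB and 4 vCPUs/6144 MB.
numa_props 6 8192 0,1:2048 2,3,4,5:6144
```

Assuming FLAVOR-NAME really has 6 vCPUs and 8192 MB of RAM (hypothetical numbers), the output can be passed straight to the client:

$ openstack flavor set FLAVOR-NAME $(numa_props 6 8192 0,1:2048 2,3,4,5:6144)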

Now, let’s apply this to a real OpenStack deployment.

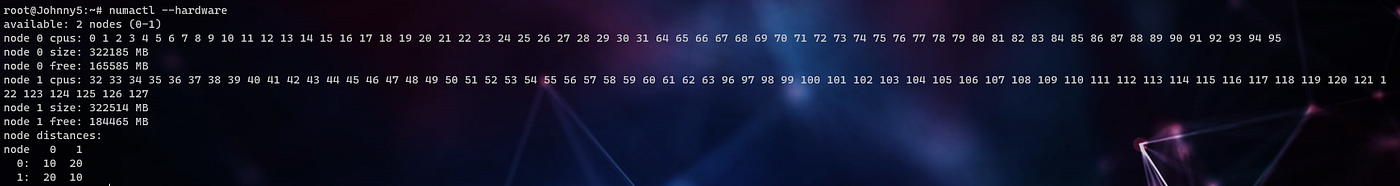

1. Check the Current NUMA Node Topology

Running numactl --hardware on the compute node shows the NUMA layout of its hardware:

root@Johnny5:~# numactl --hardware
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95
node 0 size: 322185 MB
node 0 free: 310411 MB
node 1 cpus: 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127
node 1 size: 322514 MB
node 1 free: 309682 MB
node distances:
node 0 1
0: 10 20
1: 20 10

The output tells me that this system has two NUMA nodes, node 0 and node 1. Each node has 64 CPU threads and roughly 315 GB of RAM (322185 MB and 322514 MB respectively) associated with it. The output also shows the relative “distances” between nodes: 10 for a node’s local memory and 20 for the other node’s memory. These values become important in more complex NUMA topologies with different interconnect layouts connecting the nodes.

In other words, the logical CPUs are split across the two nodes as follows:

- NUMA node0 CPU(s): 0-31,64-95

- NUMA node1 CPU(s): 32-63,96-127
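The range notation above can be derived mechanically from the numactl output. This awk-based sketch (numa_cpu_ranges is a hypothetical name) reads numactl --hardware output on stdin and collapses each node’s CPU list into runs of consecutive IDs.

```shell
# Condense the "node N cpus:" lines of `numactl --hardware` (read on stdin)
# into range notation such as 0-31,64-95.
numa_cpu_ranges() {
  awk '/^node [0-9]+ cpus:/ && NF >= 4 {
    out = ""; start = prev = $4
    for (i = 5; i <= NF; i++) {
      if ($i == prev + 1) { prev = $i; continue }
      # Gap found: close the current run and start a new one here.
      out = out (out == "" ? "" : ",") (start == prev ? start : start "-" prev)
      start = prev = $i
    }
    out = out (out == "" ? "" : ",") (start == prev ? start : start "-" prev)
    print "node" $2 ": " out
  }'
}
```

On the host above this should print:

$ numactl --hardware | numa_cpu_ranges
node0: 0-31,64-95
node1: 32-63,96-127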

We need to do two things:

- Dedicate some cores to host processes (the hypervisor)

- Distribute the remaining cores to guests for CPU pinning
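Whatever split you choose, the host keeps every CPU that is not handed to guests. The sketch below computes that complement from two CPU lists. expand_cpulist, compress_cpulist and cpulist_minus are hypothetical helpers written for this example, and the lists are assumed to be given in ascending order.

```shell
# Expand a CPU list such as "0-3,8" into one CPU id per line.
expand_cpulist() {
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F- 'NF == 2 { for (c = $1; c <= $2; c++) print c; next }
             NF == 1 && $1 != "" { print $1 }'
}

# Collapse ascending CPU ids (one per line on stdin) into "a-b,c" form.
compress_cpulist() {
  awk 'NR == 1 { start = prev = $1; next }
       $1 == prev + 1 { prev = $1; next }
       { out = out sep (start == prev ? start : start "-" prev); sep = ","; start = prev = $1 }
       END { if (NR) print out sep (start == prev ? start : start "-" prev) }'
}

# Print the CPUs in list $1 that are not in list $2, in compact form.
cpulist_minus() {
  { expand_cpulist "$2" | sed 's/^/pinned /'; expand_cpulist "$1" | sed 's/^/all /'; } |
    awk '$1 == "pinned" { seen[$2] = 1; next } !($2 in seen) { print $2 }' |
    compress_cpulist
}

# All 128 CPUs of the example host minus the ones handed to guests.
cpulist_minus 0-127 0-31
```

With guests on 0-31 (the vcpu_pin_set chosen in the next step), this prints 32-127, the CPUs left for host processes.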

2. Marking the Cores for Pinning

On each compute node where pinned virtual machines will be permitted, open the /etc/nova/nova.conf file and make the following changes:

Set vcpu_pin_set to a list or range of physical CPU cores to reserve for virtual machine processes. OpenStack Compute will ensure guest virtual machine instances are pinned to these CPU cores. On my example host I will give guests CPUs 0-31, which all belong to NUMA node 0, and leave the remaining CPUs to the host. You can also specify several ranges, e.g. 0-31,32-63:

vcpu_pin_set=0-31

Set the reserved_host_memory_mb to reserve RAM for host processes. For the purposes of testing, I am going to use the default of 512 MB:

reserved_host_memory_mb=512

Restart the Nova compute service:

$ systemctl restart openstack-nova-compute.service

At this point we have told nova-compute which cores to use for pinning.
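A CPU list pasted from a web page can silently pick up typographic dashes instead of plain hyphens, so it is worth reading the value back and checking its syntax. The sketch below does both. conf_default_value and valid_cpulist are hypothetical helpers: the parser only looks at the [DEFAULT] section, and valid_cpulist does not accept the ^N exclusion syntax that vcpu_pin_set also supports. On Train and later releases vcpu_pin_set is deprecated in favour of cpu_dedicated_set in the [compute] section.

```shell
# Print the value of key $2 from the [DEFAULT] section of ini file $1.
conf_default_value() {
  awk -v key="$2" '
    /^[ \t]*\[/ { in_default = ($0 ~ /^[ \t]*\[DEFAULT\]/); next }
    in_default && $0 !~ /^[ \t]*[#;]/ {
      eq = index($0, "=")
      if (eq == 0) next
      k = substr($0, 1, eq - 1); v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k); gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }' "$1"
}

# Succeed only for lists made of plain ASCII ids and ranges, e.g. 0-31,64-95.
valid_cpulist() {
  printf '%s\n' "$1" | grep -Eq '^[0-9]+(-[0-9]+)?(,[0-9]+(-[0-9]+)?)*$'
}

valid_cpulist "0-31" && echo "0-31 is a valid CPU list"
```

On the compute node:

$ valid_cpulist "$(conf_default_value /etc/nova/nova.conf vcpu_pin_set)" && echo ok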

3. Isolating the Pinned Cores from Host Processes

Next, we need to keep the host’s own processes off these cores so that they do not compete with guest vCPUs. We do that by isolating them with the isolcpus kernel parameter, which is passed to the kernel through the boot loader (GRUB).

On the Red Hat Enterprise Linux 7 systems used in this example, this is done using grubby to edit the configuration:

$ grubby --update-kernel=ALL --args="isolcpus=0-31"

We must then run grub2-install <device> to update the boot record. Be sure to specify the correct boot device for your system! In my case the correct device is /dev/sda:

$ grub2-install /dev/sda

The resulting kernel command line used for future boots of the system to isolate cores 0 to 31 will look similar to this:

linux16 /vmlinuz-3.10.0-229.1.2.el7.x86_64 root=/dev/mapper/rhel-root ro rd.lvm.lv=rhel/root crashkernel=auto rd.lvm.lv=rhel/swap vconsole.font=latarcyrheb-sun16 vconsole.keymap=us rhgb quiet LANG=en_US.UTF-8 isolcpus=0-31

These are the same cores listed in vcpu_pin_set, i.e. the cores we want guest virtual machine instances to be pinned to. After running grub2-install, reboot the system to pick up the configuration changes.
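After the reboot, the running kernel’s command line is available in /proc/cmdline, so you can confirm that isolcpus took effect and matches vcpu_pin_set. isolcpus_from_cmdline below is a hypothetical helper; newer kernels also accept flags in the value (for example isolcpus=domain,0-31), which it returns unchanged.

```shell
# Print the isolcpus= value from a kernel command line string ($1).
# Returns non-zero if the parameter is absent.
isolcpus_from_cmdline() {
  local arg
  for arg in $1; do
    case $arg in
      isolcpus=*) printf '%s\n' "${arg#isolcpus=}"; return 0 ;;
    esac
  done
  return 1
}
```

For the configuration above this should print the same list as vcpu_pin_set:

$ isolcpus_from_cmdline "$(cat /proc/cmdline)"
0-31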

4. Configuring the Nova Scheduler

On each node where the OpenStack Compute Scheduler (openstack-nova-scheduler) runs, edit /etc/nova/nova.conf. Add the AggregateInstanceExtraSpecsFilter and NUMATopologyFilter values to the list of scheduler_default_filters. These filters are used to separate the compute nodes that can be used for CPU pinning from those that cannot, and to apply NUMA-aware scheduling rules when launching instances:

scheduler_default_filters=RetryFilter,AvailabilityZoneFilter,RamFilter,ComputeFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter,CoreFilter,NUMATopologyFilter,AggregateInstanceExtraSpecsFilter

Restart the Scheduler:

$ systemctl restart openstack-nova-scheduler.service
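A misspelled filter name is easy to miss (note the plural Specs in AggregateInstanceExtraSpecsFilter). This sketch reports which of the two filters this setup depends on are absent from a comma-separated filter list; missing_filters is a hypothetical helper. In later releases the option moved to enabled_filters in the [filter_scheduler] section, so read it from there on newer deployments.

```shell
# Print each required filter that is missing from the comma-separated list $1.
# Spaces after commas are tolerated; no output means nothing is missing.
missing_filters() {
  local enabled f
  enabled=",$(printf '%s' "$1" | tr -d ' '),"
  for f in NUMATopologyFilter AggregateInstanceExtraSpecsFilter; do
    case $enabled in
      *",$f,"*) ;;
      *) printf '%s\n' "$f" ;;
    esac
  done
}
```

On the scheduler node:

$ missing_filters "$(grep '^scheduler_default_filters' /etc/nova/nova.conf | cut -d= -f2)"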

5. Creating Aggregates and Flavors, and Finalizing the Configuration

Host aggregates can be regarded as a mechanism to further partition an availability zone; while availability zones are visible to users, host aggregates are only visible to administrators. Host aggregates started out as a way to use Xen hypervisor resource pools, but have been generalized to provide a mechanism that allows administrators to assign key-value pairs to groups of machines. Each node can have multiple aggregates, each aggregate can have multiple key-value pairs, and the same key-value pair can be assigned to multiple aggregates. This information can be used in the scheduler to enable advanced scheduling, to set up Xen hypervisor resource pools or to define logical groups for migration.

$ nova aggregate-create performance
+----+-------------+-------------------+-------+----------+
| Id | Name        | Availability Zone | Hosts | Metadata |
+----+-------------+-------------------+-------+----------+
| 1  | performance | -                 |       |          |
+----+-------------+-------------------+-------+----------+

Set metadata on the performance aggregate; it will be used to match the flavor we create shortly. Here we use the arbitrary key pinned and set it to true:

$ nova aggregate-set-metadata 1 pinned=true
Metadata has been successfully updated for aggregate 1.
+----+-------------+-------------------+-------+---------------+
| Id | Name        | Availability Zone | Hosts | Metadata      |
+----+-------------+-------------------+-------+---------------+
| 1  | performance | -                 |       | 'pinned=true' |
+----+-------------+-------------------+-------+---------------+

Create the normal aggregate for all other hosts:

$ nova aggregate-create normal
+----+--------+-------------------+-------+----------+
| Id | Name   | Availability Zone | Hosts | Metadata |
+----+--------+-------------------+-------+----------+
| 2  | normal | -                 |       |          |
+----+--------+-------------------+-------+----------+

Set metadata on the normal aggregate; it will be used to match all existing ‘normal’ flavors. Here we use the same key as before and set it to false:

$ nova aggregate-set-metadata 2 pinned=false
Metadata has been successfully updated for aggregate 2.
+----+--------+-------------------+-------+----------------+
| Id | Name   | Availability Zone | Hosts | Metadata       |
+----+--------+-------------------+-------+----------------+
| 2  | normal | -                 |       | 'pinned=false' |
+----+--------+-------------------+-------+----------------+

Before creating the new flavor for performance-intensive instances, update all existing flavors so that their extra specifications match them to the compute hosts in the normal aggregate:

$ for FLAVOR in $(nova flavor-list | cut -f 2 -d ' ' | grep -o '[0-9]*'); \
  do nova flavor-key "${FLAVOR}" set \
  "aggregate_instance_extra_specs:pinned"="false"; \
  done
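The loop above scrapes digits out of the nova flavor-list table, which breaks for flavors whose IDs are not purely numeric (UUIDs, for example). The unified openstack client can return the ID column directly with -f value -c ID. The sketch below turns those IDs into openstack flavor set commands and prints them for review instead of running them; flavor_update_cmds is a hypothetical helper.

```shell
# Read flavor IDs on stdin and print one "openstack flavor set" command per ID,
# setting aggregate_instance_extra_specs:pinned to $1. Nothing is executed.
flavor_update_cmds() {
  local value="$1" id
  while IFS= read -r id; do
    [ -n "$id" ] || continue
    printf "openstack flavor set --property aggregate_instance_extra_specs:pinned=%s '%s'\n" "$value" "$id"
  done
}
```

Generate the commands, check them, and append | sh once they look right:

$ openstack flavor list -f value -c ID | flavor_update_cmds false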

Create a new flavor for performance-intensive instances. Here we create the m1.small.performance flavor, based on the values used in the existing m1.small flavor. Any difference in behaviour between the two will come from the metadata we add to the new flavor shortly.

$ nova flavor-create m1.small.performance 6 2048 20 2
+----+----------------------+-----------+------+-----------+------+-------+
| ID | Name                 | Memory_MB | Disk | Ephemeral | Swap | VCPUs |
+----+----------------------+-----------+------+-----------+------+-------+
| 6  | m1.small.performance | 2048      | 20   | 0         |      | 2     |
+----+----------------------+-----------+------+-----------+------+-------+

Set the hw:cpu_policy flavor extra specification to dedicated. This means that all instances created with this flavor require dedicated compute resources and are pinned accordingly:

$ nova flavor-key 6 set hw:cpu_policy=dedicated

Set the aggregate_instance_extra_specs:pinned flavor extra specification to true. This denotes that all instances created using this flavor will be sent to hosts in host aggregates with pinned=true in their aggregate metadata:

$ nova flavor-key 6 set aggregate_instance_extra_specs:pinned=true

Finally, we must add some hosts to our performance host aggregate. Hosts that are not intended to be targets for pinned instances should be added to the normal host aggregate:

$ nova aggregate-add-host 1 compute1.nova
Host compute1.nova has been successfully added for aggregate 1
+----+-------------+-------------------+----------------+---------------+
| Id | Name        | Availability Zone | Hosts          | Metadata      |
+----+-------------+-------------------+----------------+---------------+
| 1  | performance | -                 | 'compute1.nova'| 'pinned=true' |
+----+-------------+-------------------+----------------+---------------+

$ nova aggregate-add-host 2 compute2.nova
Host compute2.nova has been successfully added for aggregate 2
+----+-------------+-------------------+----------------+---------------+
| Id | Name        | Availability Zone | Hosts          | Metadata      |
+----+-------------+-------------------+----------------+---------------+
| 2  | normal      | -                 | 'compute2.nova'| 'pinned=false'|
+----+-------------+-------------------+----------------+---------------+

6. Creating an Instance

$ nova boot --image rhel-guest-image-7.1-20150224 \
  --flavor m1.small.performance test-instance

Assuming the instance launches, we can see where it was placed by checking the OS-EXT-SRV-ATTR:hypervisor_hostname attribute in the output of nova show:

$ nova show test-instance
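If you prefer to script this step, the hypervisor name can be pulled out of the nova show table with awk. nova_show_attr is a hypothetical helper that reads the table on stdin; with the unified client, openstack server show test-instance -f value -c OS-EXT-SRV-ATTR:hypervisor_hostname should return the value directly.

```shell
# Print the Value column for the Property named $1 from a `nova show`
# ASCII table read on stdin.
nova_show_attr() {
  awk -F'[|]' -v key="$1" '
    { k = $2; gsub(/^[ \t]+|[ \t]+$/, "", k) }
    k == key { v = $3; gsub(/^[ \t]+|[ \t]+$/, "", v); print v; exit }'
}
```

For example:

$ nova show test-instance | nova_show_attr OS-EXT-SRV-ATTR:hypervisor_hostname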

After logging in to that hypervisor over SSH, we can use the virsh tool, which is part of libvirt, to dump the XML of the running guest. It should look something like this:

$ virsh list
Id Name State
----------------------------------------------------
1 instance-00000001 running

$ virsh dumpxml instance-00000001
...
<vcpu placement='static'>2</vcpu>
...
<cputune>
  <vcpupin vcpu='0' cpuset='2'/>
  <vcpupin vcpu='1' cpuset='3'/>
  <emulatorpin cpuset='2-3'/>
</cputune>
...
<numatune>
  <memory mode='strict' nodeset='0'/>
  <memnode cellid='0' mode='strict' nodeset='0'/>
</numatune>
...

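As you can see, the cputune element pins vCPU 0 to host CPU 2 and vCPU 1 to host CPU 3, both within the vcpu_pin_set range of 0-31, and pins the emulator threads to the same two CPUs (emulatorpin cpuset='2-3').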

The numatune element and its memory and memnode children have also been added; in this case they ensure the guest memory is taken strictly from host NUMA node 0.
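Rather than reading the XML by eye, you can check that every vcpupin entry falls inside the CPUs reserved for guests. The sketch below parses the vcpupin elements of virsh dumpxml output and reports any pin outside a given CPU list. check_vcpupins and expand_cpulist are hypothetical helpers, and each vcpupin element is assumed to sit on its own line, as in the output above. virsh vcpupin instance-00000001 shows the same affinity information for the running domain.

```shell
# Expand a CPU list such as "0-3,8" into one CPU id per line.
expand_cpulist() {
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F- 'NF == 2 { for (c = $1; c <= $2; c++) print c; next }
             NF == 1 && $1 != "" { print $1 }'
}

# Read `virsh dumpxml` output on stdin, print each vCPU pin, and return
# non-zero if any pinned host CPU lies outside the CPU list $1.
check_vcpupins() {
  local allowed pins pin vcpu cpuset cpu bad=0
  allowed=" $(expand_cpulist "$1" | tr '\n' ' ')"
  pins=$(sed -n "s/.*<vcpupin vcpu='\([0-9]*\)' cpuset='\([^']*\)'.*/\1:\2/p")
  for pin in $pins; do
    vcpu=${pin%%:*}
    cpuset=${pin#*:}
    for cpu in $(expand_cpulist "$cpuset"); do
      case $allowed in
        *" $cpu "*) ;;
        *) echo "vCPU $vcpu is pinned to host CPU $cpu, outside $1" >&2; bad=1 ;;
      esac
    done
    echo "vCPU $vcpu -> host CPU(s) $cpuset"
  done
  return "$bad"
}
```

For the guest above:

$ virsh dumpxml instance-00000001 | check_vcpupins 0-31
vCPU 0 -> host CPU(s) 2
vCPU 1 -> host CPU(s) 3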

The cpu element contains updated information about the NUMA topology exposed to the guest itself, the topology that the guest operating system will see:

<cpu>
  <topology sockets='2' cores='1' threads='1'/>
  <numa>
    <cell id='0' cpus='0-1' memory='2097152'/>
  </numa>
</cpu>
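The guest topology adds up: 2 sockets × 1 core × 1 thread gives the flavor’s 2 vCPUs, and the cell memory is expressed in KiB, so 2097152 / 1024 = 2048 MB, exactly the RAM of m1.small.performance. The sketch below automates the memory half of that check; guest_numa_mem_mb is a hypothetical helper and assumes the cell memory values are in KiB, libvirt’s default unit.

```shell
# Sum the memory='...' attributes of the guest NUMA <cell> elements in
# `virsh dumpxml` output (stdin). Values are assumed to be in KiB, libvirt's
# default unit, and the total is printed in MB.
guest_numa_mem_mb() {
  sed -n "s/.*<cell [^>]*memory='\([0-9]*\)'.*/\1/p" |
    awk '{ kib += $1 } END { print kib / 1024 }'
}
```

For the guest above this should print the flavor’s RAM:

$ virsh dumpxml instance-00000001 | guest_numa_mem_mb
2048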
