In under 20 minutes, Intel’s Mahati Chamarthy offers a deep dive into Ceph’s object storage system. The object storage system allows users to mount Ceph as a thin-provisioned block device known as the RADOS Block Device (RBD). Chamarthy, a cloud software engineer who previously contributed to Swift and is an active contributor to Ceph, delves into RBD, its design and its features in this talk at the recent Vault ’19 event.

Meet RBD images

Ceph is software-defined storage designed to scale services horizontally. That means there’s no single point of failure, and object, block and file storage are available in one unified system. RBD is the software that facilitates the storage of block-based data in Ceph distributed storage. RBD images are thin-provisioned, resizable images that store data by striping it across multiple OSDs in a Ceph cluster. RBD offers two libraries: one is the user-space library, librbd, typically used in virtual machines, and the other is a kernel module used in container and bare-metal environments.
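To make the striping idea concrete, here is a minimal sketch of how a byte offset inside an image could be mapped to a backing object. It is not librbd code: the function names `locate` and `object_name` and the image id `abc123` are hypothetical, the 4 MiB object size is an assumed default, and the `rbd_data.<id>.<hex object number>` naming is assumed for format 2 images.

```python
MiB = 1024 * 1024


def locate(offset, object_size=4 * MiB, stripe_unit=None, stripe_count=1):
    """Map an image byte offset to (object number, offset inside that object).

    Assumption: with no custom striping, the stripe unit equals the object
    size and the stripe count is 1, so objects are filled one after another.
    """
    if stripe_unit is None:
        stripe_unit = object_size
    units_per_object = object_size // stripe_unit

    block_no = offset // stripe_unit          # which stripe unit overall
    stripe_no = block_no // stripe_count      # which row of stripe units
    stripe_pos = block_no % stripe_count      # which object within the set
    object_set = stripe_no // units_per_object
    object_no = object_set * stripe_count + stripe_pos

    # Position of this stripe unit inside its object, plus the remainder.
    offset_in_object = (stripe_no % units_per_object) * stripe_unit
    offset_in_object += offset % stripe_unit
    return object_no, offset_in_object


def object_name(image_id, object_no):
    """Build a data object name (assumed naming scheme, hypothetical id)."""
    return f"rbd_data.{image_id}.{object_no:016x}"


if __name__ == "__main__":
    obj, off = locate(5 * MiB)
    print(object_name("abc123", obj), off)
```

Because the image is thin-provisioned, an object only has to exist once something has been written to the range it covers. Each object is then placed on OSDs independently, which is what spreads a single image across the cluster.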

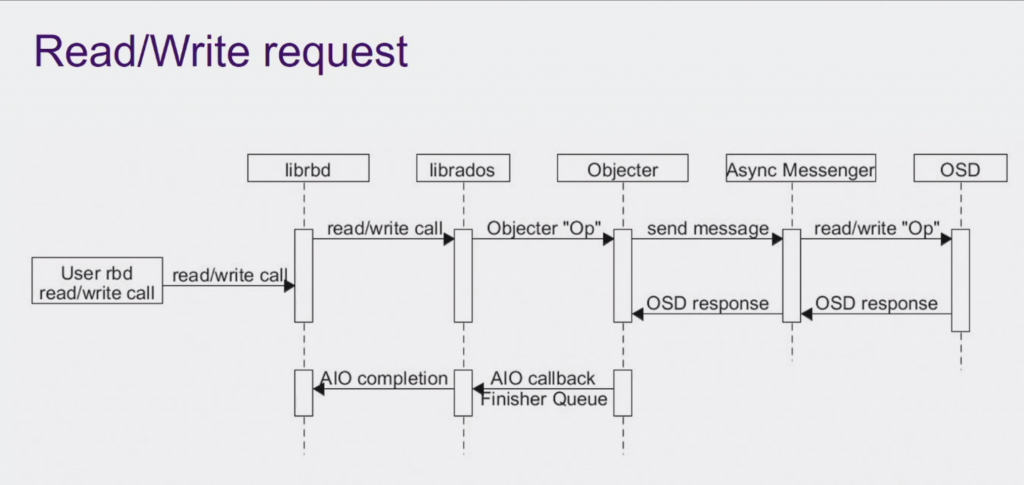

Here’s a look at a somewhat simplified sample flow for a read/write request:

Features

By default, Ceph enables striping and layering for users. Other useful features include exclusive lock, object map (tracks where the data resides, speeding up I/O operations as well as importing and exporting), fast diff (an object map property that helps generate diffs between snapshots) and deep flatten (resolves issues with snapshots taken from clone images).
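The object map and fast diff ideas can be illustrated with a toy model. This is a simplified sketch, not Ceph’s actual data structures: the `ToyImage` class, its methods and the three states are assumptions made for illustration. Each object carries a small state flag, so finding what changed between snapshots means scanning flags rather than reading data.

```python
from enum import Enum


class State(Enum):
    NONEXISTENT = 0   # never written, nothing stored (thin provisioning)
    EXISTS = 1        # written since the previous snapshot
    EXISTS_CLEAN = 2  # present, but unchanged since the previous snapshot


class ToyImage:
    """Hypothetical image that tracks one state flag per backing object."""

    def __init__(self, num_objects):
        self.object_map = [State.NONEXISTENT] * num_objects
        self.snapshots = {}

    def write(self, object_no):
        # Any write marks the object as dirty relative to the last snapshot.
        self.object_map[object_no] = State.EXISTS

    def snapshot(self, name):
        # Freeze a copy of the map, then mark dirty objects as clean.
        self.snapshots[name] = list(self.object_map)
        self.object_map = [
            State.EXISTS_CLEAN if s is State.EXISTS else s
            for s in self.object_map
        ]

    def existing_objects(self):
        # An export only needs to visit these objects.
        return [i for i, s in enumerate(self.object_map)
                if s is not State.NONEXISTENT]

    def fast_diff(self, name):
        # Objects written between the previous snapshot and `name`.
        return [i for i, s in enumerate(self.snapshots[name])
                if s is State.EXISTS]


if __name__ == "__main__":
    img = ToyImage(8)
    img.write(0)
    img.write(3)
    img.snapshot("s1")
    img.write(3)
    img.write(5)
    img.snapshot("s2")
    print(img.fast_diff("s2"))       # [3, 5]
    print(img.existing_objects())    # [0, 3, 5]
```

In this model the cost of a diff or an export depends on the number of objects in the map, not on the amount of data stored, which is the kind of speed-up the feature list describes.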

RBD has two image formats:

Mirroring (available per pool and per image; relies on the journaling and exclusive_lock features)

In-memory librbd cache (other caches, RO and RWL, are works in progress)

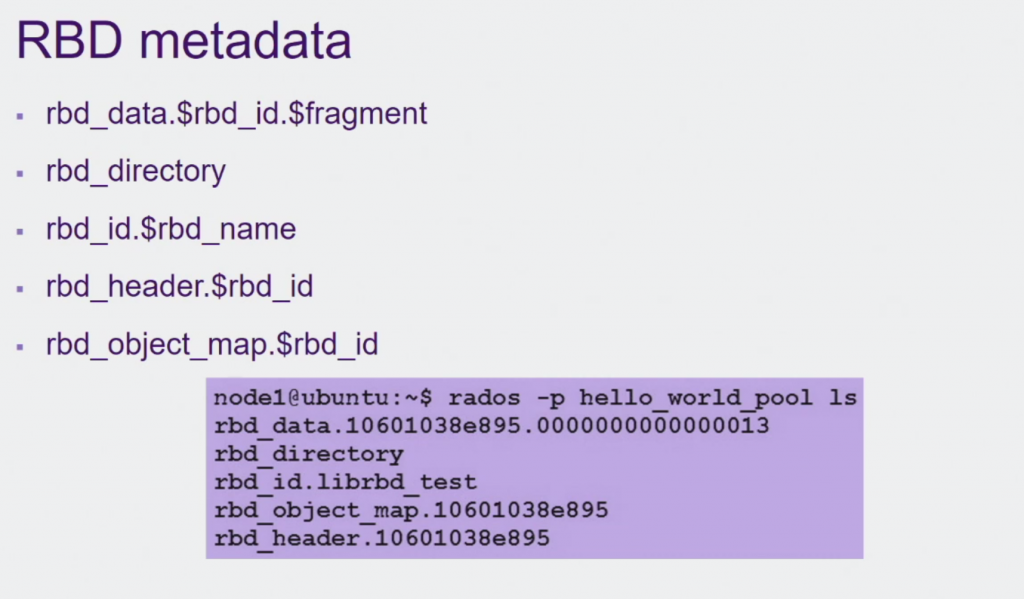

Here’s a look at what gets created with an RBD image, with more details here.

Chamarthy also goes over the details of striping, how snapshots work, layering and its use cases, and RBD with libvirt/QEMU, as well as how to configure them with virtual machines. Check out the full video here.

Get involved

For more on Ceph, check out the code, join the IRC channels and mailing lists or peruse the documentation.

The Ceph community is participating at the upcoming Open Infrastructure Summit – check out the full list of sessions ranging from “Ceph and OpenStack better together” to “Storage 101: Rook and Ceph.”
