Learn how to run a Kubernetes cluster to deploy a simple application server running WordPress in this tutorial by Omar Khedher.

Kubernetes in OpenStack

Kubernetes is a container deployment and management platform that aims to strengthen Linux container orchestration. Its growth draws on a long journey: Google led its development for several years before offering it to the open source community, where it became one of the fastest-growing container-based application platforms. Kubernetes is packed with features, including scaling, automated deployment, and resource management across clusters of hosts.

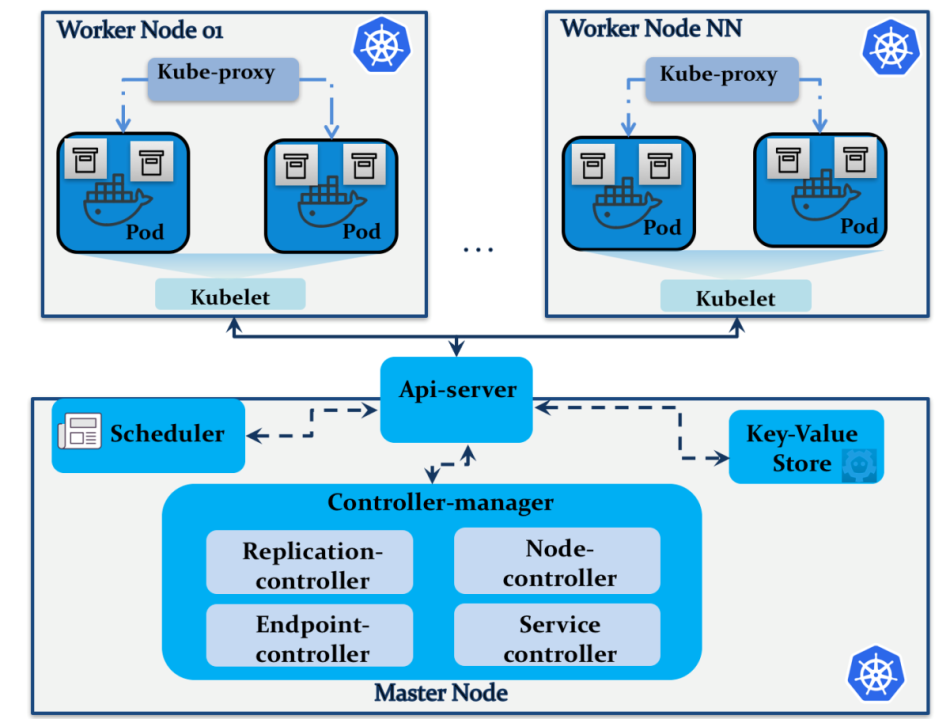

Magnum makes Kubernetes available in the OpenStack ecosystem. As with Swarm, users can use the Magnum API to manage and operate Kubernetes clusters, objects, and services. The following is a summary of the major components of the Kubernetes architecture.

The Kubernetes architecture is modular and exposes several services that can be spread across multiple nodes. Unlike Swarm, Kubernetes uses its own terminology:

- Pods: A collection of containers forming the application unit and sharing the networking configuration, namespaces, and storage

- Service: A Kubernetes abstraction layer exposing a set of pods as a service, typically, through a load balancer
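To make the two terms concrete, here is a minimal sketch that prints a manifest for a hypothetical demo application: one pod whose two containers share the pod's network namespace and an emptyDir volume, and one service that selects that pod by its app: demo label. The function name print_demo_manifest, the object names, and the nginx and busybox images are illustrative assumptions, not part of the tutorial's setup.

```shell
# Hypothetical helper: prints a Pod and a Service manifest for an app called "demo".
print_demo_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo
  labels:
    app: demo              # the Service below selects pods by this label
spec:
  volumes:
    - name: shared-data    # storage shared by both containers in the pod
      emptyDir: {}
  containers:
    - name: web
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html
    - name: content
      image: busybox
      # Writes the page that the web container serves from the shared volume
      command: ["sh", "-c", "while true; do date > /data/index.html; sleep 5; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
---
apiVersion: v1
kind: Service
metadata:
  name: demo
spec:
  selector:
    app: demo
  ports:
    - port: 80
      targetPort: 80
EOF
}
```

On a configured cluster, the output could be applied with print_demo_manifest | kubectl apply -f -.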

From an architectural perspective, Kubernetes essentially defines the following components:

- Master node: This controls and orchestrates the Kubernetes cluster. A master node can run the following services:

  - API server: This provides the API endpoints that process RESTful calls to control and manage the cluster.

  - Controller manager: This embeds several management services, including:

    - Replication controller: This maintains the desired number of pod replicas in the cluster, creating pods to replace failed ones and removing surplus ones.

    - Endpoint controller: This populates the cluster endpoints that join services to the pods backing them.

    - Node controller: This manages node initialization and discovery information with the cloud provider, and responds when nodes become unavailable.

    - Service controller: This maintains the backends of Kubernetes services running behind load balancers, configuring the load balancers whenever the service state changes.

  - Scheduler: This decides on which worker node each pod should run. Based on the nodes' available resource capacity, the scheduler determines whether the pods of a service run on the same node or are spread across different ones.

  - Key-value store: This stores REST API objects such as node and pod states, scheduled jobs, service deployment information, and namespaces. Kubernetes uses etcd as its main key-value store to share configuration information across the cluster.

- Worker node: This provides the runtime environment for pods and their containers. Each worker node runs the following components:

  - Kubelet: This is the primary node agent; it takes care of the containers running in their associated pods and periodically reports the health status of pods and nodes to the master node.

  - Docker: This is the default container runtime engine used by Kubernetes.

  - Kube-proxy: This is a network proxy that forwards requests to the right container, routing traffic across the pods that belong to the same service.
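On a running cluster, most of these components can be observed from the command line. The sketch below assumes kubectl is already configured for the cluster; it prints control-plane health, the nodes registered by their kubelets, and, in the NODE column of the pod listing, where the scheduler placed each pod. The helper name show_cluster_components is hypothetical, and the componentstatuses resource, served by the Kubernetes releases of that era, is deprecated in recent versions.

```shell
# Hypothetical helper: one-shot overview of the components described above.
show_cluster_components() {
  echo "== Control plane (scheduler, controller manager, etcd) =="
  kubectl get componentstatuses
  echo "== Worker nodes registered by their kubelets =="
  kubectl get nodes -o wide
  echo "== Pods and the node the scheduler assigned each one to =="
  kubectl get pods --all-namespaces -o wide
}
```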

Example – Application server

With the following example, you can set up a new Magnum cluster that uses Kubernetes as its container orchestration engine (COE). The Kubernetes cluster will deploy a simple application server running WordPress and listening on port 8080, as follows:

- Create a new Magnum cluster template to deploy a Kubernetes cluster:

# magnum cluster-template-create --name coe-k8s-template \
  --image fedora-latest \
  --keypair-id pp_key \
  --external-network-id pub-net \
  --dns-nameserver 8.8.8.8 \
  --flavor-id m1.small \
  --docker-volume-size 4 \
  --network-driver flannel \
  --coe kubernetes

Note that the previous command assumes the existence of an image named fedora-latest (a Fedora Atomic image) in the Glance image repository, a key pair named pp_key, and an external Neutron network named pub-net. Adjust these arguments to match your environment.
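Before creating the template, the prerequisites named in the note (plus the flavor) can be checked with the openstack client. A minimal sketch, assuming the client is installed and your credentials are loaded, for example from an openrc file; the function name check_magnum_prereqs is hypothetical, and its defaults simply mirror the values used in the template command.

```shell
# Hypothetical helper: verifies that the resources referenced by the
# cluster template exist. Defaults mirror the tutorial's values.
check_magnum_prereqs() {
  local image="${1:-fedora-latest}" keypair="${2:-pp_key}"
  local network="${3:-pub-net}" flavor="${4:-m1.small}" missing=0
  if ! openstack image show "$image" >/dev/null 2>&1; then
    echo "missing Glance image: $image"; missing=1
  fi
  if ! openstack keypair show "$keypair" >/dev/null 2>&1; then
    echo "missing key pair: $keypair"; missing=1
  fi
  if ! openstack network show "$network" >/dev/null 2>&1; then
    echo "missing Neutron network: $network"; missing=1
  fi
  if ! openstack flavor show "$flavor" >/dev/null 2>&1; then
    echo "missing Nova flavor: $flavor"; missing=1
  fi
  # Non-zero when at least one resource could not be found
  return "$missing"
}
```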

- Initiate a Kubernetes cluster with one master and two worker nodes by executing the following magnum command:

# magnum cluster-create --name kubernetes-cluster \
  --cluster-template coe-k8s-template \
  --master-count 1 \
  --node-count 2
+---------------------+-------------------------------------+
| Property            | Value                               |
+---------------------+-------------------------------------+
| status              | CREATE_IN_PROGRESS                  |
| cluster_template_id | 258e44e3-fe33-8892-be3448cfe3679822 |
| uuid                | 3c3345d2-983d-ff3e-0109366e342021f4 |
| stack_id            | dd3e3020-9833-477c-cc3e012ede5f5f0a |
| status_reason       | -                                   |
| created_at          | 2017-12-11T16:20:08+01:00           |
| name                | kubernetes-cluster                  |
| updated_at          | -                                   |
| api_address         | -                                   |
| coe_version         | -                                   |
| master_addresses    | []                                  |
| create_timeout      | 60                                  |
| node_addresses      | []                                  |
| master_count        | 1                                   |
| container_version   | -                                   |
| node_count          | 2                                   |
+---------------------+-------------------------------------+

- Verify the creation of the new Kubernetes cluster. You can also follow the progress of the deployment from the Horizon dashboard, in the stack section, which shows the events of each resource provisioned by the stack:

# magnum cluster-show kubernetes-cluster
+---------------------+--------------------------------------------+
| Property            | Value                                      |
+---------------------+--------------------------------------------+
| status              | CREATE_COMPLETE                            |
| cluster_template_id | 258e44e3-fe33-8892-be3448cfe3679822        |
| uuid                | 3c3345d2-983d-ff3e-0109366e342021f4        |
| stack_id            | dd3e3020-9833-477c-cc3e012ede5f5f0a        |
| status_reason       | Stack CREATE completed successfully        |
| created_at          | 2017-12-11T16:20:08+01:00                  |
| name                | kubernetes-cluster                         |
| updated_at          | 2017-12-11T16:23:22+01:00                  |
| discovery_url       | https://discovery.etcd.io/ed33fe3a38ff2d4a |
| api_address         | tcp://192.168.47.150:2376                  |
| coe_version         | 1.0.0                                      |
| master_addresses    | ['192.168.47.150']                         |
| create_timeout      | 60                                         |
| node_addresses      | ['192.168.47.151', '192.168.47.152']       |
| master_count        | 1                                          |
| container_version   | 1.9.1                                      |
| node_count          | 2                                          |
+---------------------+--------------------------------------------+
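Instead of watching Horizon, the cluster status can also be polled from the command line. A minimal sketch, assuming the magnum client prints the property table shown above; the helper name wait_for_cluster and its interval and attempt defaults are our own choices, not part of Magnum.

```shell
# Hypothetical helper: polls "magnum cluster-show" until the cluster is built.
# Returns 0 on CREATE_COMPLETE, 1 on CREATE_FAILED, 2 if attempts run out.
wait_for_cluster() {
  local name="$1" interval="${2:-30}" attempts="${3:-60}" i=1 status
  while [ "$i" -le "$attempts" ]; do
    # Pick the value of the "status" row (not "status_reason") from the table
    status="$(magnum cluster-show "$name" |
      awk -F'|' '$2 ~ /^ *status *$/ { gsub(/ /, "", $3); print $3 }')"
    echo "attempt $i: $name is ${status:-UNKNOWN}"
    case "$status" in
      CREATE_COMPLETE) return 0 ;;
      CREATE_FAILED) return 1 ;;
    esac
    sleep "$interval"
    i=$((i + 1))
  done
  return 2
}
```

For example, wait_for_cluster kubernetes-cluster 30 60 checks every 30 seconds for up to 30 minutes.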

- Generate the signed client certificates needed to log in to the deployed Kubernetes cluster. The following command places the necessary TLS certificates and key in the cluster directory, kubernetes_cluster_dir:

# magnum cluster-config kubernetes-cluster \
  --dir kubernetes_cluster_dir
{'tls': True, 'cfg_dir': kubernetes_cluster_dir '.', 'docker_host': u'tcp://192.168.47.150:2376'}

- The generated certificates are in the kubernetes_cluster_dir directory:

# ls kubernetes_cluster_dir
ca.pem cert.pem key.pem
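One way to use these three files from a workstation is to build a kubeconfig with kubectl config. A minimal sketch: the helper name write_kubeconfig, the user and context names, and the API server URL you pass in are assumptions; the URL should point at your cluster's Kubernetes API endpoint, which is typically served over HTTPS.

```shell
# Hypothetical helper: builds a kubeconfig from the Magnum-generated TLS files.
# Usage: write_kubeconfig <cert-dir> <api-server-url> [cluster-name]
write_kubeconfig() {
  local dir="$1" server="$2" name="${3:-kubernetes-cluster}"
  local cfg="$dir/config"
  kubectl config set-cluster "$name" --server="$server" \
    --certificate-authority="$dir/ca.pem" --embed-certs=true \
    --kubeconfig="$cfg" >/dev/null || return 1
  kubectl config set-credentials "$name-admin" \
    --client-certificate="$dir/cert.pem" --client-key="$dir/key.pem" \
    --embed-certs=true --kubeconfig="$cfg" >/dev/null || return 1
  kubectl config set-context "$name" --cluster="$name" \
    --user="$name-admin" --kubeconfig="$cfg" >/dev/null || return 1
  kubectl config use-context "$name" --kubeconfig="$cfg" >/dev/null || return 1
  # Print the path so callers can export KUBECONFIG
  echo "$cfg"
}
```

With API_URL as a hypothetical variable holding the endpoint, export KUBECONFIG="$(write_kubeconfig kubernetes_cluster_dir "$API_URL")" followed by kubectl get nodes would then talk to the cluster.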

- Access the master node through SSH and check the new Kubernetes cluster info:

# ssh [email protected]
[email protected] ~]$ kubectl cluster-info
Kubernetes master is running at http://10.10.10.47:8080
KubeUI is running at http://10.10.10.47:8080/api/v1/proxy/namespaces/kube-system/services/kube-ui
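The cluster-info output only confirms that the master answers. A small follow-up check, sketched below under the assumption that kubectl on the master can list nodes, confirms that the worker nodes requested with --node-count 2 have registered and report Ready. The helper check_ready_nodes is hypothetical, and a node whose status reads, for example, Ready,SchedulingDisabled is not counted.

```shell
# Hypothetical helper: succeeds when at least <expected> nodes report "Ready".
check_ready_nodes() {
  local expected="${1:-2}" ready
  # The second column of "kubectl get nodes" output is the node STATUS
  ready="$(kubectl get nodes --no-headers |
    awk '$2 == "Ready" { n++ } END { print n + 0 }')"
  echo "$ready/$expected nodes Ready"
  [ "$ready" -ge "$expected" ]
}
```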

- To get WordPress up and running, you’ll need to deploy your first pods on the Kubernetes worker nodes. For this purpose, you can use Helm, a Kubernetes package manager. Helm provides an easy way to install, release, and version packages, which it calls charts. Install Helm on the master node by downloading a release archive (v2.7.2 here) as follows:

$ wget https://get.helm.sh/helm-v2.7.2-linux-amd64.tar.gz

- Extract the Helm archive and move the executable file to the bin directory:

$ tar -zxvf helm-v2.7.2-linux-amd64.tar.gz
$ mv linux-amd64/helm /usr/local/bin
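The download and extraction steps can be wrapped in one function that keeps the archive name and the extracted path in sync. A minimal sketch, assuming the Helm 2 release binaries are published as helm-VERSION-linux-amd64.tar.gz under https://get.helm.sh/ and that curl is available; install_helm is a hypothetical name.

```shell
# Hypothetical helper: downloads a Helm release, extracts it and installs the
# binary. Assumes the get.helm.sh download location and Linux amd64 archives.
install_helm() {
  local version="${1:-v2.7.2}" bindir="${2:-/usr/local/bin}"
  local archive="helm-${version}-linux-amd64.tar.gz" tmp rc
  tmp="$(mktemp -d)" || return 1
  # A .tar.gz must be unpacked with tar; gunzip alone only yields a .tar file
  curl -fsSL -o "$tmp/$archive" "https://get.helm.sh/$archive" &&
    tar -xzf "$tmp/$archive" -C "$tmp" &&
    mkdir -p "$bindir" &&
    install -m 0755 "$tmp/linux-amd64/helm" "$bindir/helm"
  rc=$?
  rm -rf "$tmp"
  return "$rc"
}
```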

- Initialize the Helm environment and install the server component. The server portion of Helm is called Tiller; it runs within the Kubernetes cluster and manages the releases:

$ helm init
Creating /root/.helm
Creating /root/.helm/repository
Creating /root/.helm/repository/cache
Creating /root/.helm/repository/local
Creating /root/.helm/plugins
Creating /root/.helm/starters
Creating /root/.helm/repository/repositories.yaml
Writing to /root/.helm/repository/cache/stable-index.yaml
$HELM_HOME has been configured at /root/.helm.
Tiller (the helm server side component) has been installed into your Kubernetes Cluster.
Happy Helming!
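Because Tiller runs as a deployment inside the cluster, it can take a moment before Helm commands that need the server side succeed. A minimal sketch for Helm 2, assuming helm init created its default deployment, tiller-deploy, in the kube-system namespace; wait_for_tiller is a hypothetical helper.

```shell
# Hypothetical helper: blocks until the Tiller deployment has rolled out,
# then prints the client and server (Tiller) versions.
wait_for_tiller() {
  kubectl --namespace kube-system rollout status deployment/tiller-deploy &&
    helm version
}
```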

- Once Helm is installed, you can install applications and manage packages with simple commands. Start by updating the chart repository:

$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Skip local chart repository
Writing to /root/.helm/.helm/repository/cache/stable-index.yaml
...Successfully got an update from the "stable" chart repository
Update Complete. Happy Helming!

- Installing WordPress is straightforward with Helm command line tools:

$ helm install stable/wordpress
…
NAME: wodd-breest
LAST DEPLOYED: Fri Dec 15 16:15:55 2017
NAMESPACE: default
STATUS: DEPLOYED
…

The output of the helm install command shows that the release was deployed with its default configuration, and it lists the resources created from the WordPress chart. The installation is configurable through the template files defined by the application chart: the default WordPress release can be customized by overriding the chart's default values on the helm install command line.

The default templates for your deployed WordPress application can be found at https://github.com/kubernetes/charts/tree/master/stable/wordpress/templates. To install WordPress with a different set of values, issue the same command, specifying a name for the new release and a file containing the updated values:

$ helm install --name private-wordpress \
  -f values.yaml \
  stable/wordpress
NAME: private-wordpress
LAST DEPLOYED: Fri Dec 15 17:17:33 2017
NAMESPACE: default
STATUS: DEPLOYED
…
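The values.yaml file passed with -f only needs the keys you want to override. A minimal sketch that writes one; the key names (wordpressUsername, wordpressPassword, wordpressEmail, wordpressBlogName) are assumed from the stable/wordpress chart of that period, so check them against the chart's own values.yaml before use. The helper write_wordpress_values and the example values are hypothetical.

```shell
# Hypothetical helper: writes a minimal values file for stable/wordpress.
# Key names are assumptions based on the chart's values.yaml; verify them.
write_wordpress_values() {
  local file="${1:-values.yaml}" password="$2"
  if [ -z "$password" ]; then
    echo "usage: write_wordpress_values <file> <admin-password>" >&2
    return 1
  fi
  # Unquoted EOF so that $password is expanded into the file
  cat > "$file" <<EOF
wordpressUsername: admin
wordpressPassword: "$password"
wordpressEmail: admin@example.com
wordpressBlogName: Private WordPress
EOF
}
```

A password containing double quotes would need escaping before being written this way.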

You can find the stable charts ready to deploy using Kubernetes Helm package manager at https://github.com/kubernetes/charts/tree/master/stable.

- Now that you’ve deployed the first release of WordPress in Kubernetes in the blink of an eye, you can verify the Kubernetes pod status:

# kubectl get pods
NAME                      READY     STATUS    RESTARTS   AGE
my-priv-wordpress-…89501  1/1       Running   0          6m
my-priv-mariadb-…..25153  1/1       Running   0          6m
...
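A pod can report Running before all of its containers are ready, so it is worth checking the READY column as well. A minimal sketch that succeeds only when every listed pod is Running with all containers ready; it assumes the Helm 2-era convention that chart pods carry a release=RELEASE-NAME label, and release_pods_ready is a hypothetical name.

```shell
# Hypothetical helper: succeeds when all pods of a Helm release are Running
# and every container is ready (READY column "n/n").
release_pods_ready() {
  local release="$1"
  kubectl get pods -l "release=$release" --no-headers |
    awk '{
      split($2, r, "/")
      if ($3 != "Running" || r[1] != r[2]) bad++
    }
    END { if (NR == 0 || bad + 0 > 0) exit 1 }'
}
```

For example, release_pods_ready private-wordpress && echo ready.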

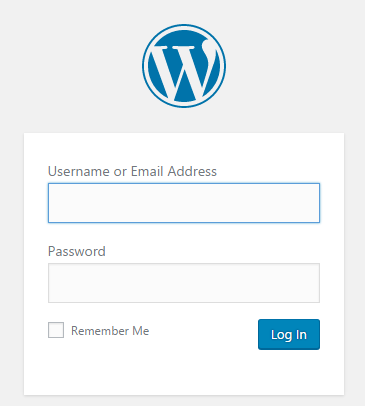

- To provide access to the WordPress dashboard, expose it by forwarding local port 8080 on the master node to port 80 of the WordPress pod:

$ kubectl port-forward my-priv-wordpress-42345-ef89501 8080:80
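Because pod names carry a generated suffix, copying them by hand is error-prone. A minimal sketch that looks up the first pod whose name starts with a given prefix and forwards a local port to its port 80; forward_wordpress is a hypothetical helper, and the prefix you pass depends on your release name.

```shell
# Hypothetical helper: port-forwards to the first pod whose name starts with
# the given prefix. Usage: forward_wordpress <pod-prefix> [local-port]
forward_wordpress() {
  local prefix="$1" port="${2:-8080}" pod
  pod="$(kubectl get pods --no-headers |
    awk -v p="$prefix" 'index($1, p) == 1 { print $1; exit }')"
  if [ -z "$pod" ]; then
    echo "no pod found with prefix $prefix" >&2
    return 1
  fi
  kubectl port-forward "$pod" "$port:80"
}
```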

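- WordPress can then be reached by pointing to the master node's IP address and port 8080:

http://192.168.47.150:8080

Note that kubectl port-forward listens only on the loopback interface by default; for the forwarded port to answer on the master's external address, kubectl 1.13 and later accept the --address 0.0.0.0 option.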

You have now successfully run a Kubernetes cluster and deployed a simple application server running WordPress.

If you enjoyed reading this article, explore OpenStack with Omar Khedher’s Extending OpenStack. This book will guide you through new features of the latest OpenStack releases and how to bring them into production in an agile way.
