The retail market is getting an extreme makeover, like it or not. At a time when online sales have upended brick-and-mortar shopping habits, Target Corporation has upgraded its back-end infrastructure to improve how it does business and serves customers.

It’s a big job: Target has 1,829 stores in the United States with about 240 million square feet of retail space. It operates 39 distribution centers across the country and employs about 350,000 people. Target.com is the fourth-most visited website in the US, with more than 26 million unique visitors each month.

The company has moved from the traditional suburban large-format store to smaller, more urban, college campus-style stores. It has introduced services like Restock, which lets customers order from home and have household items delivered the next day for a small fee. Target has also made a few key acquisitions this year: Shipt, a same-day grocery delivery service, and Grand Junction, a shipping logistics company.

To make all these changes and innovate in its stores, online and on mobile, the company has gone through a transformation of its own, including broad adoption of open source and agile practices across all its software teams. In addition, everything the company does now has an API. “OpenStack is an important enabler for that,” said principal engineer Marcus Jensen, speaking at the OpenStack Summit about how the massive retailer deploys and uses OpenStack.

Before 2014, Target had two production data centers. Everything was centrally managed on a mix of blade and rack-mount infrastructure, built on Intel 2630L processors with 384 GB of RAM, with traditional Layer 2 switching used broadly across the data centers. With a manual approval and provisioning process in place, it could take weeks to get a virtual machine (VM) assigned.

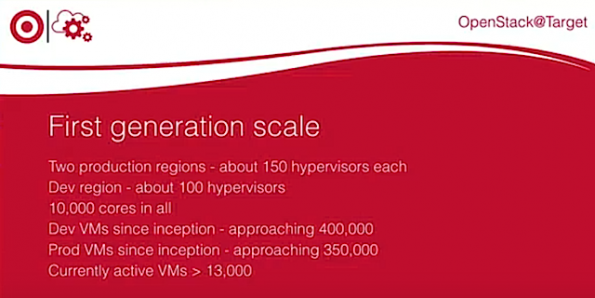

Target deployed OpenStack for the first time in 2015. The team grew quickly, adding about half a dozen engineers in the first year. The cloud ran mostly Linux development workloads, along with some Windows workloads to support the company’s point-of-sale development efforts. Most of these were still internal-facing projects like inventory management, however. Now Target has about 150 hypervisors in each data center, plus another facility on campus that developers use for initial deployment work before moving it to production. That adds up to an eye-popping total of about 10,000 cores and 350,000 virtual machines in production and slightly more than 13,000 active VMs.

The team upgraded to a Layer 3 spine/leaf topology, which they deploy in the fabric as a private operations network. There are three separate VLANs and three separate IP networks. The first carries control-plane traffic to the hypervisors and takes care of storage. The second is a routable service network that supports incoming API traffic. The third is a SNAT network, on its own VLAN, where network address translation for the VMs occurs.
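As a rough illustration of that three-network split, the sketch below models the control, service and NAT networks as plain data and checks that they stay separate. The VLAN IDs and address ranges are invented for the example; the talk did not give Target’s actual values.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Network:
    name: str
    vlan_id: int                  # hypothetical VLAN ID
    cidr: ipaddress.IPv4Network   # hypothetical address range
    purpose: str


def build_networks():
    """Return the three networks described above, with made-up numbering."""
    return [
        Network("control", 101, ipaddress.ip_network("10.10.0.0/24"),
                "control plane to hypervisors and storage"),
        Network("service", 102, ipaddress.ip_network("10.20.0.0/24"),
                "routable network for incoming API traffic"),
        Network("nat", 103, ipaddress.ip_network("10.30.0.0/24"),
                "network address translation for VM traffic"),
    ]


def validate(networks):
    """Return True if every VLAN ID is unique and no two CIDRs overlap."""
    vlan_ids = [n.vlan_id for n in networks]
    if len(set(vlan_ids)) != len(vlan_ids):
        return False
    for i, a in enumerate(networks):
        for b in networks[i + 1:]:
            if a.cidr.overlaps(b.cidr):
                return False
    return True


if __name__ == "__main__":
    nets = build_networks()
    for n in nets:
        print(f"{n.name:8} VLAN {n.vlan_id}  {n.cidr}  {n.purpose}")
    print("valid:", validate(nets))
```

Keeping each role on its own VLAN and address range means a simple check like `validate` can catch accidental overlap before a change reaches the fabric.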

Target’s team put together new hardware, too, standardizing on rack-mount servers. Each rack consists of two blade chassis, so the data center now has 32 Intel 2690 blades per rack with half a terabyte of RAM each.

The second-generation deployment was powered by Ironic, which uses the same OpenStack APIs Target was already comfortable with. They used Ironic bare-metal provisioning, starting with the vendor implementation of TripleO with Mitaka. They eventually replaced TripleO with an internally developed stack-per-node model to help with future updates. Jensen and his team also built their own DIB images, modifying diskimage-builder to take advantage of Ansible in the process.

They use Chef for config management, mostly because it was already well entrenched at Target, and are moving toward containerizing everything. They transitioned from a vendor OS and OpenStack distribution to CentOS and RDO, so that Target is now completely open source. They use HashiCorp Consul and Vault, relying on Vault for secret management while planning to use Consul more for service discovery and some configuration management. They use Jenkins and Drone for CI, along with other tools like GitHub Enterprise and Artifactory. The team mostly uses Telegraf to collect metrics and sends them, along with their logs, to Kafka topics, where they are picked up by internal aggregation services that feed Grafana and Kibana.
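To make the metrics path more concrete, here is a minimal sketch of formatting a metric and handing it to a topic-based transport. It assumes the metrics travel as InfluxDB line protocol, which is Telegraf’s default serialization; the talk did not say which format Target uses. The topic name, the host tag and the `send` callable are all hypothetical, and an in-memory list stands in for a real Kafka producer.

```python
def to_line_protocol(measurement, tags, fields, timestamp_ns):
    """Format one metric as a line-protocol string.

    Simplified: no escaping of spaces, commas or quotes in names and values.
    """
    tag_part = ",".join(f"{k}={v}" for k, v in sorted(tags.items()))
    field_items = []
    for key, value in sorted(fields.items()):
        if isinstance(value, bool):          # check bool before int
            field_items.append(f"{key}={'true' if value else 'false'}")
        elif isinstance(value, int):
            field_items.append(f"{key}={value}i")   # integers carry an 'i' suffix
        elif isinstance(value, float):
            field_items.append(f"{key}={value}")
        else:
            field_items.append(f'{key}="{value}"')  # strings are double-quoted
    head = f"{measurement},{tag_part}" if tag_part else measurement
    return f"{head} {','.join(field_items)} {timestamp_ns}"


def publish(lines, send, topic="metrics"):
    """Send each line to the topic via the supplied callable.

    `send(topic, payload_bytes)` stands in for a real producer's send method.
    """
    for line in lines:
        send(topic, line.encode("utf-8"))


if __name__ == "__main__":
    sent = []  # in-memory stand-in for a Kafka topic
    line = to_line_protocol(
        "cpu", {"host": "hv01"}, {"usage_idle": 97.5, "cores": 32}, 1
    )
    publish([line], lambda topic, payload: sent.append((topic, payload)))
    print(sent)
```

Passing the transport in as a callable keeps the formatting logic testable without a running broker.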

The third generation is currently in the planning stages, and includes an internally developed Target Application Platform (TAP), which lets applications run on a variety of infrastructure, including OpenStack, Kubernetes, or even public clouds.

You can catch the entire talk below.
